Truthlikeness

Truth is the aim of inquiry. Nevertheless, some falsehoods seem to realize this aim better than others. Some truths better realize the aim than other truths. And perhaps even some falsehoods realize the aim better than some truths do. The dichotomy of the class of propositions into truths and falsehoods should thus be supplemented with a more fine-grained ordering — one which classifies propositions according to their closeness to the truth, their degree of truthlikeness or verisimilitude. The logical problem of truthlikeness is to give an adequate account of the concept and to explore its logical properties. Of course, the logical problem intersects with problems in both epistemology and value theory.

In §1 we will examine the basic assumptions which generate the logical problem of truthlikeness, and which in part explain why the problem emerged when it did. Attempted solutions to the problem quickly proliferated, but it has become customary to gather them together into two broad lines of attack. The first, the content approach (§2), was initiated by Popper in his ground-breaking work. However, because it treats truthlikeness as a function of just two variables, neither Popper's original proposals, nor subsequent attempts to elaborate them, can fully capture the richness of the concept. The second, the likeness approach (§3), takes the likeness in truthlikeness seriously. Although it promises to catch more of the complexity of the concept than does the content approach, it faces two serious problems: whether the approach can be suitably generalized to complex examples, and whether it can be developed in a way that is translation invariant (§5). This raises the question of whether there might be a hybrid approach (§4) incorporating the best features of the content and likeness approaches. Recent results suggest that hybridism has special difficulties of its own.

- 1. The Logical Problem

- 2. The Content Approach

- 3. The Likeness Approach

- 4. Are There Hybrid Approaches?

- 5. Translation Invariance

- Bibliography

- Other Internet Resources

- Related Entries

1. The Logical Problem

Truth, perhaps even more than beauty and goodness, has been the target of an extraordinary amount of philosophical dissection and speculation. This is unsurprising. After all, truth is the primary aim of all inquiry and a necessary condition of knowledge. And yet (as the redundancy theorists of truth have emphasized) there is something disarmingly simple about truth. That the number of planets is ten is true just in case, well, … the number of planets is ten. By comparison with truth, the more complex, and much more interesting, concept of truthlikeness has only recently become the subject of serious investigation. The proposition that the number of planets in our solar system is ten may be false, but it is quite a bit closer to the truth than the proposition that the number of planets in our solar system is ten billion. Investigation into truthlikeness began with a tiny trickle of activity in the early nineteen sixties; became something of a torrent from the mid seventies until the late eighties; and is now a steady current.

Truthlikeness is a relative latecomer to the philosophical scene largely because it wasn't until the latter half of the twentieth century that mainstream philosophers gave up on the Cartesian goal of infallible knowledge. The idea that we are quite possibly, even probably, mistaken in our most cherished beliefs, that they might well be just false, was mostly considered tantamount to capitulation to the skeptic. By the middle of the twentieth century, however, it was clear that natural science postulated a very odd world behind the phenomena, one rather remote from our everyday experience, one which renders many of our commonsense beliefs, as well as previous scientific theories, strictly speaking, false. Further, the increasingly rapid turnover of scientific theories suggested that, far from being established as certain, they are ever vulnerable to refutation, and typically are eventually refuted, to be replaced by some new theory. Taking the dismal view, the history of inquiry is a history of theories shown to be false, replaced by other theories awaiting their turn at the guillotine.

Realism affirms that the primary aim of inquiry is the truth of some matter. Epistemic optimism affirms that the history of inquiry is one of progress with respect to its primary aim. But fallibilism affirms that, typically, our theories are false or very likely to be false, and when shown to be false they are replaced by other false theories. To combine all three ideas, we must affirm that some false propositions better realize the goal of truth — are closer to the truth — than others. So the optimistic realist who has discarded infallibilism has a problem — the logical problem of truthlikeness.

While a multitude of apparently different solutions to the problem have been proposed, it is now standard to classify them into two main approaches — the content approach and the likeness approach.

2. The Content Approach

Karl Popper was the first philosopher to take the logical problem of truthlikeness seriously enough to make an assay on it. This is not surprising, since Popper was also the first prominent realist to embrace a radical fallibilism about science while trumpeting the epistemic superiority of the enterprise.

According to Popper, Hume had shown not only that we can't verify any interesting theory, we can't even render it more probable. Luckily, there is an asymmetry between verification and falsification. While no finite amount of data can verify or probabilify an interesting scientific theory, they can falsify the theory. According to Popper, it is the falsifiability of a theory which makes it scientific. In his early work, he implied that the only kind of progress an inquiry can make consists in falsification of theories. This is a little depressing, to say the least. What it lacks is the idea that a succession of falsehoods can constitute genuine cognitive progress. Perhaps this is why, for many years after first publishing these ideas in his 1934 Logik der Forschung, Popper received pretty short shrift from the philosophers. If all we can say with confidence is “Missed again!” and “A miss is as good as a mile!”, and the history of inquiry is a sequence of such misses, then epistemic pessimism follows. Popper eventually realized that this naive falsificationism is compatible with optimism provided we have an acceptable notion of verisimilitude (or truthlikeness). If some false hypotheses are closer to the truth than others, if verisimilitude admits of degrees, then the history of inquiry may turn out to be one of steady progress towards the goal of truth. Moreover, it may be reasonable, on the basis of the evidence, to conjecture that our theories are indeed making such progress even though it would be unreasonable to conjecture that they are true simpliciter.

Popper saw very clearly that the concept of truthlikeness is easily confused with the concept of epistemic probability, and that it has often been so confused. (See Popper 1963 for a history of the confusion.) Popper's insight here was undoubtedly facilitated by his deep, and largely unjustified, antipathy to epistemic probability. His starkly falsificationist account favors bold, contentful theories. Degree of informative content varies inversely with probability: the greater the content, the less likely a theory is to be true. So if you are after theories which seem, on the evidence, to be true, then you will eschew those which make bold (that is, highly improbable) predictions. On this picture, the quest for theories with high probability is simply wrongheaded. Certainly we want inquiry to yield true propositions, but not any old truths will do. A tautology is a truth, and as certain as anything can be, but it is never the answer to any interesting inquiry outside mathematics and logic. What we want are deep truths, truths which capture more, rather than less, of the whole truth.

What, then, is the source of the widespread conflation of truthlikeness with probability? Probability — at least of the epistemic variety — measures the degree of seeming to be true, while truthlikeness measures degree of being similar to the truth. Seeming and being similar might at first strike one as closely related, but of course they are rather different. Seeming concerns the appearances whereas being similar concerns the objective facts, facts about similarity or likeness. Even more important, there is a difference between being true and being the truth. The truth, of course, has the property of being true, but not every proposition that is true is the truth in the sense required by the aim of inquiry. The truth of a matter at which an inquiry aims has to be the complete, true answer. Thus there are two dimensions along which probability (seeming to be true) and truthlikeness (being similar to the truth) differ radically.

To see this distinction clearly, and to articulate it, was one of Popper's most significant contributions, not only to the debate about truthlikeness, but to philosophy of science and logic in general. As we will see, however, his deep antagonism to probability combined with his passionate love affair with boldness was both a blessing and a curse. The blessing: it led him to produce not only the first interesting and important account of truthlikeness, but to initiate a whole approach to the problem — the content approach (see Oddie 1986a, Zwart 2001). The curse, as is now almost universally recognized: content alone is insufficient to characterize truthlikeness.

Popper made the first assay on the problem in his famous collection Conjectures and Refutations. First, let a matter for investigation be circumscribed by a language L adequate for discussing it. (Popper was a great admirer of Tarski's assay on the concept of truth and strove to model his theory of truthlikeness on Tarski's theory.) The world induces a partition of sentences of L into those that are true and those that are false. The set of all true sentences is thus a complete true account of the world, as far as that investigation goes. It is aptly called the Truth, T. T is the target of the investigation couched in L. It is the theory that we are seeking, and, if truthlikeness is to make sense, theories other than T, even false theories, come more or less close to capturing T.

T, the Truth, is a theory only in the technical Tarskian sense, not in the ordinary everyday sense of that term. It is a set of sentences closed under the consequence relation: a consequence of some sentences in the set is also a sentence in the set. T may not be finitely axiomatisable, or even axiomatisable at all. Where the language involves elementary arithmetic it follows (from Gödel's incompleteness theorem) that T won't be axiomatisable. However, it is a perfectly good set of sentences all the same. In general we will follow the Tarski-Popper usage here and call any set of sentences closed under consequence a theory, and we will assume that each proposition we deal with is identified with the theory it generates in this sense. (Note that when theories are classes of sentences, theory A logically entails theory B just in case B is a subset of A.)

The complement of T, the set of false sentences F, is not a theory even in this technical sense. Since falsehoods always entail truths, F is not closed under the consequence relation. (This is part of the reason we have no complementary expression like the Falsth. The set of false sentences does not describe a possible alternative to the actual world.) But F too is a perfectly good set of sentences. Any theory A that can be formulated in L will thus divide its consequences between T and F. Popper called the intersection of A and T the truth content of A (AT), and the intersection of A and F the falsity content of A (AF). Any theory A is thus the union of its non-overlapping truth content and falsity content. Note that since every theory entails all logical truths, these will constitute a special set, at the center of T, which will be included in every theory, whether true or false.

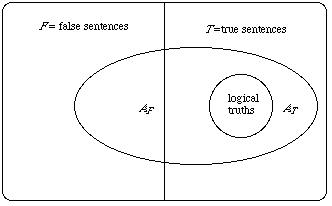

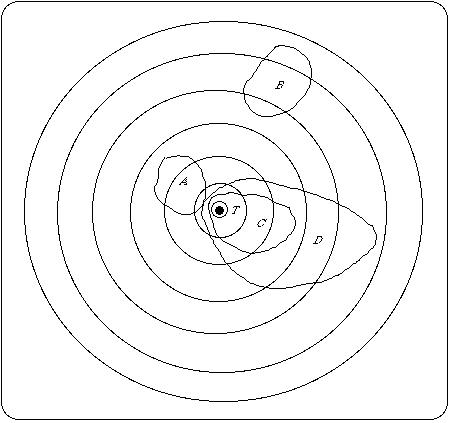

A false theory will cover some of F, but because every false theory has true consequences, it will also overlap with some of T (Diagram 1).

Diagram 1: Truth and falsity contents of false theory A

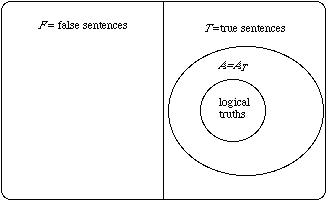

A true theory, however, will only cover T (Diagram 2):

Diagram 2: True theory A is identical to its own truth content

Amongst true theories, then, it seems that the more true sentences a theory entails, the closer it gets to T, and hence the more truthlike it is. Set theoretically that simply means that, where A and B are both true, A will be more truthlike than B just in case B is a proper subset of A (which for true theories means that BT is a proper subset of AT). Call this principle: the value of content for truths.

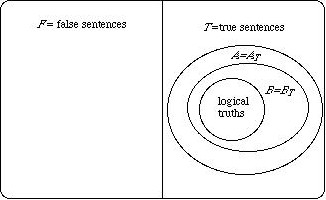

Diagram 3: True theory A has more truth content than true theory B

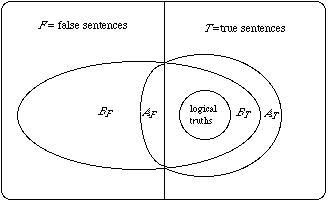

This essentially syntactic account of truthlikeness has some nice features. It induces a partial ordering of truths, with the whole Truth T at the top of the ordering: T is closer to the Truth than any other true theory. The set of logical truths is at the bottom: further from the Truth than any other true theory. In between these two extremes, true theories are ordered simply by logical strength: the more logical content, the closer to the Truth. Since probability varies inversely with logical strength, amongst truths the theory with the greatest truthlikeness (T) must have the smallest probability, and the theory with the largest probability (the logical truth) is the furthest from the Truth. Popper made a bold and simple generalization of this. Just as truth content (coverage of T) counts in favour of truthlikeness, falsity content (coverage of F) counts against. In general then, a theory A is closer to the truth if it has more truth content without engendering more falsity content, or has less falsity content without sacrificing truth content (diagram 4):

Diagram 4: False theory A closer to the Truth than false theory B

The generalization of the truth content comparison also has some nice features. It preserves the comparisons of true theories mentioned above. The truth content AT of a false theory A (itself a theory in the Tarskian sense) will clearly be closer to the truth than A (diagram 1). More generally, a true theory A will be closer to the truth than a false theory B provided A's truth content exceeds B's.

Despite these nice features the account suffers from the following fatal flaw: it entails that no false theory is closer to the truth than any other. This incommensurability result was proved independently by Pavel Tichý and David Miller (Miller 1974, and Tichý 1974). It is instructive to see why. Let us suppose that A and B are both false, and that A's truth content exceeds B's. Let a be a true sentence entailed by A but not by B. Let f be any falsehood entailed by A. Since A entails both a and f, the conjunction a&f is a falsehood entailed by A, and so part of A's falsity content. If a&f were also part of B's falsity content, B would entail both a and f. But then it would entail a, contrary to the assumption. Hence a&f is in A's falsity content and not in B's. So A's truth content cannot exceed B's without A's falsity content also exceeding B's. Suppose now that B's falsity content exceeds A's. Let g be some falsehood entailed by B but not by A, and let f, as before, be some falsehood entailed by A. The sentence f ⊃ g is a truth, and since it is entailed by g, is in B's truth content. If it were also in A's then both f and f ⊃ g would be consequences of A and hence so would g, contrary to the assumption. Thus A's truth content lacks a sentence, f ⊃ g, which is in B's. So B's falsity content cannot exceed A's without B's truth content also exceeding A's. The relationship depicted in diagram 4 simply cannot obtain.
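The Tichý-Miller result can be checked by brute force in a very small setting. The following Python sketch is only an illustration under stated assumptions: it uses a toy language with two atoms (hypothetically named p and q), stipulates an actual world, and identifies each sentence with its range, so that logically equivalent sentences are counted once. The helper names are invented for the example and are not Popper's or Tichý's own apparatus.

```python
from itertools import combinations, product

# Assumption: a toy language with two atoms; each proposition is identified
# with its range (the set of worlds in which it is true).
ATOMS = ("p", "q")
WORLDS = list(product([True, False], repeat=len(ATOMS)))
ACTUAL = WORLDS[0]  # stipulated actual world: p and q both true


def powerset(items):
    """Return every subset of items as a frozenset."""
    items = list(items)
    return [frozenset(c) for n in range(len(items) + 1)
            for c in combinations(items, n)]


PROPOSITIONS = powerset(WORLDS)  # 16 propositions over 4 worlds


def consequences(theory):
    """Propositions entailed by a theory: those whose range includes its range."""
    return {p for p in PROPOSITIONS if theory <= p}


def truth_content(theory):
    """True consequences of the theory."""
    return {p for p in consequences(theory) if ACTUAL in p}


def falsity_content(theory):
    """False consequences of the theory."""
    return {p for p in consequences(theory) if ACTUAL not in p}


def popper_closer(a, b):
    """Popper's comparison: more truth content without more falsity content,
    or less falsity content without less truth content."""
    a_t, b_t = truth_content(a), truth_content(b)
    a_f, b_f = falsity_content(a), falsity_content(b)
    return (b_t < a_t and a_f <= b_f) or (b_t <= a_t and a_f < b_f)


false_theories = [p for p in PROPOSITIONS if ACTUAL not in p]
ranked_false_pairs = [(a, b) for a in false_theories for b in false_theories
                      if popper_closer(a, b)]
print(len(false_theories), len(ranked_false_pairs))
```

In this toy space the list of ranked pairs of false theories comes out empty, as the argument predicts, while the comparison still orders true theories by logical strength.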

It is tempting at this point (and Popper was so tempted) to retreat to something like the comparison of truth contents alone. That is to say, A is at least as close to the truth as B if A entails all of B's truth content, and A is closer to the truth than B just in case A is at least as close as B, and B is not at least as close as A. Call this the Simple Truth Content account.

This Simple Truth Content account preserves Popper's ordering of true propositions. However, it also deems a false proposition the closer to the truth the stronger it is. (Call this principle: the value of content for falsehoods.) According to this principle, since the false proposition that there are seven planets, and all of them are made of green cheese, is logically stronger than the false proposition that there are seven planets, the former is closer to the truth than the latter. So, once we know a theory is false we can be confident that tacking on any old arbitrary proposition, no matter how misleading it is, will lead us inexorably closer to the truth. Amongst false theories, brute logical strength becomes the sole criterion of a theory's likeness to truth. This is the brute strength objection.

After the proven failure of Popper's proposal a number of variations on the content approach have been aired. Some stay within Popper's essentially syntactic paradigm, comparing classes of true and false sentences (e.g. Schurz and Weingartner 1987, Newton-Smith 1981). Others (following a lead from the likeness approach) make the switch to a more semantic paradigm, searching for a plausible theory of distance between the semantic content of sentences, construing these semantic contents as classes of possibilities. One variant of this approach takes the class of models of a language as a surrogate for possible states of affairs (Miller 1978a). The other utilizes a semantics of incomplete possible states like those favored by structuralist accounts of scientific theories (Kuipers 1987b). The idea which these share is that the distance between two propositions is measured by the symmetric difference of the two sets of possibilities. Roughly speaking, the larger the symmetric difference, the greater the distance between the two propositions. Symmetric differences might be compared qualitatively, by means of set-theoretic inclusion, or quantitatively, using some kind of probability measure.

If the truth is taken to be given by a complete possible world (or perhaps represented by a unique model) then we end up with results very close to the truncated version of Popper's account, comparing by truth contents alone (Oddie 1978). In particular, false propositions are closer to the truth the stronger they are. However, if we take the structuralist approach then we will take the relevant possibilities to be “small” states of affairs — small chunks of the world, rather than an entire world — and then the possibility of more fine-grained distinctions between theories opens up. A rather promising exploration of this idea can be found in Volpe 1995.
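To see why the complete-world version collapses into brute strength for falsehoods, it may help to compute symmetric differences directly. The sketch below is an illustration only. It assumes a three-trait weather space (hot, rainy, windy) of the kind used later in this entry, takes the truth to be the singleton of a stipulated actual world, and uses simple counting as the quantitative measure; the function names are hypothetical.

```python
from itertools import product

# Assumption: worlds are triples (h, r, w) of truth values for hot, rainy, windy.
WORLDS = list(product([True, False], repeat=3))
ACTUAL = (True, True, True)       # stipulated actual world
TRUTH = frozenset({ACTUAL})       # the whole truth as a singleton range


def sym_diff_distance(a, b):
    """Quantitative comparison: size of the symmetric difference (counting measure)."""
    return len(a ^ b)


def qualitatively_closer(a, b, target=TRUTH):
    """Qualitative comparison: a's symmetric difference with the target is a
    proper subset of b's."""
    return (a ^ target) < (b ^ target)


# Three false propositions of increasing logical strength.
cold = frozenset(w for w in WORLDS if not w[0])                # ~h
cold_dry = frozenset(w for w in cold if not w[1])              # ~h & ~r
cold_dry_still = frozenset(w for w in cold_dry if not w[2])    # ~h & ~r & ~w

for name, prop in [("~h", cold), ("~h&~r", cold_dry), ("~h&~r&~w", cold_dry_still)]:
    print(name, sym_diff_distance(prop, TRUTH))
```

On these assumptions the distances shrink as the false proposition gets stronger, so the strongest falsehood, which gets every trait wrong, is ranked closest to the truth on both the qualitative and the quantitative comparison.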

The fundamental problem with the original content approach lies not in the way it has been articulated, but rather in the basic underlying assumption: that truthlikeness is a function of just two variables — content and truth value. This assumption has a number of rather problematic consequences.

Two things follow if truthlikeness is a function just of the logical content of a proposition and of its truth value. Firstly, any given proposition A can have only two degrees of verisimilitude: one in case it is false and the other in case it is true. This is obviously wrong. A theory can be false in very many different ways. The proposition that there are eight planets is false whether there are nine planets or a thousand planets, but its degree of truthlikeness is much higher in the first case than in the latter. As we will see below, the degree of truthlikeness of a true theory may also vary according to where the truth lies. Secondly, if we combine the value of content for truths and the value of content for falsehoods, then if we fix truth value, verisimilitude will vary only according to amount of content. So, for example, two equally strong false theories will have to have the same degree of verisimilitude. That's pretty far-fetched. That there are ten planets and that there are ten billion planets are (roughly) equally strong, and both are false in fact, but the latter seems much further from the truth than the former.

Finally, how might strength determine verisimilitude amongst false theories? There seem to be just two plausible candidates: that verisimilitude increases with increasing strength, or that it decreases with increasing strength. Both proposals are at odds with attractive judgements and principles. One does not necessarily make a step toward the truth by reducing the content of a false proposition. The proposition that the moon is made of green cheese is logically stronger than the proposition that either the moon is made of green cheese or it is made of Dutch gouda, but the latter hardly seems a step towards the truth. Nor does one necessarily make a step toward the truth by increasing the content of a false theory. The false proposition that all heavenly bodies are made of green cheese is logically stronger than the false proposition that all heavenly bodies orbiting the earth are made of green cheese, but it doesn't seem to be an improvement.

3. The Likeness Approach

In the wake of the collapse of Popper's articulation of the content approach two philosophers, working quite independently, suggested a radically different approach: one which takes the likeness in truthlikeness seriously (Tichý 1974, Hilpinen 1976). This shift from content to likeness was also marked by an immediate shift from Popper's essentially syntactic approach to a semantic approach, one which trafficks in the semantic contents of sentences.

Traditionally the semantic contents of sentences have been taken to be non-linguistic, or rather non-syntactic, items: propositions. What propositions are is, of course, highly contested, but most agree that a proposition carves the class of possibilities into two sub-classes: those in which the proposition is true and those in which it is false. Call the class of worlds in which the proposition is true its range. Some have proposed that propositions be identified with their ranges (for example, David Lewis, in his 1986). This identification is implausible since, for example, the informative content of 7+5=12 seems distinct from the informative content of 12=12, which in turn seems distinct from the informative content of Gödel's first incompleteness theorem, and yet all three have the same range. They are all true in all possible worlds. Clearly if semantic content is supposed to be sensitive to informative content, classes of possible worlds will not be discriminating enough. We need something more fine-grained for a full theory of semantic content. Despite this, the range of a proposition is certainly an important aspect of informative content, and it is not clear that truthlikeness should be sensitive to differences in the way a proposition picks out its range. So, tentatively at least, it seems that logically equivalent propositions have the same degree of truthlikeness. The proposition that the number of planets is eight, for example, should have the same degree of truthlikeness as the proposition that the square of the number of the planets is sixty four.

There is also not a little controversy over the nature of possible worlds. One view (perhaps Leibniz's and more recently David Lewis's) is that worlds are maximal collections of possible things. Another (perhaps the early Wittgenstein's) is that possible worlds are complete possible ways for things to be. On this latter state-conception, a world is a complete distribution of properties, relations and magnitudes over the appropriate kinds of entities. Since invoking "all" properties, relations and so on will certainly land us in paradox, these distributions, or possibilities, are going to have to be relativised to some circumscribed array of properties and relations. Call the complete collection of possibilities, given some array of features, the logical space, and call the array of properties and relations which underlie that logical space the framework of the space.

Familiar logical relations and operations correspond to well-understood set-theoretic relations and operations on ranges. The range of the conjunction of two propositions is the intersection of the ranges of the two conjuncts. Entailment corresponds to the subset relation on ranges. The actual world is a single point in logical space, a complete specification of every matter of fact (with respect to the framework of features), and a proposition is true if its range contains the actual world, false otherwise. The whole Truth is a true proposition that is also complete: it entails all true propositions. The range of the Truth is none other than the singleton of the actual world. That singleton is the target, the bullseye, the thing at which the most comprehensive inquiry is aiming.
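These correspondences are easy to make concrete. The following Python sketch is merely illustrative: it assumes a small logical space built from three traits (hot, rainy, windy, the framework Tichý uses in the examples discussed later in this entry), stipulates which world is actual, and uses hypothetical helper names such as range_of and entails.

```python
from itertools import product

# Assumption: a logical space of eight worlds, each a triple (h, r, w).
WORLDS = frozenset(product([True, False], repeat=3))
ACTUAL = (True, True, True)  # stipulated actual world


def range_of(condition):
    """The range of a proposition: the set of worlds satisfying the condition."""
    return frozenset(world for world in WORLDS if condition(*world))


def entails(a, b):
    """Entailment is the subset relation on ranges."""
    return a <= b


def is_true(a):
    """A proposition is true if its range contains the actual world."""
    return ACTUAL in a


hot = range_of(lambda h, r, w: h)
rainy = range_of(lambda h, r, w: r)
hot_and_rainy = range_of(lambda h, r, w: h and r)
the_truth = frozenset({ACTUAL})  # singleton of the actual world

# Conjunction corresponds to intersection of ranges.
print(hot_and_rainy == hot & rainy)
# The Truth entails every true proposition considered here.
print(all(entails(the_truth, p) for p in (hot, rainy, hot_and_rainy)))
```

Identifying propositions with ranges in this way inherits the coarseness noted above: logically equivalent propositions receive the same representation.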

In addition to the set-theoretic structures which underlie the familiar logical relations, the logical space might be structured by similarity or likeness. For example, worlds might be more or less like other worlds. There might be a betweenness relation amongst worlds, or even a fully-fledged distance metric. If that's the case we can start to see how one proposition might be closer to the Truth (the proposition whose range singles out the actual world) than another. Suppose, for example, that worlds are arranged in similarity spheres nested around the actual world, familiar from the Stalnaker-Lewis approach to counterfactuals. Consider Diagram 5:

Diagram 5: Verisimilitude by similarity circles

The bullseye is the actual world and the small sphere which includes it is T, the Truth. The nested spheres represent likeness to the actual world. A world is less like the actual world the larger the first sphere of which it is a member. Propositions A and B are false, C and D are true. A carves out a class of worlds which are rather close to the actual world — all within spheres two to four — whereas B carves out a class rather far from the actual world — all within spheres five to seven. Intuitively A is closer to the bullseye than is B.

The largest sphere which does not overlap at all with a proposition is plausibly a measure of how close the proposition is to being true. Call that the truth factor. A proposition X is closer to being true than Y if the truth factor of X is included in the truth factor of Y. The truth factor of A, for example, is the smallest non-empty sphere, T itself, whereas the truth factor of B is the fourth sphere, of which T is a proper subset.

If a proposition includes the bullseye then of course it is true simpliciter, it has the maximal truth factor (the empty set). So all true propositions are equally close to being true. But truthlikeness is not just a matter of being close to being true. The tautology, D, C and the Truth itself are equally true, but in that order they increase in their closeness to the whole truth.

Taking a leaf out of Popper's book, Hilpinen argued that closeness to the whole truth is in part a matter of degree of informativeness of a proposition. In the case of the true propositions, this correlates roughly with the smallest sphere which totally includes the proposition. The further out the outermost sphere, the less informative the proposition is, because the larger the area of the logical space which it covers. So, in a way which echoes Popper's account, we could take truthlikeness to be a combination of a truth factor (given by the likeness of that world in the range of a proposition that is closest to the actual world) and a content factor (given by the likeness of that world in the range of a proposition that is furthest from the actual world):

A is closer to the truth than B if and only if A does as well as B on both truth factor and content factor, and better on at least one of those.

Applying Hilpinen's definition we capture two more particular judgements, in addition to those already mentioned, that seem intuitively acceptable: that C is closer to the truth than A, and that D is closer than B. (Note, however, that we have here a partial ordering: A and D, for example, are not ranked.) We can derive from this various apparently desirable features of the relation closer to the truth: for example, that the relation is transitive, asymmetric and irreflexive; that the Truth is closer to the Truth than any other theory; that the tautology is at least as far from the Truth as any other truth; that one cannot make a true theory worse by strengthening it by a truth; that a falsehood is not necessarily improved by adding another falsehood, or even by adding another truth.

But there are also some worrying features here. Hilpinen's account entails that no falsehood is closer to the truth than any truth. So, for example, Newton's theory is deemed to be no more truthlike, no closer to the whole truth, than the tautology. That's bad.
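The comparisons just described can be reproduced with a small Python sketch. It is an illustration under assumptions rather than Hilpinen's own formulation: spheres are numbered outward from 1 (the sphere T), a proposition is represented by the set of sphere indices its range reaches into, and the truth factor is read as the innermost such index, which orders propositions the same way as the largest non-overlapping sphere. The extents given for A and B follow the description of Diagram 5; the extents of C and D, and the total of seven spheres, are assumed for the example.

```python
from typing import NamedTuple


class Proposition(NamedTuple):
    name: str
    spheres: frozenset  # index of the first sphere containing each world in the range


def truth_factor(p):
    """Index of the innermost sphere the proposition reaches (1 means true)."""
    return min(p.spheres)


def content_factor(p):
    """Index of the outermost sphere the proposition reaches (lower is more informative)."""
    return max(p.spheres)


def closer(a, b):
    """At least as good as b on both factors, and better on at least one."""
    ta, ca = truth_factor(a), content_factor(a)
    tb, cb = truth_factor(b), content_factor(b)
    return ta <= tb and ca <= cb and (ta < tb or ca < cb)


OUTERMOST = 7  # assumed number of spheres
TRUTH = Proposition("T", frozenset({1}))
TAUTOLOGY = Proposition("tautology", frozenset(range(1, OUTERMOST + 1)))
A = Proposition("A", frozenset({2, 3, 4}))            # false: spheres two to four
B = Proposition("B", frozenset({5, 6, 7}))            # false: spheres five to seven
C = Proposition("C", frozenset({1, 2, 3}))            # true: extent assumed
D = Proposition("D", frozenset({1, 2, 3, 4, 5, 6}))   # true: extent assumed

print(closer(A, B), closer(C, A), closer(D, B))   # judgements from the text
print(closer(A, D), closer(D, A))                 # A and D are not ranked
print(closer(A, TAUTOLOGY))                       # a falsehood never beats a truth
```

On these assumed extents the sketch returns the judgements listed above, including the worrying one: the false but informative A is not ranked above the tautology, because no falsehood can match a truth on the truth factor.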

Characterising Hilpinen's account as a combination of a truth factor and an information factor seems to mask its quite radical departure from Popper's account. The incorporation of similarity spheres signals a fundamental break with the pure content approach, and opens up a range of possible new accounts: what such accounts have in common is that the truthlikeness of a proposition is a non-trivial function of the likeness to the actual world of worlds in the range of the proposition.

There are three main problems for any concrete proposal within the likeness approach. The first concerns an account of likeness between states of affairs - in what does this consist and how can it be analyzed or defined? The second concerns the dependence of the truthlikeness of a proposition on the likeness of worlds in its range to the actual world: what is the correct function? And finally, there is the famous problem of "translation variance" of judgements of likeness and of truthlikeness. This last problem will be taken up in section 5.

3.1 Likeness of worlds in a (ridiculously) simple logical space

One objection to Hilpinen's proposal (like Lewis's proposal for counterfactuals) is that it assumes the similarity relation on worlds as a primitive, there for the taking. At the end of his 1974 paper Tichý not only suggested the use of similarity rankings on worlds, but also provided a ranking in propositional spaces and indicated how to generalize this to more complex cases.

Examples and counterexamples in Tichý 1974 are exceedingly simple, utilizing a propositional framework with three primitives: h (for the state hot), r (for rainy) and w (for windy). This framework generates a small logical space of eight possibilities. The sentences of the associated propositional language are taken to express propositions over this logical space.

Tichý took judgements of truthlikeness like the following to be self-evident: Suppose that in fact it is hot, rainy and windy. Then the proposition that it is cold, dry and still (expressed by the sentence ~h&~r&~w) is further from the truth than the proposition that it is cold, rainy and windy (expressed by the sentence ~h&r&w). And the proposition that it is cold, dry and windy (expressed by the sentence ~h&~r&w) is somewhere between the two. These kinds of judgements are taken to be core intuitions which any adequate account of truthlikeness would have to deliver, and which Popper's theory patently cannot handle. Unlike Popper, Tichý is not trying to find the missing theoretical bridge to epistemic optimism in a fallibilist philosophy of science. Rather, he takes the intuitive concept of truthlikeness to be as much a standard component of the intellectual armory of the folk as is the concept of truth. Doubtless, like the concept of truth, it needs tidying up and trimming down, but he assumes that it is basically sound, and that the job of the philosopher is to explicate it: that is to say, to give a precise, logically perspicuous, consistent account which captures the core intuitions and excludes core counterintuitions. In the grey areas, where our intuitions are not clear, it is a case of “spoils to the victor”: the best account of the core intuitions can legislate where the intuitions are fuzzy or contradictory.

Corresponding to the eight-members of the logical space generated by distributions of truth values through the three basic conditions, there are eight maximal conjunctions (or constituents):

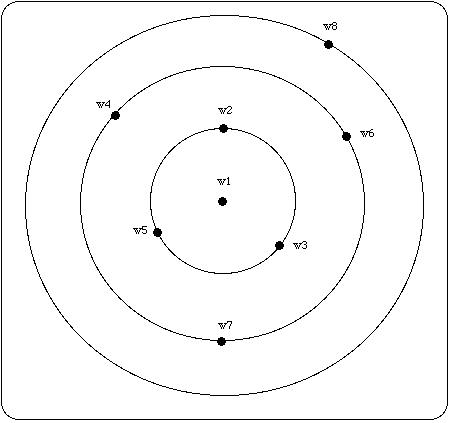

Worlds differ in the distributions of these traits, and a natural, albeit simple, suggestion is to measure the likeness between two worlds by the number of agreements on traits. (This is tantamount to taking distance to be measured by the size of the symmetric difference of generating states. As is well known, this will generate a genuine metric, in particular satisfying the triangular inequality.) If w1 is the actual world this immediately induces a system of nested spheres, but one in which the spheres come with numbers attached:

w1 h&r&w w2 h&r&~w w3 h&~r&w w4 h&~r&~w w5 ~h&r&w w6 ~h&r&~w w7 ~h&~r&w w8 ~h&~r&~w

Diagram 6: Similarity circles for the weather space

Those worlds orbiting on the sphere n are of distance n from the actual world.
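The construction can be made concrete with a small sketch. It is illustrative only: the encoding of worlds as triples of truth values and the names `distance` and `spheres` are assumptions introduced here, not part of Tichý's presentation.

```python
from itertools import product

# A world is a triple of truth values for (hot, rainy, windy).
WORLDS = list(product([True, False], repeat=3))
ACTUAL = (True, True, True)  # w1: hot, rainy and windy


def distance(u, v):
    """Number of basic traits on which the worlds u and v disagree."""
    return sum(a != b for a, b in zip(u, v))


def spheres(actual, worlds=WORLDS):
    """Group the worlds by their distance from the actual world."""
    result = {}
    for w in worlds:
        result.setdefault(distance(actual, w), []).append(w)
    return result


for n, members in sorted(spheres(ACTUAL).items()):
    print(n, len(members))
```

On this encoding there is one world at distance 0, three at distance 1, three at distance 2, and one at distance 3.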

3.2 The likeness of a proposition to the truth

Now that we have numbers for distances between worlds, numerical measures of propositional distance can be defined as some function of distances, from the actual world, of worlds in the range of a proposition. But which measure is the right one? Following Hilpinen, we might take the average, or weighted average, of the closest and furthest worlds from the actual world. But now that we have distances associated with all worlds, why take only the extreme worlds into account? Why shouldn't every world in a proposition count towards its degree of likeness to the actual world? One possibility is to take the straight average (or perhaps weighted average) of all distances of worlds from the actual world. This is tantamount to measuring the distance from actuality of the “center of gravity” of the proposition. Another is to take the sum of all the distances of worlds in the range of the proposition. As it happens, these different proposals have been evaluated rather haphazardly, by comparing their consequences with particular intuitive judgements.

For example, the straight averaging proposal delivers all of the particular judgements we used above to motivate Hilpinen's proposal, but in conjunction with the simple metric on worlds it delivers the following ordering of propositions:

Table 1: Distances of propositions from truth using straight average

| Truth Value | Proposition | Distance |
|---|---|---|
| true | h&r&w | 0 |
| true | h&r | 0.5 |
| false | h&r&~w | 1.0 |
| true | h | 1.0 |
| false | h&~r | 1.5 |
| false | ~h | 2.0 |
| false | ~h&~r&w | 2.0 |
| false | ~h&~r | 2.5 |
| false | ~h&~r&~w | 3.0 |

So far these results look quite pleasing. Propositions are closer to the truth the more they get the basic weather traits right, further away the more mistakes they make. A false proposition may be made either worse or better by strengthening (~w is the same distance from the Truth as ~h; h&r&~w is better than ~w while ~h&~r&~w is worse). A false proposition (like h&r&~w) can be closer to the truth than some true propositions (like the tautology, at average distance 1.5).

Compare this to the results we get by summing distances:

Table 2: Distances of propositions from truth using straight sum

| Truth Value | Proposition | Distance |
|---|---|---|
| true | h&r&w | 0 |
| true | h&r | 1 |
| false | h&r&~w | 1 |
| true | h | 4 |
| false | h&~r | 3 |
| false | ~h | 8 |
| false | ~h&~r&w | 2 |
| false | ~h&~r | 5 |
| false | ~h&~r&~w | 3 |

The results of summing distances look rather strange by comparison with the results of averaging distances. These judgments may be sufficient to show that averaging is superior to summing, but they are clearly not sufficient to show that averaging is the right procedure. What we need are some straightforward and compelling general desiderata which jointly yield a single correct function. In the absence of such a proof, we can only resort to case by case comparisons. And the average function has not found universal favor on the score of particular judgments either. Notably, there are pairs of true propositions such that the average measure deems the stronger of the two to be the further from the truth. The tautology, for example, is not the true proposition furthest from the truth. Averaging thus violates the Popperian principle of the value of content for truths (Popper 1976).

Table 3: Averaging violates the value of content for truths

| Truth Value | Proposition | Distance |
|---|---|---|
| true | h ∨ ~r ∨ w | 1.4 |
| true | h ∨ ~r | 1.5 |
| true | h ∨ ~h | 1.5 |
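Both proposals are easy to compute for the weather space. The following is a minimal sketch, assuming that sentences are represented as Python predicates on (h, r, w) and that world distance is the simple count of disagreements; the names `worlds_of`, `average_distance` and `sum_distance` are introduced here purely for illustration.

```python
from itertools import product

WORLDS = list(product([True, False], repeat=3))  # (hot, rainy, windy)
ACTUAL = (True, True, True)


def distance(u, v):
    """Number of basic traits on which two worlds disagree."""
    return sum(a != b for a, b in zip(u, v))


def worlds_of(sentence):
    """The range of a sentence: the worlds at which it is true."""
    return [w for w in WORLDS if sentence(*w)]


def average_distance(sentence):
    """Straight average of distances (undefined for contradictions)."""
    ws = worlds_of(sentence)
    return sum(distance(ACTUAL, w) for w in ws) / len(ws)


def sum_distance(sentence):
    """Straight sum of distances of the worlds in the range."""
    return sum(distance(ACTUAL, w) for w in worlds_of(sentence))


examples = {
    "h&r": lambda h, r, w: h and r,
    "h v ~r v w": lambda h, r, w: h or not r or w,
    "h v ~r": lambda h, r, w: h or not r,
    "h v ~h": lambda h, r, w: True,
}
for label, sentence in examples.items():
    print(label, round(average_distance(sentence), 2), sum_distance(sentence))
```

Under these assumptions the average for h v ~r v w is 10/7 (about 1.4), while the logically stronger truth h v ~r averages 1.5, the same as the tautology.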

In deciding how to proceed here we confront a methodological problem. The methodology exemplified by Tichý is very much bottom-up. For the purposes of deciding between rival accounts it takes the intuitive data very seriously. Popper (along with Popperians like Miller) takes a far more top-down approach. They are deeply suspicious of folk intuitions, and sometimes appear to be in the business of constructing a new concept rather than explicating an existing one. They place enormous weight on certain plausible general principles, largely those that fit in with other principles of their overall theory of science: for example, the principle that strength is a virtue and that the stronger of two true theories (and maybe even of two false theories) is the closer to the truth. A third approach, one which lies between these two extremes, is that of reflective equilibrium. This recognizes the claims of both intuitive judgements on low-level cases, and plausible high-level principles, and enjoins us to bring principle and judgement into equilibrium, possibly by tinkering with both. Neither intuitive low-level judgements nor plausible high-level principles are given advance priority. The protagonist in the truthlikeness debate who has argued most consistently for this approach is Niiniluoto.

How does this impact on the current dispute? Consider a different space of possibilities, generated by a single magnitude like the number of the planets (N). For the sake of the argument let's forget about the recent demotion of Pluto to less than full planetary status, and agree that N is in fact 9 and that the further n is from 9, the further the proposition that N=n is from the Truth. Consider three sets of propositions. In the left-hand column we have a sequence of false propositions which, intuitively, decrease in truthlikeness while increasing in strength. In the middle column we have a sequence of corresponding true propositions, in each case the strongest true consequence of its false counterpart on the left (Popper's “truth content”). Again members of this sequence steadily increase in strength. Finally on the right we have another column of falsehoods. These are also steadily increasing in strength, and like the left-hand falsehoods, seem also to be decreasing in truthlikeness.

Table 4

| Falsehood (1) | Strongest True Consequence | Falsehood (2) |
|---|---|---|
| 11 ≤ N ≤ 20 | N=9 or 11 ≤ N ≤ 20 | N=10 or 11 ≤ N ≤ 20 |
| 12 ≤ N ≤ 20 | N=9 or 12 ≤ N ≤ 20 | N=10 or 12 ≤ N ≤ 20 |
| …… | …… | …… |
| 19 ≤ N ≤ 20 | N=9 or 19 ≤ N ≤ 20 | N=10 or 19 ≤ N ≤ 20 |
| N = 20 | N=9 or N = 20 | N=10 or N = 20 |

Judgements about the closeness of the true propositions to the truth may be less clear than are intuitions about their left-hand counterparts. However, it would seem highly incongruous to judge the truths in table 4 to be steadily increasing in truthlikeness, while the falsehoods on the right, minimally different in content, steadily decrease in truthlikeness. So both the bottom-up approach and reflective equilibrium suggest that all three are sequences of steadily increasing strength combined with steadily decreasing truthlikeness. And if that were right, it might be enough to overturn Popper's claim that amongst true theories strength and truthlikeness covary. This removes an objection to averaging distances, but does not settle the issue in its favor, for there may still be other more plausible counterexamples to averaging that we have not considered.

3.3 Generalizing likeness to more complex spaces

Simple propositional examples are all very nice for the purposes of illustration, but what we want is some indication that this simplicity is not crucial to the likeness approach. Can essentially the same idea be extended to arbitrarily complex frameworks? One fruitful way of generalizing the simple idea to complex frameworks involves cutting the associated spaces down into finite chunks. This can be done in various ways, but one promising idea (Tichý 1974, Niiniluoto 1976) is to make use of a certain kind of normal form: Hintikka's distributive normal forms (Hintikka 1963). Hintikka defined what he called constituents, which correspond to propositional maximal conjunctions and, like maximal conjunctions, are jointly exhaustive and mutually exclusive. Constituents lay out, in a very perspicuous manner, all the different ways individuals can be related (relative, of course, to some framework of attributes). Every sentence in a first-order language comes with a certain depth: the number of embedded quantifiers required to formulate it. So, for example, (1) is a depth-1 sentence; (2) is depth-2; and (3) is depth-3.

(1) Everyone loves himself.

(2) Everyone loves another.

(3) Everyone who loves another loves the other's lovers.

We could call a proposition depth-d if the shallowest depth at which it can be expressed is d. What Hintikka showed is that every depth-d proposition can be expressed by a disjunction of depth-d constituents. Constituents can be represented as finite tree-structures, the nodes of which are like straightforward conjunctions of atomic states. Consequently, if we can measure distance between such trees we will be well down the path of measuring the truthlikeness of depth-d propositions: it will be some function of the distance of constituents in its normal form from the true depth-d constituent.
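The notion of depth can be illustrated with a short sketch that computes the quantifier nesting of a formula. The small formula classes below, and the particular first-order renderings of sentences (1) to (3), are assumptions made here for illustration. Note that the function gives the depth of a given sentence, not the shallowest depth at which the proposition it expresses could be formulated.

```python
from dataclasses import dataclass
from typing import Tuple, Union


@dataclass(frozen=True)
class Atom:
    name: str
    args: Tuple[str, ...]


@dataclass(frozen=True)
class Not:
    body: "Formula"


@dataclass(frozen=True)
class BinOp:
    op: str  # "and", "or" or "implies"
    left: "Formula"
    right: "Formula"


@dataclass(frozen=True)
class Quant:
    kind: str  # "forall" or "exists"
    var: str
    body: "Formula"


Formula = Union[Atom, Not, BinOp, Quant]


def depth(f):
    """Maximum number of quantifiers nested inside one another."""
    if isinstance(f, Atom):
        return 0
    if isinstance(f, Not):
        return depth(f.body)
    if isinstance(f, BinOp):
        return max(depth(f.left), depth(f.right))
    if isinstance(f, Quant):
        return 1 + depth(f.body)
    raise TypeError(f"not a formula: {f!r}")


def loves(x, y):
    return Atom("Loves", (x, y))


def distinct(x, y):
    return Not(Atom("Equals", (x, y)))


# (1) forall x Loves(x, x)
s1 = Quant("forall", "x", loves("x", "x"))
# (2) forall x exists y (x != y and Loves(x, y))
s2 = Quant("forall", "x", Quant("exists", "y",
           BinOp("and", distinct("x", "y"), loves("x", "y"))))
# (3) forall x forall y ((x != y and Loves(x, y)) ->
#                        forall z (Loves(z, y) -> Loves(x, z)))
s3 = Quant("forall", "x", Quant("forall", "y", BinOp(
    "implies",
    BinOp("and", distinct("x", "y"), loves("x", "y")),
    Quant("forall", "z", BinOp("implies", loves("z", "y"), loves("x", "z"))))))

print(depth(s1), depth(s2), depth(s3))
```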

This program has proved flexible and fruitful, delivering a wide range of intuitively appealing results in simple first-order cases. Further, the general idea can be extended in a number of different directions: to higher-order languages and to spaces based on functions rather than properties.

4. Are There Hybrid Approaches?

Hilpinen's proposal is typically located within the likeness approach. Interestingly, Hilpinen himself thought of his proposal as a refined and improved articulation of Popper's content approach. Popper's truth factor Hilpinen identified with that world, in the range of a proposition, closest to the actual world. Popper's content or information factor he identified with that world, in the range of a proposition, furthest from the actual world. An improvement in truthlikeness involves an improvement in either the truth factor or the information factor. His proposal clearly departs from Popper's inasmuch as it incorporates likeness into both of the determining factors, but Hilpinen was also attempting to capture, in some way or other, Popper's penchant for content as well as truth. And his account achieves a good deal of that. In particular his proposal delivers a weak version of the value of content for truths: namely, that of two truths the logically stronger cannot be further from the truth than the logically weaker. It fails, however, to deliver the stronger Popperian content principle for truths: that the logically stronger of two truths is closer to the truth.

At this point it may be worth trying to characterize content and likeness approaches more precisely. Zwart (2001) does just that, and Zwart and Franssen (2007) use a formal characterization of content and likeness approaches to determine whether the best of both approaches might be combined in some kind of happy compromise. That is to say, can any account satisfy both constraints, or is there some kind of radical incompatibility? Their characterization of the content approach is essentially that it encompass the Simple Truth Content account: viz. that A is at least as close to the truth as B if A entails all of B's truth content, and A is closer to the truth than B just in case A is at least as close as B, and B is not at least as close as A. Their characterization of the likeness approach is basically that it encompass Hilpinen's proposal. They then go on to show that Arrow's famous theorem in social choice theory can be applied to obtain a surprising general result about truthlikeness orderings: that there is a precise sense in which there can be no compromise between the content and likeness approaches, that any apparent compromise effectively capitulates to one paradigm or the other. (Obviously, Hilpinen's apparent compromise capitulates to the likeness approach, given the characterization.)

This theorem represents an interesting new development in the truthlikeness debate. As already noted, much of the debate has been conducted on the battlefield of intuition, with protagonists from different camps firing off cases which appear to refute their opponents' definitions while confirming their own. The Zwart-Franssen-Arrow theorem is not only an interesting result in itself; it is also a welcome innovation, since most of the theorizing has lacked this kind of theoretical generality.

One problem with the Zwart-Franssen-Arrow theorem lies in their characterization of the two approaches. If the Simple Truth Content account is stipulated to be a necessary condition for any content account then while the symmetric difference accounts of Miller and Kuipers are ruled in, Popper's original account is ruled out as an articulation of the content approach. Further, if Hilpinen's condition is stipulated to be a necessary condition for any likeness account then Tichý's averaging account is ruled out. So the characterizations seem to be too narrow: they rule out what are perhaps the central paradigms of the two approaches.

A more inclusive, and perhaps more accurate, account of the content approach would count in any proposal that delivers the value of content for truths: that, at least, was Popper's litmus test for acceptability and what motivated his original proposal. A more inclusive account of the likeness approach would count in any proposal that makes truthlikeness depend, non-trivially, on a measure or ordering of likeness on worlds. So on this broader characterization, Popper's account would be a content account but not a likeness account; Tichý's account would be a likeness account and not a content account (it violates the value of content for truths); and Hilpinen's account would almost squeak in as both a content account and a likeness account. (Almost because it does not quite deliver the full value of content for truths: it entails that a stronger truth cannot be further from the truth, but not that it must be closer.) Hilpinen's account is thus (almost) a hybrid. Are there others?

As we have seen, one shortcoming which Hilpinen's proposal shares with Popper's original proposal is that no falsehood is deemed closer to the truth than any truth. In the case of Hilpinen's proposal, this defect can be remedied by assuming quantitative distances between worlds, and letting A's distance from the truth be some weighted average of the distance of the closest world in A from the actual world, and the distance of the furthest world in A from the actual world. This quantitative version (call it min-max-average) of Hilpinen's account renders all propositions comparable for truthlikeness, and some falsehoods it deems more truthlike than some truths. But while this version of Hilpinen's proposal falls within the scope of likeness approaches as defined, it is not totally satisfactory from either content or likeness perspectives. Let A be a true proposition with a number of worlds tightly clustered around the actual world a. Let Z be a false proposition with a number of worlds tightly clustered around a world z maximally distant from actuality. A is highly truthlike, and Z highly untruthlike and min-max-average agrees. But now let Z+ be Z plus a, and let A+ be A plus z. Considerations of both continuity and likeness suggest that A+ should be much more truthlike than Z+, but they are deemed equally truthlike by min-max-average. Further, min-max-average deems both A+ and Z+ equal in truthlikeness to the tautology, violating the principle of the value of content for truths.
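The difficulty can be seen in a toy model. The sketch below assumes, purely for illustration, a space of eleven worlds arranged on a line with the actual world at one end, an equal weighting of the two factors, and particular choices for A and Z; none of these details comes from Hilpinen.

```python
# Hypothetical one-dimensional space: worlds 0..10, with world 0 actual.
WORLDS = range(11)
ACTUAL = 0


def dist(w):
    """Distance of a world from the actual world."""
    return abs(w - ACTUAL)


def min_max_average(prop, weight=0.5):
    """Weighted average of the closest and furthest worlds in prop."""
    ds = [dist(w) for w in prop]
    return weight * min(ds) + (1 - weight) * max(ds)


A = {0, 1, 2}        # true, clustered around the actual world
Z = {8, 9, 10}       # false, clustered around the most distant world z = 10
A_plus = A | {10}    # A plus z
Z_plus = Z | {0}     # Z plus a
TAUTOLOGY = set(WORLDS)

for name, prop in [("A", A), ("Z", Z), ("A+", A_plus),
                   ("Z+", Z_plus), ("tautology", TAUTOLOGY)]:
    print(name, min_max_average(prop))
```

With these choices A comes out at 1.0 and Z at 9.0, while A+, Z+ and the tautology all receive the same value, 5.0.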

Part of the problem, from the content perspective, is that the furthest world in a proposition is, as noted above, a very crude estimator of overall content. Niiniluoto suggests a different content measure: the (normalized) sum of the distances of worlds in A from the actual world. As we have seen, sum is not itself a good measure of distance of a proposition from the truth. However, formally sum is a probability measure, and hence a measure of a kind of logical weakness. But sum is also a content-likeness hybrid, rendering a proposition more contentful the closer its worlds are to actuality. Being genuinely sensitive to size, sum is clearly a better measure of logical weakness than the world furthest from actuality. Hence Niiniluoto proposes a weighted average of the closest world (the truth factor) and sum (the information factor). This min-sum-average ranks the tautology, Z+ and A+ in order of increasing truthlikeness. min-sum-average delivers the value of content for truths: if A is true and is logically stronger than B then both have the same truth factor (0), but since the range of B contains more worlds, its sum will be greater, making it further from the truth. So min-sum-average falls within the content approach on my characterization. On the other hand, min-sum-average seems to fall within the likeness camp, since it deems truthlikeness to be a non-trivial function of the likenesses of worlds, in the range of a proposition, to the actual world.

According to min-sum-average: all propositions are commensurable for truthlikeness; the full principle of the value of content for truths holds provided the content factor gets non-zero weight; the Truth has greater truthlikeness than any other proposition provided all non-actual worlds are some distance from the actual world; some false propositions are closer to the truth than others; the principle of the value of content for falsehoods is appropriately repudiated, provided the truth factor gets some weight; if A is false, the truth content of A is more truthlike than A itself, again provided the truth factor gets some weight. min-sum-average thus seems like a consistent and somewhat appealing compromise between content and likeness approaches.
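Several of these claims can be checked in a toy model. The following sketch again assumes a hypothetical line of eleven worlds with the actual world at one end and equal weights for the two factors. Normalizing the truth factor by the greatest distance in the space is a choice made here to put both factors on a common scale; it is not taken from Niiniluoto's text.

```python
# Hypothetical one-dimensional space: worlds 0..10, with world 0 actual.
WORLDS = range(11)
ACTUAL = 0


def dist(w):
    return abs(w - ACTUAL)


MAX_DIST = max(dist(w) for w in WORLDS)
TOTAL = sum(dist(w) for w in WORLDS)


def normalized_sum(prop):
    """Information factor: share of the total distance carried by prop."""
    return sum(dist(w) for w in prop) / TOTAL


def min_sum_average(prop, truth_weight=0.5):
    """Weighted average of the closest world and the normalized sum."""
    truth_factor = min(dist(w) for w in prop) / MAX_DIST
    return truth_weight * truth_factor + (1 - truth_weight) * normalized_sum(prop)


TRUTH = {0}
A = {0, 1, 2}
Z = {8, 9, 10}
A_plus = A | {10}
Z_plus = Z | {0}     # also the truth content of the falsehood Z
TAUTOLOGY = set(WORLDS)

for name, prop in [("Truth", TRUTH), ("A", A), ("A+", A_plus),
                   ("Z+", Z_plus), ("tautology", TAUTOLOGY), ("Z", Z)]:
    print(name, round(min_sum_average(prop), 3))
```

In this model the Truth is at distance 0, the true propositions A, A+ and the tautology get further from the truth as they get weaker, Z+ falls between A+ and the tautology, and the falsehood Z is further from the truth than its truth content Z+.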

This compatibility result may be too swift for the following reason. We have laid down a rather stringent condition on content-based measures (namely, the value of content for truths) but we have stipulated a really rather lax condition for likeness-based measures (namely, that the likeness of a proposition to the truth be some function or other of the likeness of the worlds in the proposition to the actual world). But this latter condition allows any old non-trivial function of likeness to count. For example, summing the distances of worlds from the actual world is a non-trivial function of likeness, but it hardly satisfies basic intuitive constraints on the likeness of a proposition to the truth. So it is quite likely that there are more stringent but plausible constraints on the likeness approach, and those conditions may block the compatibility of likeness and content. So it is still an interesting open question whether there are simple and compelling principles which can narrow down, or even single out, the correct function underlying the notion of the overall likeness of propositions to the truth.

5. Translation Invariance

The single most influential argument against any proposal that utilizes likeness is the charge that it is not translation invariant (Miller 1974a, 1975a, 1976, and most recently defended, vigorously as usual, in his 2006). Early formulations of the approach (Tichý 1974, 1976) proceeded in terms of syntactic surrogates for their semantic correlates: sentences for propositions, predicates for properties, distributive normal forms for partitions of the logical space, and the like. The question naturally arises, then, whether we obtain the same measures if all the syntactic items are translated into an essentially equivalent language, one capable of expressing the same propositions and properties with a different set of primitive predicates.

Take our simple weather-framework above. This traffics in three primitives: hot, rainy, and windy. Suppose, however, that we define the following two new weather conditions:

minnesotan =df hot if and only if rainy

arizonan =df hot if and only if windy

Now it appears as though we can describe the same sets of weather states in an h-m-a-ese based on these conditions.

| Theory | h-r-w-ese | h-m-a-ese |
|---|---|---|
| T | h&r&w | h&m&a |
| A | ~h&r&w | ~h&~m&~a |
| B | ~h&~r&w | ~h&m&~a |
| C | ~h&~r&~w | ~h&m&a |

If T is the truth about the weather then theory A, in h-r-w-ese, seems to make just one error concerning the original weather states, while B makes two and C makes three. However, if we express these three theories in h-m-a-ese, then this is reversed: A appears to make three errors, B still makes two, and C makes only one. But that means the account makes truthlikeness, unlike truth, radically language-relative.
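The reversal can be reproduced in a few lines. This is a sketch under the definitions just given; the function names `to_hma` and `errors` are introduced here for illustration only.

```python
from itertools import product


def to_hma(world):
    """Re-describe an (h, r, w) valuation as an (h, m, a) valuation."""
    h, r, w = world
    return (h, h == r, h == w)  # m: hot iff rainy; a: hot iff windy


def errors(theory, truth):
    """Number of primitives on which a theory disagrees with the truth."""
    return sum(x != y for x, y in zip(theory, truth))


T = (True, True, True)       # h&r&w
A = (False, True, True)      # ~h&r&w
B = (False, False, True)     # ~h&~r&w
C = (False, False, False)    # ~h&~r&~w

for name, theory in [("A", A), ("B", B), ("C", C)]:
    print(name, errors(theory, T), errors(to_hma(theory), to_hma(T)))

# The re-description is one-to-one on the eight weather states.
ALL = list(product([True, False], repeat=3))
print(len({to_hma(w) for w in ALL}) == len(ALL))
```

The error counts for A, B and C are 1, 2 and 3 when computed over h, r and w, and 3, 2 and 1 when computed over h, m and a.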

There are two live responses to this criticism. But before detailing them, note a dead one: the similarity theorist cannot object that h-m-a is somehow logically inferior to h-r-w, on the grounds that the primitives of the former are essentially "biconditional" whereas the primitives of the latter are not. This is because there is a perfect symmetry between the two sets of primitives. Starting within h-m-a-ese we can arrive at the original primitives by exactly analogous definitions:

rainy =df hot if and only if minnesotan

windy =df hot if and only if arizonan

Thus if we are going to object to h-m-a-ese it will have to be on other than purely logical grounds.

Firstly, then, the similarity theorist could maintain that certain predicates (presumably “hot”, “rainy” and “windy”) are primitive in some absolute, realist, sense. Such predicates “carve reality at the joints” whereas others (like “minnesotan” and “arizonan”) are gerrymandered affairs. With the demise of predicate nominalism as a viable account of properties and relations this approach is not as unattractive as it might have seemed in the middle of the last century. Realism about universals is certainly on the rise. While this version of realism presupposes a sparse theory of properties (that is to say, it is not the case that to every definable predicate there corresponds a genuine universal), such theories have been championed both by those doing traditional a priori metaphysics of properties (e.g. Bealer 1982) and by those who favor a more empiricist, scientifically informed approach (e.g. Armstrong 1978, Tooley 1977). According to Armstrong, for example, which predicates pick out genuine universals is a matter for developed science. The primitive predicates of our best fundamental physical theory will give us our best guess at what the genuine universals in nature are. They might be predicates like electron or mass, or more likely something even more abstruse and remote from the phenomena, like the primitives of String Theory.

One apparently cogent objection to this realist solution is that it would render the task of empirically estimating degree of truthlikeness completely hopeless. If we know a priori which primitives should be used in the computation of distances between theories it will be difficult to estimate truthlikeness, but not impossible. For example, we might compute the distance of a theory from the various possibilities for the truth, and then make a weighted average, weighting each possible true theory by its probability on the evidence. That would be the credence-mean estimate of truthlikeness. However, if we don't even know which features should count towards the computation of similarities and distances then it appears that we cannot get off first base.

To see this consider our simple weather frameworks. Suppose that all I learn is that it is rainy. Do I thereby have some grounds for thinking A is closer to the truth than B? I would if I also knew that h-r-w-ese is the language for calculating distances. For then, whatever the truth is, A makes one fewer mistake than B makes. A gets it right on the rain factor, while B doesn't, and they must score the same on the other two factors whatever the truth of the matter. But if we switch to h-m-a-ese then A's epistemic superiority is no longer guaranteed. If, for example, T is the truth then B will be closer to the truth than A. That's because in the h-m-a framework raininess as such doesn't count in favor or against the truthlikeness of a proposition.
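The point can be illustrated by computing a credence-weighted estimate in each framework. The sketch below assumes, for illustration only, that learning that it is rainy leaves a uniform credence over the four rainy weather states; the names `expected_distance` and `to_hma` are hypothetical.

```python
from itertools import product

WORLDS = list(product([True, False], repeat=3))  # (hot, rainy, windy)


def to_hma(world):
    """Re-describe an (h, r, w) valuation as an (h, m, a) valuation."""
    h, r, w = world
    return (h, h == r, h == w)


def errors(u, v):
    return sum(x != y for x, y in zip(u, v))


def expected_distance(theory, credence, translate=lambda world: world):
    """Credence-weighted mean distance of a theory from the candidate truths."""
    return sum(p * errors(translate(theory), translate(truth))
               for truth, p in credence.items())


# Evidence: it is rainy. Assumed uniform credence over the rainy worlds.
rainy = [w for w in WORLDS if w[1]]
credence = {w: 1 / len(rainy) for w in rainy}

A = (False, True, True)    # ~h&r&w
B = (False, False, True)   # ~h&~r&w

print(expected_distance(A, credence), expected_distance(B, credence))
print(expected_distance(A, credence, to_hma),
      expected_distance(B, credence, to_hma))
```

Measured in h-r-w terms, A's expected distance is 1.0 against 2.0 for B. Measured in h-m-a terms under the same credences, both come out at 1.5, so the evidence no longer favors A.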

However, this objection fails if there can be empirical indicators not just of which atomic states obtain, but also which are the genuine ones, the ones that really do carve reality at the joints. Obviously the framework would have to contain more than just h, m and a. It would have to contain resources for describing the states that indicate whether these were genuine universals. Maybe whether they enter into genuine causal relations will be crucial, for example. Once we can distribute probabilities over the candidates for the real universals, then we can use those probabilities to weight the various possible distances which a hypothesis might be from any given theory.

The second live response is both more modest and more radical. It is more modest in that it is not hostage to the objective priority of a particular conceptual scheme, whether that priority is accessed a priori or a posteriori. It is more radical in that it denies a premise of the invariance argument that at first blush is apparently obvious. It denies the equivalence of the two conceptual schemes. It denies that h&r&w, for example, expresses the very same proposition as h&m&a expresses. If we deny translatability then we can grant the invariance principle, and grant the judgements of distance in both cases, but remain untroubled. There is no contradiction. (Tichý 1978, Oddie 1986a).

At first blush this seems somewhat desperate. Haven't the respective conditions been defined in such a way that they are simple equivalents by fiat? That would, of course, be the case if m and a had been introduced as defined terms into h-r-w. But if that were the intention then the similarity theorist could retort that the calculation of distances should proceed in terms of the primitives, not the introduced terms. However that is not the only way the argument can be read. We are asked to contemplate two partially overlapping sequences of conditions, and two spaces of possibilities generated by those two sequences. We can thus think of each possibility as a point in a simple three dimensional space. These points are ordered triples of 0s and 1s, the nth entry being 1 if the nth condition is satisfied and 0 if it isn't. Thinking of possibilities in this way, we already have rudimentary geometrical features generated simply by the selection of generating conditions. Points are adjacent if they differ on only one dimension. A path is a sequence of adjacent points. A point q is between two points p and r if q lies on a shortest path from p to r. A region of possibility space is convex if it is closed under the betweenness relation: anything between two points in the region is also in the region.
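These geometrical notions are simple to state in code. The sketch below is illustrative only; it relies on the fact that, in a space of this kind, q lies on a shortest path from p to r exactly when the distances from p to q and from q to r add up to the distance from p to r.

```python
from itertools import product

# Points are triples of 0s and 1s: 1 if the nth condition is satisfied.
POINTS = list(product([0, 1], repeat=3))


def hamming(p, q):
    """Number of dimensions on which two points differ."""
    return sum(a != b for a, b in zip(p, q))


def adjacent(p, q):
    return hamming(p, q) == 1


def between(q, p, r):
    """True if q lies on some shortest path from p to r (endpoints included)."""
    return hamming(p, q) + hamming(q, r) == hamming(p, r)


def convex(region):
    """True if everything between two points of the region is in the region."""
    region = set(region)
    return all(q in region
               for p in region for r in region
               for q in POINTS if between(q, p, r))


first = [p for p in POINTS if p[0] == 1]                   # first condition
both = [p for p in POINTS if p[0] == 1 and p[1] == 1]      # a conjunction
either = [p for p in POINTS if p[0] == 1 or p[1] == 1]     # a disjunction

print(convex(first), convex(both), convex(either))
```

On these definitions the regions for a generating condition and for a conjunction of two of them are convex, while the region for their disjunction is not: (0,0,0) lies between (1,0,0) and (0,1,0) but falls outside it.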

Evidently we have two spaces of possibilities, S1 and S2, and the question now arises whether a sentence interpreted over one of these spaces expresses the very same thing as any sentence interpreted over the other. Does h&r&w express the same thing as h&m&a? h&r&w expresses (the singleton of) u1 (which is the entity <1,1,1> in S1 or <1,1,1>S1) and h&m&a expresses v1 (the entity <1,1,1>S2). ~h&r&w expresses u2 (<0,1,1>S1), a point adjacent to that expressed by h&r&w. However ~h&~m&~a expresses v8 (<0,0,0>S2), which is not adjacent to v1 (<1,1,1>S2). So now we can construct a simple proof that the two sentences do not express the same thing.

u1 is adjacent to u2.

v1 is not adjacent to v8.

Therefore, either u1 is not identical to v1 or u2 is not identical to v8.

Therefore, either h&r&w and h&m&a do not express the same thing, or ~h&r&w and ~h&~m&~a do not express the same thing.

Thus at least one of the two required intertranslatability claims fails, and h-r-w-ese is not intertranslatable with h-m-a-ese. The important point here is that a space of possibilities already comes with a structure and the points in such a space cannot be individuated without reference to the rest of the space and its structure. The identity of a possibility is bound up with its geometrical relations to other possibilities. Different relations, different possibilities.
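The two premises of the proof can be checked mechanically. In this sketch, which is only illustrative, the hypothetical helper `counterpart` sends the S1 point picked out by an h-r-w sentence to the S2 point picked out by its purported h-m-a translation; the check shows that this correspondence does not preserve adjacency.

```python
from itertools import combinations, product


def adjacent(p, q):
    """Points are adjacent if they differ on exactly one dimension."""
    return sum(a != b for a, b in zip(p, q)) == 1


def counterpart(point):
    """S2 coordinates (h, m, a) matched to S1 coordinates (h, r, w)."""
    h, r, w = point
    return (h, int(h == r), int(h == w))


u1 = (1, 1, 1)          # expressed by h&r&w in S1
u2 = (0, 1, 1)          # expressed by ~h&r&w in S1
v1 = counterpart(u1)    # expressed by h&m&a in S2
v8 = counterpart(u2)    # expressed by ~h&~m&~a in S2

print(adjacent(u1, u2), adjacent(v1, v8))

# How many of the adjacent pairs in S1 remain adjacent under the correspondence?
points = list(product([0, 1], repeat=3))
pairs = [(p, q) for p, q in combinations(points, 2) if adjacent(p, q)]
preserved = [(p, q) for p, q in pairs
             if adjacent(counterpart(p), counterpart(q))]
print(len(pairs), len(preserved))
```

Of the twelve adjacent pairs in S1, only the eight that differ on r or on w stay adjacent; the four pairs that differ on h are sent to points that differ on all three dimensions.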

This idea meshes well with recent work on conceptual spaces in Gärdenfors (2000). Gärdenfors is concerned both with the semantics and the nature of genuine properties, and his bold and simple hypothesis is that properties carve out convex regions of an n-dimensional quality space. He supports this hypothesis with an impressive array of logical, linguistic and empirical data. (Looking back at our little spaces above it is not hard to see that the convex regions are those that correspond to the generating (or atomic) conditions and conjunctions of those. See Oddie 1987a and Burger and Heidema 1994.) While Gärdenfors is dealing with properties it is not hard to see that similar considerations apply to propositions, since propositions can be regarded as 0-ary properties.

Ultimately, however, this response may seem less than entirely satisfactory by itself. If the choice of a conceptual space is merely a matter of taste then we may be forced to embrace a radical kind of incommensurability. Those who talk h-r-w-ese and conjecture ~h&r&w on the basis of the available evidence will be close to the truth. Those who talk h-m-a-ese while exposed to the “same” circumstances would presumably conjecture ~h&~m&~a on the basis of the “same” evidence (or the corresponding evidence that they gather). If in fact h&r&w is the truth (in h-r-w-ese) then the h-r-w weather researchers will be close to the truth. But the h-m-a researchers will be very far from the truth. This may not be an explicit contradiction, but it should be worrying. Realists started out with the ambition of defending a concept of truthlikeness which would enable them to embrace both fallibilism and optimism. But what they seem to have ended up with here is something that suggests a rather unpalatable incommensurability of competing conceptual frameworks. So it seems that the realist will probably need to affirm that some conceptual schemes really are better than others. Some do “carve reality at the joints” and others don't. But is that something the realist should be reluctant to affirm?

Bibliography

- Aronson, J., Harre, R., and Way, E.C., 1995, Realism Rescued: How Scientific Progress is Possible, Chicago: Open Court.

- Aronson, J., 1997, "Truth, Verisimilitude, and Natural Kinds", Philosophical Papers, 26: 71-104.

- Armstrong, D. M., 1983, What is a Law of Nature?, Cambridge: Cambridge University Press.

- Barnes, E., 1990, "The Language Dependence of Accuracy", Synthese, 84: 54-95.

- –––, 1991, "Beyond Verisimilitude: A Linguistically Invariant Basis for Scientific Progress", Synthese, 88: 309-339.

- –––, 1995, "Truthlikeness, Translation, and Approximate Causal Explanation", Philosophy of Science, 62/2: 215-226.

- Bealer, G., 1982, Quality and Concept, Oxford: Clarendon.

- Brink, C., & Heidema, J., 1987, "A verisimilar ordering of theories phrased in a propositional language", The British Journal for the Philosophy of Science, 38: 533-549.

- Britton, T., 2004, "The problem of verisimilitude and counting partially identical properties", Synthese, 141: 77–95.

- Britz, K., and Brink, C., 1995, "Computing verisimilitude", Notre Dame Journal of Formal Logic, 36/2: 30-43.

- Burger, I. C., and Heidema, J., 1994, "Comparing theories by their positive and negative contents", British Journal for the Philosophy of Science, 45: 605–630.

- Burger, I. C., and Heidema, J., 2005, "For better, for worse: comparative orderings on states and theories" in R. Festa, A. Aliseda, and J. Peijnenburg (eds.), Confirmation, Empirical Progress, and Truth Approximation, Amsterdam, New York: Rodopi, pp. 459–488.

- Cohen, L.J., 1980, "What has science to do with truth?", Synthese, 45: 489-510.

- –––, 1987, "Verisimilitude and legisimilitude", in Kuipers 1987a, pp. 129-45.

- Gerla, G., 1992, "Distances, diameters and verisimilitude of theories", Archive for mathematical Logic, 31/6: 407-14.

- Gärdenfors, P., 2000, Conceptual Spaces, Cambridge: MIT Press.

- Harris, J., 1974, "Popper's definition of ‘Verisimilitude’", The British Journal for the Philosophy of Science, 25: 160-166.

- Hilpinen, R., 1976, "Approximate truth and truthlikeness" in Przelecki, et al. (eds.), Formal Methods in the Methodology of the Empirical Sciences, Dordrecht: Reidel, 19-42

- Hintikka, J., 1963, "Distributive normal forms in first-order logic" in Formal Systems and Recursive Functions (Proceedings of the Eighth Logic Colloquium), J.N. Crossley and M.A.E. Dummett (eds.), Amsterdam: North-Holland, pp. 47-90.

- Kieseppa, I.A., 1996, Truthlikeness for Multidimensional, Quantitative Cognitive Problems, Dordrecht: Kluwer.

- –––, 1996, "Truthlikeness for Hypotheses Expressed in Terms of n Quantitative Variables", Journal of Philosophical Logic, 25: 109-134.

- –––, 1996, "On the Aim of the Theory of Verisimilitude", Synthese, 107: 421-438

- Kuipers, T. A. F. (ed.), 1987a, What is closer-to-the-truth? A parade of approaches to truthlikeness (Poznan Studies in the Philosophy of the Sciences and the Humanities, Volume 10), Amsterdam: Rodopi.

- –––, 1987b, "A structuralist approach to truthlikeness", in Kuipers 1987a, pp. 79-99.

- –––, 1987c, "Truthlikeness of stratified theories", in Kuipers 1987a, pp. 177-186.

- –––, 1992, "Naive and refined truth approximation", Synthese, 93: 299-341.

- Miller, D., 1972, "The Truth-likeness of Truthlikeness", Analysis, 33/2: 50-55.

- –––, 1974a, "Popper's Qualitative Theory of Verisimilitude", The British Journal for the Philosophy of Science, 25: 166-177.

- –––, 1974b, "On the Comparison of False Theories by Their Bases", The British Journal for the Philosophy of Science, 25/2: 178-188

- –––, 1975a, "The Accuracy of Predictions", Synthese 30/1-2: 159-191.

- –––, 1975b, "The Accuracy of Predictions: A Reply", Synthese, 30/1-2: 207-21.

- –––, 1976, "Verisimilitude Redeflated", The British Journal for the Philosophy of Science, 27/4: 363-381.

- –––, 1977, "Bunge's Theory of Partial Truth Is No Such Thing", Philosophical Studies, 31/2: 147-150.

- –––, 1978a, "The Distance Between Constituents", Synthese, 38/2: 197-212.

- –––, 1978b, "On Distance from the Truth as a True Distance", in Hintikka et al. (eds.), Essays on Mathematical and Philosophical Logic, Dordrecht: Reidel, pp. 415-435.

- –––, 1982, "Truth, Truthlikeness, Approximate Truth", Fundamenta Scientiae, 3/1: 93-101

- –––, 1984a, "Impartial Truth", in Skala et al. (eds.), Aspects of Vagueness, Dordrecht: Reidel, pp. 75-90.

- –––, 1984b, "A Geometry of Logic", in Skala et al. (eds.), Aspects of Vagueness, Dordrecht: Reidel, pp. 91-104.

- –––, 1990, "Some Logical Mensuration", The British Journal for the Philosophy of Science, 41: 281-90.

- –––, 1994, Critical Rationalism: A Restatement and Defence, Chicago: Open Court.

- –––, 2006, Out Of Error: Further Essays on Critical Rationalism, Aldershot: Ashgate.

- Newton-Smith, W.H., 1981, The Rationality of Science, Boston: Routledge & Kegan Paul.

- Niiniluoto, I., 1977, "On the Truthlikeness of Generalizations’, in Butts et al. (eds.), Basic Problems in Methodology and Linguistics, Dordrecht: Reidel, pp. 121-147.

- –––, 1978a, "Truthlikeness: Comments on Recent Discussion", Synthese, 38: 281-329.

- –––, 1978b, "Verisimilitude, Theory-Change, and Scientific Progress", in I. Niiniluoto et al. (eds.), The Logic and Epistemology of Scientific Change, Acta Philosophica Fennica,, 30/2-4, Amsterdam: North-Holland, pp. 243-264.

- –––, 1979a, "Truthlikeness in First-Order Languages", in I. Niiniluoto et al. (eds.), Essays on Mathematical and Philosophical Logic, Dordrecht: Reidel, pp. 437-458.

- –––, 1979b, "Degrees of Truthlikeness: From Singular Sentences to Generalizations", The British Journal for the Philosophy of Science, 30: 371-376.

- –––, 1982a, "Scientific Progress", Synthese, 45/3: 427-462.

- –––, 1982b, "What Shall We Do With Verisimilitude?", Philosophy of Science, 49: 181-197.

- –––, 1983, "Verisimilitude vs. Legisimilitude", Studia Logica, XLII/2-3: 315-329.

- –––, 1986, "Truthlikeness and Bayesian Estimation", Synthese, 67: 321-346.

- –––, 1987a, "How to Define Verisimilitude", in Kuipers 1987a, pp. 11-23.

- –––, 1987b, "Verisimilitude with Indefinite Truth", in Kuipers 1987a, pp. 187-195.

- –––, 1987c, Truthlikeness, Dordrecht: Reidel.

- –––. "Reference Invariance and Truthlikeness", in Philosophy of Science 64 (1997), pp. 546-554.

- –––, 1998, "Survey Article: Verisimilitude: The Third Period", in The British Journal for the Philosophy of Science, 49: 1-29.

- –––, 1999, Critical Scientific Realism, Oxford: Clarendon.

- Oddie, G., 1978, "Verisimilitude and distance in logical space", Acta Philosophica Fennica, 30/2-4: 227-243.

- –––, 1981, "Verisimilitude reviewed", The British Journal for the Philosophy of Science, 32: 237-65.

- –––, 1982, "Cohen on verisimilitude and natural necessity", Synthese, 51: 355-79.

- –––, 1986a, Likeness to Truth, (Western Ontario Series in Philosophy of Science), Dordrecht: Reidel.

- –––, 1986b, "The poverty of the Popperian program for truthlikeness", Philosophy of Science, 53/2: 163-78.

- –––, 1987a, "The picture theory of truthlikeness", in Kuipers 1987a, pp. 25-46.

- –––. 1987b, "Truthlikeness and the convexity of propositions" in What is Closer-to-the-Truth?, T. Kuipers (ed.), Amsterdam: Rodopi, pp. 197-217.

- –––, 1990, "Verisimilitude by power relations", British Journal for the Philosophy of Science, 41: 129-35.

- –––, 1997a, "Truthlikeness" in D. Borchert (ed.), The Encyclopedia of Philosophy Supplement, New York: Macmillan, pp. 574-6.

- –––. 1997b, "Conditionalization, cogency and cognitive value" The British Journal for the Philosophy of Science, 48: 533-41.

- –––, 2001, "Truth, Verification, Confirmation, Verisimilitude", in N.J. Smelser et al. (eds.), International Encyclopedia of the Social and Behavioral Sciences, Oxford: Elselvier.

- –––, 2007, "Truthlikeness and Value", in Festschrift for Illkka Niiniluoto, College Publications: London, forthcoming.

- Pearce, D., 1983, "Truthlikeness and translation: a comment on Oddie", The British Journal for the Philosophy of Science, 34: 380-5.

- Popper, K. R., 1963, Conjectures and Refutations, London: Routledge.

- –––, 1976, "A note on verisimilitude", The British Journal for the Philosophy of Science, 27/2: 147-159.

- Psillos, S., 1999, Scientific Realism: How Science Tracks Truth, London: Routledge.

- Rosencrantz, R., 1975, "Truthlikeness: comments on David Miller", Synthese, 30/1-2: 193-7.

- Ryan, M. and P.Y. Schobens, 1995, "Belief revision and verisimilitude", Notre Dame Journal of Formal Logic, 36: 15-29.

- Schurz, G., and Weingartner, P., 1987, "Verisimilitude defined by relevant consequence-elements", in Kuipers 1987a, pp. 47-77.

- Tarski, A., 1969, "The concept of truth in formalized languages" in J. Woodger (ed.), Logic, Semantics, and Metamathematics, Oxford: Clarendon.

- Tichý, P., 1974, "On Popper's definitions of verisimilitude", The British Journal for the Philosophy of Science, 25: 155-160.

- –––, 1976, "Verisimilitude Redefined", The British Journal for the Philosophy of Science, 27: 25-42.

- –––, 1978, "Verisimilitude Revisited" Synthese, 38: 175-196.

- Tooley, M., 1977, "The Nature of Laws", Canadian Journal of Philosophy, 7: 667-698.

- Urbach, P., 1983, "Intimations of similarity: the shaky basis of verisimilitude", The British Journal for the Philosophy of Science, 34: 166-75.

- Vetter, H., 1977, "A new concept of verisimilitude", Theory and Decision, 8: 369-75.

- Volpe, G., 1995, "A semantic approach to comparative verisimilitude", The British Journal for the Philosophy of Science, 46: 563-82.

- Weston, T., 1992, "Approximate truth and scientific realism", Philosophy of Science, 59: 53-74.

- Zamora Bonilla, J. P., 1992, "Truthlikeness Without Truth: A Methodological Approach", Synthese, 93: 343–72.

- –––, 1996, "Verisimilitude, Structuralism and Scientific Progress", Erkenntnis, 44: 25–47.

- –––, 2000, "Truthlikeness, Rationality and Scientific Method", Synthese, 122: 321-335.

- –––, 2002, "Verisimilitude and the Dynamics of Scientific Research Programmes", Journal for the General Philosophy of Science, 33: 349-368

- Zwart, S. D., 2001, Refined Verisimilitude, Dordrecht: Kluwer.

- Zwart, S. D., and M. Franssen, 2007, "An impossibility theorem for verisimilitude", Synthese, forthcoming in print.