Dynamic Choice

Sometimes a series of choices does not serve one's concerns well even though each choice in the series seems perfectly well-suited to serving one's concerns. In such cases, one has a dynamic choice problem. Otherwise put, one has a problem related to the fact that one's choices are spread out over time. There is a growing philosophical literature, which crosses over into psychology and economics, on the obstacles to effective dynamic choice. This literature examines the challenging choice situations and problematic preference structures that can prompt dynamic choice problems. It also proposes solutions to such problems. Increasingly, familiar but potentially puzzling phenomena (including, for example, self-destructive addictive behavior and dangerous environmental destruction) have been illuminated by dynamic choice theory. This suggests that the philosophical and practical significance of dynamic choice theory is quite broad.

- 1. Challenging Choice Situations, Problematic Preference Structures, and Dynamic Choice Problems

- 2. Solving Dynamic Choice Problems

- 3. Some Familiar Phenomena Illuminated by Dynamic Choice Theory

- 4. Concluding Remarks

- Bibliography

- Other Internet Resources

- Related Entries

1. Challenging Choice Situations, Problematic Preference Structures, and Dynamic Choice Problems

Effective choice over time can be extremely difficult given certain challenging choice situations or problematic preference structures, such as the ones described below. As will become apparent, these choice situations or preference structures can prompt a series of decisions that serve one's large-scale, ongoing concerns very badly. (Note that, as is standard in dynamic choice theory, the discussion in this entry leaves room for non-selfish preferences and concerns; it thus leaves room for the possibility that one can be determined to serve one's preferences and concerns as well as possible without being an egoist.)

1.1 Incommensurable Alternatives

Let us first consider situations that involve choosing between incommensurable alternatives.

According to the standard conception of incommensurability explored extensively by philosophers such as Joseph Raz and John Broome (see, for example, Raz 1986 and Broome 2000), two alternatives are incommensurable if neither alternative is better than the other and yet the two alternatives are not equally good.

It might seem as though the idea of incommensurable alternatives does not really make sense. For if the value of an alternative (to a particular agent) is neither higher nor lower than the value of another alternative, then the values of the two alternatives must, it seems, be equal. But this assumes that there is a common measure that one can use to express and rank the value of every alternative; and, if there are incommensurable alternatives, then this assumption is mistaken.

But is there any reason to believe that there are incommensurable alternatives? Well, if all alternatives were commensurable, then whenever one faced two alternatives neither of which was better than the other, slightly improving one of the alternatives would, it seems, ‘break the tie’ and render one alternative, namely the improved alternative, superior. But there seem to be cases in which there are two alternatives such that (i) neither alternative is better than the other and (ii) this feature is not changed by slightly improving one of the alternatives. Consider, for example, the following case: For Kay, neither of the following alternatives is better than the other:

(A1) going on a six-day beach vacation with her children

(A2) taking a two-month oil-painting course.

Furthermore, although the alternative

(A1+) going on a seven-day beach vacation with her children

is a slight improvement on A1, A1+ is not better than A2. This scenario seems possible, and if it is, then we have a case of incommensurable alternatives. For, in this case, A1 is not better than A2, A2 is not better than A1, and yet A1 and A2 are not equally good. If A1 and A2 were equally good, then an improvement on A1, such as A1+, would be better than A2. But, for Kay, A1+ is not better than A2.
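The structure of Kay's case can be made concrete with a small sketch. The encoding below is an illustrative assumption rather than anything drawn from the literature: "better than" is stored as a set of ordered pairs that may leave some pairs unranked, and two options count as equally good only if every third option relates to both of them in the same way (which mirrors the tie-breaking argument above). The helper names are hypothetical.

```python
# Hypothetical encoding: (x, y) in BETTER means x is better than y.
# Pairs that are absent are simply unranked.
BETTER = {("A1+", "A1")}  # the only ranking Kay's case asserts


def better(x, y):
    return (x, y) in BETTER


def equally_good(x, y, options):
    """Assumed test for equality: neither option is better, and every
    other option relates to x and to y in exactly the same way."""
    if better(x, y) or better(y, x):
        return False
    others = [z for z in options if z not in (x, y)]
    return all(
        better(z, x) == better(z, y) and better(x, z) == better(y, z)
        for z in others
    )


def incommensurable(x, y, options):
    """Neither better than the other, and not equally good either."""
    return (
        not better(x, y)
        and not better(y, x)
        and not equally_good(x, y, options)
    )


options = ["A1", "A1+", "A2"]
print(incommensurable("A1", "A2", options))   # True
print(incommensurable("A1+", "A2", options))  # True
print(incommensurable("A1", "A1+", options))  # False: A1+ is better than A1
```

On this encoding, A1 and A2 fail the equality test precisely because the improvement A1+ beats A1 without beating A2.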

Things are complicated somewhat if one allows that two alternatives can fail to be precisely comparable, but can still be roughly evaluated in terms of a common measure. Given this possibility, at least some of the cases that are standardly classified as cases of incommensurability are cases of imprecise comparability. For the purposes of this discussion, this complication can be put aside, since the dynamic choice problem that will be discussed in relation to incommensurability also applies to cases of imprecise comparability, and so cases of the latter sort do not need to be carefully separated out.

Although there is still some controversy concerning the possibility of incommensurable alternatives (compare, for example, Raz 1997 and Regan 1997), there is widespread agreement that we often treat alternatives as incommensurable. Practically speaking, determining the value of two very different alternatives in terms of a common measure, even if this is possible, may be too taxing. It is thus often natural to treat two alternatives as though they are neither equal nor one better than the other.

The existence or appearance of incommensurable alternatives can give rise to dynamic choice problems. Consider Abraham's case, as described by John Broome in his work on incommensurability:

God tells Abraham to take his son Isaac to the mountain, and there sacrifice him. Abraham has to decide whether or not to obey. Let us assume this is one of those choices where the alternatives are incommensur[able]. The option of obeying will show submission to God, but the option of disobeying will save Isaac's life. Submitting to God and saving the life of one's son are such different values that they cannot be weighed determinately against each other; that is the assumption. Neither option is better than the other, yet we also cannot say that they are equally good. (Broome 2001, 114)

Given that the options of submitting to God and saving Isaac are incommensurable (and even if they were only incommensurable as far as a reasonable person could tell), Abraham's deciding to submit to God seems rationally permissible. So it is easy to see how Abraham's situation might prompt him to set out for the mountain in order to sacrifice Isaac. But it is also easy to see how, once at the foot of the mountain, Abraham might decide to turn back. For, even though, as Broome puts it, “turning back at the foot of the mountain is definitely worse than never having set out at all” since “trust between father and son [has already been] badly damaged” (2001, 115), the option of saving Isaac by turning back and the option of submitting to God and sacrificing Isaac may be incommensurable. This becomes apparent if one recalls Kay's case and labels Abraham's above-mentioned options as follows:

(B1) saving Isaac by turning back at the foot of the mountain

(B1+) saving Isaac by refusing to set out for the mountain

(B2) submitting to God and sacrificing Isaac.

Even though B1+ is better than B1, both B1+ and B1 may be incommensurable with B2. But if B1 is incommensurable with B2, then Abraham could, once at the foot of the mountain, easily decide to opt for B1 over B2. Given that B1 is worse than B1+, Abraham could thus easily end up with an outcome that is worse than another that was available to him, even if each of his choices makes sense given the value of the alternatives he faces.

The moral, in general terms, is that in cases of incommensurability (or cases in which it is tempting to treat two alternatives as incommensurable), decisions that seem individually defensible can, when combined, result in a series of decisions that fit together very poorly relative to the agent's large-scale, ongoing concerns.

1.2 Present-Biased Preferences

Another source of dynamic choice problems is present-biased preferences.

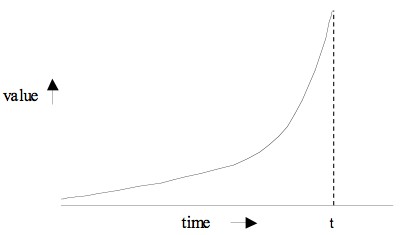

Like other animals, humans give more weight to present satisfaction than to future satisfaction. In other words, we discount future utility. Insofar as one discounts future utility, one prefers, other things equal, to get a reward sooner rather than later; relatedly, the closer one gets to a future reward, the more the reward is valued. If we map the value (to a particular agent) of a given future reward as a function of time, we get a discount curve, such as in Figure 1:

Figure 1. The discounted value of a reward gradually increases as t, the time at which the reward will be available, approaches.

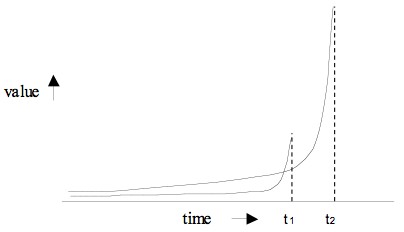

Research in experimental psychology (see, for example, Kirby & Herrnstein 1995, Millar & Navarick 1984, Solnick et al. 1980, and Ainslie 2001) suggests that, given how animals, including humans, discount future utility, there are plenty of cases in which the discount curves from two rewards, one a small reward and the other a larger later reward, cross, as in Figure 2:

Figure 2. Two crossing discount curves, one tracking the discounted value of a small reward that will be available at t1 and the other tracking the discounted value of a large reward that will be available at t2.

In such cases, the agent's discounting of future utility induces a preference reversal with respect to the two possible rewards. When neither reward is imminent, before the discount curves cross, the agent consistently prefers the larger later reward over the smaller earlier reward. But when the opportunity to accept the small reward is sufficiently close, the discounted value of the small reward catches up with and then overtakes the discounted value of the larger later reward. As the discount curves cross, the agent's preferences reverse and she prefers the small reward over the larger later reward.
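A preference reversal of this kind can be reproduced numerically. The sketch below assumes a hyperbolic discount function, value = amount / (1 + k × delay); that functional form, the rate k, and the reward sizes and times are all illustrative assumptions, since the text above says only that the curves cross. (With exponential discounting at a single constant rate, the ratio of the two discounted values never changes, so the curves would not cross.)

```python
def discounted_value(amount, delay, k=1.0):
    """Assumed hyperbolic discounting: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)


# Illustrative numbers only.
SMALL, T1 = 50.0, 10.0    # small reward, available at time t1
LARGE, T2 = 100.0, 15.0   # larger reward, available at time t2 > t1


def preferred(now):
    """Which reward has the higher discounted value at time `now` (<= t1)?"""
    small = discounted_value(SMALL, T1 - now)
    large = discounted_value(LARGE, T2 - now)
    return "small-sooner" if small > large else "large-later"


for now in (0, 5, 7, 10):
    print(now, preferred(now))
# 0 large-later
# 5 large-later
# 7 small-sooner
# 10 small-sooner
```

With these numbers the two curves cross at time 6: before that point the agent prefers the larger later reward, and after it she prefers the small one.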

Discounting-induced preference reversals make consistent and efficient choice over time a challenge. An agent subject to discounting-induced preference reversals can easily find herself performing a series of actions she planned against and will soon regret. Consider the agent who wants to save for a decent retirement but, as each opportunity to save approaches, prefers to spend her potential retirement contribution on just one more trivial indulgence before finally tightening her belt for the sake of the future satisfaction she feels is essential to her well-being. Though this agent consistently plans to save for her retirement, her plans can be consistently thwarted by her discounting-induced preference reversals. Her life may thus end up looking very different from the sort of life she wanted.

1.3 Intransitive Preferences

An agent's preference structure need not be changing over time for it to prompt dynamic choice problems. Such problems can also be prompted by preferences that are stable but intransitive.

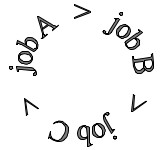

One's preferences count as transitive if they satisfy the following condition: for all x, y, and z, if one prefers x to y, and y to z, then one also prefers x to z. If one's preferences over a set of options do not satisfy this condition, then these preferences count as intransitive. When one's preferences over a set of options are intransitive, then one cannot rank the options from most preferred to least preferred. This holds even if one's preferences over the options are complete, in the sense that all the options are ranked with respect to one another. Suppose, for example, that one prefers job A to job B, job B to job C, but job C to job A. In this case, one's complete preferences over the set {job A, job B, job C} form a preference loop, which can be represented as follows:

where x > y is to be read as x is preferred to y.

Could an agent really have intransitive preferences? Studies in experimental economics (see Tversky 1969, and Manzini & Mariotti 2002, for some examples) suggest that intransitive preferences are quite common. Consideration of the following situation might help make it clear how intransitive preferences can arise (whether or not they are rational). Suppose Jay can accept one of three jobs: job A is very stimulating but low-paying; job B is somewhat stimulating and pays decently; job C is not stimulating but pays very well. Given this situation, one can imagine Jay having the following preferences: He prefers job A over job B because the difference between having a low-paying job and a decently-paying job is not significant enough to make Jay want to pass up a very stimulating job. Similarly, he prefers job B over job C because the difference between having a decently-paying job and a high-paying job is not significant enough to make Jay want to pass up a stimulating job. But he prefers job C over job A because the difference between having a high-paying job and a low-paying job is significant enough to make Jay want to pass up even a very stimulating job.
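The transitivity condition can be checked mechanically against Jay's stated preferences. The following is a minimal sketch; representing preferences as a set of ordered pairs, and the function name, are illustrative choices rather than anything the literature prescribes.

```python
from itertools import permutations

# Jay's pairwise preferences: (x, y) means job x is preferred to job y.
PREFERS = {("A", "B"), ("B", "C"), ("C", "A")}


def transitivity_violations(prefers):
    """Return every triple (x, y, z) with x > y and y > z but not x > z."""
    items = {option for pair in prefers for option in pair}
    return sorted(
        (x, y, z)
        for x, y, z in permutations(items, 3)
        if (x, y) in prefers and (y, z) in prefers and (x, z) not in prefers
    )


print(transitivity_violations(PREFERS))
# [('A', 'B', 'C'), ('B', 'C', 'A'), ('C', 'A', 'B')]
```

Each of the three triples is a violation, which is just another way of saying that the preferences form a loop. Replacing the pair ("C", "A") with ("A", "C") yields an empty list.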

Given the famous “money pump argument,” which is suggested by Frank Ramsey's reasoning concerning Dutch books (1926) and is developed by Donald Davidson, J. McKinsey, and Patrick Suppes (1955), it is clear that intransitive preferences can be problematic. In particular, they can prompt an agent to accept a series of trade offers that leaves him worse off, relative to his preferences, than when he started. To see this, suppose that Alex has the following intransitive preferences: he prefers owning a computer of type A to owning a computer of type B, owning a computer of type B to owning a computer of type C, and owning a computer of type C to owning a computer of type A. Suppose also that Alex owns a computer of type C and a hundred dollars in spending money. Suppose finally that, given his preferences between different computer types, Alex prefers (i) owning a computer of type B and one less dollar of spending money over owning a computer of type C, (ii) owning a computer of type A and one less dollar of spending money over owning a computer of type B, and (iii) owning a computer of type C and one less dollar of spending money over owning a computer of type A. Then a series of unanticipated trade opportunities can spell trouble for Alex. In particular, given the opportunity to trade his current (type C) computer and a dollar for a computer of type B, Alex's preferences will prompt him to make the trade. Given the further opportunity to trade his current (type B) computer and a dollar for a computer of type A, Alex's preferences will prompt him to trade again. And given the opportunity to trade his current (type A) computer and a dollar for a computer of type C, Alex's preferences will prompt him to make a third trade. But this series of trades leaves Alex with the type of computer he started off with and only 97 dollars. And, given that unexpected trading opportunities may keep popping up, Alex's situation may continue to deteriorate. Even though he values his spending money, his preferences make him susceptible to being used as a ‘money pump.’
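Alex's predicament can be traced step by step. The sketch below is a simple simulation under the stipulations of the example (each preferred swap is worth one dollar to Alex); the function name and the rule that Alex trades myopically, one offer at a time, are illustrative assumptions.

```python
# (x, y) in PREFERS means Alex prefers owning type x to owning type y,
# strongly enough to pay one dollar for the swap.
PREFERS = {("A", "B"), ("B", "C"), ("C", "A")}


def run_money_pump(start_type, cash, offers, fee=1):
    """Accept each offered type whenever it is preferred to the one held."""
    held = start_type
    for offered in offers:
        if (offered, held) in PREFERS and cash >= fee:
            held, cash = offered, cash - fee
    return held, cash


print(run_money_pump("C", 100, ["B", "A", "C"]))  # ('C', 97)
```

After three trades Alex holds the same type of computer and three fewer dollars. If the same three offers keep recurring, the loop continues until his spending money is gone.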

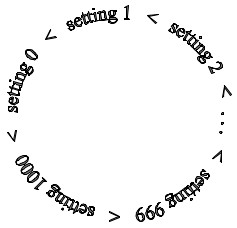

Even if he does not serve as a money pump, an agent with intransitive preferences can get into a great deal of trouble. To see this, consider Warren Quinn's “puzzle of the self-torturer” (1993): Suppose someone — who, for reasons that will become apparent, Quinn calls the self-torturer — has a special electric device attached to him. The device has 1001 settings: 0, 1, 2, 3, …, 1000 and works as follows: moving up a setting raises, by a tiny increment, the amount of electric current applied to the self-torturer's body. The increments in current are so small that the self-torturer cannot tell the difference between adjacent settings. He can, however, tell the difference between settings that are far apart. And, in fact, there are settings at which the self-torturer would experience excruciating pain. Once a week, the self-torturer can compare all the different settings. He must then go back to the setting he was at and decide if he wants to move up a setting. If he does so, he gets $10,000, but he can never permanently return to a lower setting. Like most of us, the self-torturer would like to increase his fortune but also cares about feeling well. Since the self-torturer cannot feel any difference in comfort between adjacent settings but gets $10,000 at each advance, he prefers, for any two consecutive settings s and s+1, stopping at s+1 to stopping at s. But, since he does not want to live in excruciating pain, even for a great fortune, he also prefers stopping at a low setting, such as 0, over stopping at a high setting, such as 1000.

Given his preferences, the self-torturer cannot rank the setting options he faces from most preferred to least preferred. More specifically, his preferences incorporate the following preference loop:

Relatedly, the self-torturer's preferences over the available setting options are intransitive. If his preferences were transitive, then, given that he prefers setting 2 to setting 1 and setting 1 to setting 0, he would prefer setting 2 to setting 0. Given that he also prefers setting 3 to setting 2, he would (assuming transitivity) prefer setting 3 to setting 0. Given that he also prefers setting 4 to setting 3, he would (assuming transitivity) prefer setting 4 to setting 0. Continuing with this line of reasoning leads to the conclusion that he would, if his preferences were transitive, prefer setting 1000 to setting 0. Since he does not prefer setting 1000 to setting 0, his preferences are intransitive. And this intransitivity can lead the self-torturer down a terrible path. In particular, if, each week, the self-torturer follows his preference over the pair of settings he must choose between, he will end up in a situation that he finds completely unacceptable. This is quite disturbing, particularly once one realizes that, although the situation of the self-torturer is pure science fiction, the self-torturer is not really alone in his predicament. As Quinn stresses, “most of us are like him in one way or another. [For example, most of us] like to eat but also care about our appearance. Just one more bite will give us pleasure and won't make us look fatter; but very many bites will” (Quinn 1993, 199).
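The self-torturer's path can be simulated directly. The sketch below encodes only the two preferences the puzzle states (each setting is preferred to the one just below it, and setting 0 is preferred to setting 1000) and assumes, for illustration, that each week he simply follows his preference over the pair of settings in front of him. The function name is hypothetical.

```python
PAYMENT = 10_000      # dollars gained per step up, as in Quinn's example
TOP_SETTING = 1000


def prefers(a, b):
    """True if stopping at setting a is preferred to stopping at setting b.

    Encodes only the two preferences the puzzle states; all other pairs
    are left unspecified and return False.
    """
    if a == b + 1:
        return True   # one step up: no felt difference, $10,000 richer
    if (a, b) == (0, TOP_SETTING):
        return True   # no pain is preferred to excruciating pain
    return False


setting, money = 0, 0
while setting < TOP_SETTING and prefers(setting + 1, setting):
    setting, money = setting + 1, money + PAYMENT

print(setting, money)        # 1000 10000000
print(prefers(0, setting))   # True
```

Every individual step accords with his pairwise preference, yet he ends at a setting that he disprefers to the one he started from.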

Given the money pump argument and the puzzle of the self-torturer, we can, it seems, conclude that although intransitive preferences are sometimes understandable, acting on them can be far from sensible.

1.4 Autonomous Benefit Cases

The discussions in the preceding two sections suggest that the task of serving one's concerns well might be promoted by the ability to choose counter-preferentially. This point is reinforced by the possibility of autonomous benefit cases.

In autonomous benefit cases, one benefits from forming a certain intention but not from carrying out the associated action. The autonomous benefit cases that have figured most prominently in the literature on dynamic choice are those in which carrying out the action associated with the beneficial intention is detrimental rather than just unrewarding. Among the most famous autonomous benefit cases is Gregory Kavka's “toxin puzzle” (1983). In Kavka's invented case,

an eccentric billionaire…places before you a vial of toxin…[and provides you with the following information:] If you drink [the toxin], [it] will make you painfully ill for a day, but will not threaten your life or have any lasting effects…. The billionaire will pay you one million dollars tomorrow morning if, at midnight tonight, you intend to drink the toxin tomorrow afternoon…. You need not drink the toxin to receive the money; in fact, the money will already be in your bank account hours before the time for drinking it arrives, if you succeed…. [The] arrangement of…external incentives is ruled out, as are such alternative gimmicks as hiring a hypnotist to implant the intention… (Kavka 1983, 33-4)

Part of what is interesting about this case is that, even though most people would gladly drink the toxin for a million dollars, getting the million dollars is not that easy. This is because one does not get the million dollars for drinking the toxin. Indeed, one does not get anything but a day of illness for drinking the toxin. As Kavka explains, by the time the toxin is to be consumed, one either already has the million in one's account or not; and drinking the toxin will not get one any (additional) funds. Assuming one has no desire to be ill for nothing, drinking the toxin seems to involve acting counter-preferentially — and this is, if not impossible, at least no easy feat. So, given a clear understanding of the situation, one is likely to find it difficult, if not impossible, to form the intention to drink the toxin. Presumably, one cannot form the intention to drink the toxin if one is confident that one will not drink it. If only one could somehow rely on the cooperation of one's future self, one could then genuinely form the intention to drink the toxin and thus get the million — a wonderful result from the perspective of both one's current and one's future self. But, alas, one's future self will, it seems, have no reason to drink the toxin when the time for doing so arrives.

Here again we have a situation in which doing well by oneself is not easy.

2. Solving Dynamic Choice Problems

Given how much trouble dynamic choice problems can cause, it is natural to wonder if and how they can be solved. Various solutions of varying scope have been proposed in the literature on dynamic choice. The first three subsections that follow focus on ideas regarding the practical issue of dealing with dynamic choice problems. The fourth subsection focuses on attempts at resolving the theoretical puzzles concerning rational choice raised by various dynamic choice problems.

2.1 Rational Irrationality

Two strategies that we can sometimes use to solve (in the sense of practically deal with) dynamic choice problems are suggested in Kavka's description of the toxin puzzle. One strategy is to use gimmicks that cause one to reason or choose in a way that does not accord with one's preferences. The other strategy involves the arrangement of external incentives. Although such maneuvers are ruled out in Kavka's case, they can prove useful in less restrictive cases. This subsection considers the former strategy and the next subsection considers the latter strategy.

If one accepts the common assumption that causing oneself to reason or choose in a way that does not accord with one's preferences involves rendering oneself irrational, the former strategy can be thought of as aiming at rationally-induced irrationality. A fanciful but clear illustration of this strategy is presented in Derek Parfit's work (1984). In Parfit's example (which is labeled Schelling's Answer to Armed Robbery because it draws on Thomas Schelling's view that “it is not a universal advantage in situations of conflict to be inalienably and manifestly rational in decision and motivation” (Schelling 1960, 18)), a robber breaks into someone's house and orders the owner, call him Moe, to open the safe in which he hoards his gold. The robber threatens to shoot Moe's children unless Moe complies. But Moe realizes that both he and his children will probably be shot even if he complies, since the robber will want to get rid of them so that they cannot record his getaway car information and get it to the police (who will be arriving from the nearest town in about 15 minutes in response to Moe's call, which was prompted by the first signs of the break-in). Fortunately, Moe has a special drug at hand that, if consumed, causes one to be irrational for a brief period. Recognizing that this drug is his only real hope, Moe consumes the drug and immediately loses his wits. He begins “reeling about the room” saying things like “Go ahead. I love my children. So please kill them” (Parfit 1984, 13). Given Moe's current state, the robber cannot do anything that will induce Moe to open the safe. There is no point killing Moe or his children. The only sensible thing to do now is to hurry off before the police arrive.

Given that consuming irrationality drugs and even hiring hypnotists are normally not feasible solutions to our dynamic choice problems, the possibility of rationally inducing irrationality may seem practically irrelevant. But it may be that we often benefit from the non-conscious employment of what is more or less a version of this strategy. We sometimes, for example, engage in self-deception or indulge irrational fears or superstitions when it is convenient to do so. As such, many of us might, in toxin-type cases, be naturally prone to dwell on and indulge superstitious fears, like the fear that one will somehow be jinxed if one manages to get the million dollars but then does not drink the toxin. Given this fear, one might be quite confident that one will drink the toxin if one gets the million; and so it might be quite easy for one to form the intention to drink the toxin. Although this is not a solution to the toxin puzzle that one can consciously plan on using (nor is it one that resolves the theoretical issues raised by the case), it may nonetheless often help us effectively cope with toxin-type cases. (For a clear and compact discussion concerning self-deception, “motivationally biased belief,” and “motivated irrationality” more generally, see, for example, Mele 2004.)

2.2 The Arrangement of External Incentives

The other above-mentioned strategy that is often useful for dealing with certain dynamic choice problems is the arrangement of external incentives that make it worthwhile for one's future self to cooperate with one's current plans. This strategy can be particularly useful in dealing with discounting-induced preference reversals. Consider again the agent who wants to save for a decent retirement but, as each opportunity to save approaches, prefers to spend her potential retirement contribution on just one more trivial indulgence before finally tightening her belt for the sake of the future satisfaction she feels is essential to her well-being. If this agent's plans are consistently thwarted by her discounting-induced preference reversals, she might come to the conclusion that she will never manage to save for a decent retirement if she doesn't supplement her plans with incentives that will prevent the preference reversals that are causing her so much trouble. If she is lucky, she may find an existing precommitment device that she can take advantage of. Suppose, for example, that she can sign up for a program at work that, starting in a month, automatically deposits a portion of her pay into a retirement fund. If she cannot remove deposited funds without a significant penalty, and if she must provide a month's notice to discontinue her participation in the program, signing up for the program might change the cost-and-reward structure of spending her potential retirement contributions on trivial indulgences enough to make this option consistently dispreferred. If no ready-made precommitment device is available, she might be able to create a suitable one herself. If, for example, she is highly averse to breaking promises, she might be able to solve her problem by simply promising a concerned friend that she will henceforth deposit a certain percentage of her pay into a retirement fund.
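The effect of a precommitment device on the cost-and-reward structure can be illustrated with a toy calculation. Everything in the sketch below is an assumption made for illustration: the hyperbolic discount function, the sizes and timing of the two rewards, and the size of the penalty. It shows only that a large enough penalty attached to the indulgence can keep its discounted value below that of the retirement benefit at every moment of choice.

```python
def discounted_value(amount, delay, k=1.0):
    """Assumed hyperbolic discounting: amount / (1 + k * delay)."""
    return amount / (1.0 + k * delay)


# Illustrative numbers only.
INDULGENCE, T1 = 50.0, 10.0    # value of the trivial indulgence, available at t1
RETIREMENT, T2 = 100.0, 15.0   # value of the retirement benefit, available at t2


def chooses_indulgence(now, penalty=0.0):
    """Does spending look better than saving at time `now`, given a penalty?"""
    spend = discounted_value(INDULGENCE - penalty, T1 - now)
    save = discounted_value(RETIREMENT, T2 - now)
    return spend > save


times = range(0, 11)
print(any(chooses_indulgence(t) for t in times))                # True
print(any(chooses_indulgence(t, penalty=40.0) for t in times))  # False
```

Without the penalty, the agent's preference reverses as the indulgence draws near; with it, spending is dispreferred at every time checked, so no reversal occurs.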

In some cases, one might not be confident that one can arrange for external incentives that will get one's future self to voluntarily cooperate with one's current plans. One might therefore favor the related but more extreme strategy of making sure that one's future self does not have the power to thwart one's current plans. Rather than simply making cooperation more worthwhile (and thus, in a sense, more compelling), this strategy involves arranging for the use of force (which compels in a stronger sense of the term). A fictional but particularly famous employment of the strategy (which is discussed in, for example, Elster 1984) is its employment by Odysseus in Homer's Odyssey. Because he longed to hear the enchanting singing of the Sirens, but feared that he would thereby be lured into danger, Odysseus instructed his companions to tie him to the mast of his ship and to resist his (anticipated) attempts at freeing himself from the requested bonds.

2.3 Symbolic Utility

Another strategy for dealing with certain dynamic choice problems — this one proposed by Robert Nozick (1993) — is the strategy of investing actions with symbolic utility (or value) and then allowing oneself to be influenced not only by the causal significance of one's actions, but also by their symbolic significance. According to Nozick, “actions and outcomes can symbolize still further events … [and] draw upon themselves the emotional meaning (and utility…) of these other events” (26). If “we impute to actions… utilities coordinate with what they symbolize, and we strive to realize (or avoid) them as we would strive for what they stand for” (32), our choices will differ from what they would be if we considered only the causal significance of our actions. Consider, for example, the case of the self-torturer. Suppose the self-torturer has moved up ten settings in ten weeks. He is still in a very comfortable range, but he is starting to worry about ending up at a high setting that would leave him in excruciating pain. It occurs to him that he should quit while he is ahead, and he begins to symbolically associate moving up a setting at the next opportunity with moving up a setting at every upcoming opportunity. By the time the next opportunity to move up a setting comes around, the extremely negative symbolic significance of this potential action steers him away from performing the action. For a structurally similar but more down-to-earth example, consider someone who loves overeating but is averse to becoming overweight. If this individual comes to symbolically associate having an extra helping with overeating in general and thus with becoming overweight, he may be averse to having the extra helping, even if, in causal terms, what he does in this particular case is negligible.

2.4 Plans and Resoluteness

The three strategies discussed so far suggest that, to cope with dynamic choice problems, one must either mess with one's rationality or else somehow change the payoffs associated with the options one will face. Some philosophers, including, for example, Michael Bratman (1999; 2006), David Gauthier (1986; 1994), and Edward McClennen (1990; 1997), have, however, suggested that the rational agent will not need to resort to such gimmicks as often as one might think. This is a good thing, since making the necessary arrangements can require a heavy investment of time, energy, and/or money. The key to their arguments is the idea that adopting plans can affect what it is rational for one to do even when the plans do not affect the payoffs associated with the options one will face. Relatedly, their arguments incorporate the idea that rationality at least sometimes calls for resolutely sticking to a plan even if the plan disallows an action that would fit as well or better with one's preferences than the action required by the plan. For Bratman, Gauthier, and McClennen, being resolute is not simply useful in coping with dynamic choice problems. Rather, it figures as part of a conception of rationality that resolves the theoretical puzzles concerning rationality and choice over time posed by various dynamic choice problems. In particular, it figures as part of a conception of rationality whose dictates provide intuitively sensible guidance not only in simple situations but also in challenging dynamic choice situations.

We are, as Michael Bratman (1983; 1987) stresses, planning creatures. Our reasoning is structured by our plans, which enable us to achieve complex personal and social goals. To benefit from planning, one must take plans seriously. For Bratman, this involves, among other things, (i) recognizing a general rational pressure favoring sticking to one's plan so long as there is no problem with the plan (Bratman 2006, section 8), and (ii) “taking seriously how one will see matters at the conclusion of one's plan, or at appropriate stages along the way, in the case of plans or policies that are ongoing” (1999, 86). According to Bratman, it follows from these requirements that rationality at least sometimes calls for resolutely sticking to a plan even if this is not called for by one's current preferences. Moreover, although the requirements demand showing some restraint in the pursuit of one's preferences, they prompt more sensible choices in challenging dynamic choice situations than do conceptions of rationality whose dictates do not take plans seriously.

The significance of the first requirement is easy to see. If there is a general rational pressure favoring sticking to one's plan so long as there is no problem with it, then a rational agent who takes plans seriously will not get into the sort of trouble Broome imagines Abraham might get into. When faced with incommensurable alternatives, the rational agent who takes plans seriously will adopt a plan and then stick to it even if his preferences are consistent with pursuing an alternative course of action.

What about the significance of the second requirement? For Bratman, if one is concerned about how one will see matters at the conclusion of one's plan or at appropriate stages along the way, then one will, other things equal, avoid adjusting one's plan in ways that one will regret in the future. So Bratman's planning conception of rationality includes a “no-regret condition.” And, according to Bratman, given this condition, his conception of rationality gives intuitively plausible guidance in cases of temptation like the case of the self-torturer or the retirement contribution case. In particular, it implies that, in such cases, the rational planner will adopt a plan and refrain from adjusting it. For in both sorts of cases, if the simple fact that one's preferences favor adjusting one's plan leads one to adjust it, one is bound to end up, via repeated adjustments of one's plan, in the situation one finds unacceptable. One is thus bound to experience future regret. And, while Bratman allows that regret can sometimes be misguided (which is why he does not present avoiding regret as an exceptionless imperative), there are not, for Bratman, any special considerations that would make regret misguided if one gave in to temptation in cases like the case of the self-torturer or the retirement contribution case.

Based on their own reasoning concerning rational resoluteness, Gauthier (1994) and McClennen (1990; 1997) argue that rational resoluteness can help an agent do well in autonomous benefit cases like the toxin case. They maintain that being rational is not a matter of always choosing the action that best serves one's concerns. Rather it is a matter of acting in accordance with the deliberative procedure that best serves one's concerns. Now it might seem as though the deliberative procedure that best serves one's concerns must be the deliberative procedure that calls for always choosing the action that best serves one's concerns. But autonomous benefit cases like the toxin case suggest that this is not quite right. For, the deliberative procedure that calls for always choosing the action that best serves one's concerns does not serve one's concerns well in autonomous benefit cases. More specifically, someone who reasons in accordance with this deliberative procedure does worse in autonomous benefit cases than someone who is willing to resolutely stick to a prior plan that he did well to adopt. Accordingly, Gauthier and McClennen deny that the best deliberative procedure requires one to always choose the action that best serves one's concerns; in their view, the best deliberative procedure requires some resoluteness. Relatedly, they see drinking the toxin in accordance with a prior plan to drink the toxin as rational, indeed as rationally required, given that one did well to adopt the plan; so rationality helps one benefit, rather than hindering one from benefiting, in autonomous benefit cases like the toxin case.
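The comparison between the two deliberative procedures can be made concrete with a small sketch. It is only an illustration under stated assumptions, not a reconstruction of Gauthier's or McClennen's own formal apparatus. It assumes the usual setup of the toxin case, in which the reward is paid for sincerely intending to drink rather than for drinking, and it assumes that an agent can sincerely intend to drink only if she foresees that she will in fact drink. The payoff figures and the names `PRIZE`, `TOXIN_COST`, `will_drink`, and `payoff` are hypothetical.

```python
# Illustrative figures only (assumptions, not from the literature).
PRIZE = 1_000_000   # reward for sincerely intending, in advance, to drink
TOXIN_COST = 1_000  # disvalue of the temporary illness, in the same units


def will_drink(resolute: bool, has_plan: bool) -> bool:
    """What the agent does when the time to drink arrives.

    By then the prize is already settled, so drinking can only add a cost.
    An act-by-act maximizer therefore never drinks; a resolute agent
    drinks if, and only if, she adopted a plan to do so.
    """
    return resolute and has_plan


def payoff(resolute: bool) -> int:
    """Overall result of following a deliberative procedure in the toxin case."""
    # Assumption: one can sincerely intend to drink only if one foresees
    # that one would follow through on a plan to drink.
    can_intend = will_drink(resolute, has_plan=True)
    drinks = will_drink(resolute, has_plan=can_intend)
    return (PRIZE if can_intend else 0) - (TOXIN_COST if drinks else 0)
```

On these assumptions, `payoff(True)` is 999000 and `payoff(False)` is 0: the resolute procedure does better overall even though, at the moment of drinking, the resolute agent performs an action that does not best serve her concerns at that moment.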

Note that, while there is widespread agreement that a plausible conception of rationality will imply that the self-torturer should resist the temptation to keep advancing one more setting, there is no widespread agreement that a plausible conception of rationality will imply that it is rational to drink the toxin. For those who find the idea that it is rational to drink the toxin completely counter-intuitive, its emergence figures as a problematic, rather than welcome, implication of Gauthier's and McClennen's views concerning rational resoluteness.

If Bratman and/or Gauthier and McClennen are on the right track — and this is, of course, a big if — then rational resoluteness may often be the key to keeping oneself out of potential dynamic choice traps. It may also be the key to resolving various puzzles concerning rationality and dynamic choice.

3. Some Familiar Phenomena Illuminated by Dynamic Choice Theory

Although dynamic choice problems are often presented with the help of fanciful thought experiments, their interest is not strictly theoretical. As this section highlights, they can wreak havoc in our real lives, supporting phenomena such as self-destructive addictive behavior and dangerous environmental destruction.

According to the most familiar model of self-destructive addictive behavior, such behavior results from cravings that limit “the scope for volitional control of behavior” and can in some cases be irresistible, “overwhelm[ing] decision making altogether” (Loewenstein 1999, 235-6). But, as we know from dynamic choice theory, self-destructive behavior need not be compelled. It can also be supported by challenging choice situations and problematic preference structures that prompt dynamic choice problems. Reflection on this point has led to new ideas concerning possible sources of self-destructive addictive behavior. For example, George Ainslie (2001) has developed the view that addictive habits such as smoking — which can, it seems, flourish even in the absence of irresistible craving — are often supported by discounting-induced preference reversals. Given the possibility of discounting-induced preference reversals, even someone who cares deeply about having a healthy future, and who therefore does not want to be a heavy smoker, can easily find herself smoking cigarette after cigarette, where this figures as a series of indulgences that she plans against and then regrets.
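A discounting-induced preference reversal of this kind can be illustrated numerically. The sketch below assumes the hyperbolic discount function commonly used in this literature, on which a reward of size A delayed by D is valued at A / (1 + kD). The reward sizes, times, and the names `hyperbolic_value`, `exponential_value`, and `preferred` are hypothetical choices made for illustration. An exponential discounter is included for contrast, since with a constant discount rate the ranking of two dated rewards never flips.

```python
def hyperbolic_value(amount: float, delay: float, k: float = 1.0) -> float:
    """Present value of a reward under hyperbolic discounting (assumed form)."""
    return amount / (1.0 + k * delay)


def exponential_value(amount: float, delay: float, delta: float = 0.9) -> float:
    """Present value of a reward under exponential discounting."""
    return amount * delta ** delay


def preferred(now: float, discount=hyperbolic_value) -> str:
    """Which of two dated rewards looks better when evaluated at time `now`.

    Hypothetical rewards: a small one available at time 10 (the cigarette)
    and a large one available at time 13 (the benefit of abstaining).
    """
    small_amount, small_time = 5.0, 10.0
    large_amount, large_time = 10.0, 13.0
    v_small = discount(small_amount, small_time - now)
    v_large = discount(large_amount, large_time - now)
    return "small-sooner" if v_small > v_large else "large-later"
```

With these numbers, `preferred(0)` returns "large-later" while `preferred(10)` returns "small-sooner": viewed from a distance the agent plans to abstain, but when the small reward becomes imminent her preference reverses. Passing `discount=exponential_value` yields "large-later" at both times.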

Reflection on dynamic choice theory has also led to new ideas in environmental philosophy. For example, Chrisoula Andreou (2006) argues that, although dangerous environmental destruction is usually analyzed as resulting from interpersonal conflicts of interest, such destruction can flourish even in the absence of such conflicts. In particular, where individually negligible effects are involved, as is the case among “creeping environmental problems” such as pollution, “an agent, whether it be an individual or a unified collective, can be led down a course of destruction simply as a result of following its informed and perfectly understandable but intransitive preferences” (Andreou 2006, 96). Notice, for example, that if a unified collective values a healthy community, but also values luxuries whose production or use promotes a carcinogenic environment, it can find itself in a situation that is structurally similar to the situation of the self-torturer. Like the self-torturer, such a collective must cope with the fact that while one more day, and perhaps even one more month of indulgence can provide great rewards without bringing about any significant alterations in (physical or psychic) health, “sustained indulgence is far from innocuous” (Andreou 2006, 101).
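The structure shared by the self-torturer and the indulgent collective can be sketched as follows. This is a toy model under loudly stated assumptions: damage accumulates by one unit per step, differences in damage smaller than `NOTICEABLE_GAP` units make no noticeable difference to the agent, and once a difference is noticeable the agent prefers less damage regardless of the accumulated rewards. All names and numbers are hypothetical.

```python
TOP_SETTING = 1000     # last available step (assumed)
NOTICEABLE_GAP = 100   # smallest difference in damage the agent registers (assumed)


def prefers(a: int, b: int) -> bool:
    """True if the agent prefers being at step `a` to being at step `b`."""
    if a == b:
        return False
    if abs(a - b) < NOTICEABLE_GAP:
        # The difference in damage is negligible, so the extra reward
        # that comes with each further step decides the matter.
        return a > b
    # The difference in damage is significant, and the agent
    # (by assumption) cares more about that than about the rewards.
    return a < b


def advance_while_tempted(start: int = 0) -> int:
    """Follow pairwise preferences one step at a time and report where one ends up."""
    step = start
    while step < TOP_SETTING and prefers(step + 1, step):
        step += 1
    return step
```

Here every single step is preferred to its predecessor, so `advance_while_tempted()` returns 1000, yet `prefers(0, 1000)` is true: the agent would rather be back where she started. The preferences are intransitive, and following them step by step leads to an outcome the agent finds unacceptable.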

4. Concluding Remarks

When one performs a series of actions that do not serve one's concerns well, it is natural to feel regret and frustration. Why, it might be wondered, is one doing so badly by oneself? Self-loathing, compulsion, or simple ignorance might in some cases explain the situation; but, oftentimes, none of these things seems to be at the root of the problem. For, in many cases, one's steps along a disadvantageous course seem voluntary, motivated by the prospect of some benefit, and performed in light of a correct understanding of the consequences of each step taken. As we have seen, dynamic choice theory makes it clear how such cases are possible.

Although an agent with a dynamic choice problem can often be described as insufficiently resolute, she is normally guided by her preferences or her evaluation of the options she faces. As such, she is not, in general, properly described as simply out of control. Still, the control she exhibits is inadequate with respect to the task of effectively governing her (temporally-extended) self. So her problem is, at least in part, a problem of self-governance.

Bibliography

- Ainslie, George, 1999. “The Dangers of Willpower,” in Getting Hooked, eds. Jon Elster and Ole-Jørgen Skog. Cambridge: Cambridge University Press.

- –––, 2001. Breakdown of Will. Cambridge: Cambridge University Press.

- Andreou, Chrisoula, 2005a. “Incommensurable Alternatives and Rational Choice,” Ratio, 18/3: 249-61.

- –––, 2005b. “Going from Bad (or Not So Bad) to Worse: On Harmful Addictions and Habits,” American Philosophical Quarterly, 42/4: 323-31.

- –––, 2006. “Environmental Damage and the Puzzle of the Self-Torturer,” Philosophy & Public Affairs, 34/1: 95-108.

- –––, 2007. “There Are Preferences and Then There Are Preferences,” in Economics and the Mind, eds. Barbara Montero and Mark D. White. New York: Routledge.

- Bratman, Michael, 1983. “Taking Plans Seriously,” Social Theory and Practice, 9: 271-287.

- –––, 1987. Intentions, Plans, and Practical Reason. Cambridge: Harvard University Press.

- –––, 1999. “Toxin, Temptation, and the Stability of Intention,” in Faces of Intention. Cambridge: Cambridge University Press.

- –––, 2006. “Temptation Revisited,” in Structures of Agency. Oxford: Oxford University Press.

- Broome, John, 2000. “Incommensurable Values,” in Well-Being and Morality: Essays in Honour of James Griffin, eds. Roger Crisp and Brad Hooker. Oxford: Oxford University Press.

- –––, 2001. “Are Intentions Reasons? And How Should We Cope with Incommensurable Values?” in Practical Rationality and Preference, eds. Christopher W. Morris and Arthur Ripstein. Cambridge: Cambridge University Press.

- Chang, Ruth (ed), 1997. Incommensurability, Incomparability, and Practical Reason. Cambridge: Harvard University Press.

- Davidson, Donald, McKinsey, J. and Suppes, Patrick, 1955. “Outlines of a Formal Theory of Value,” Philosophy of Science, 22: 140-60.

- Elster, Jon, 1984. Ulysses and the Sirens. Cambridge: Cambridge University Press.

- –––, 2000. Ulysses Unbound. Cambridge: Cambridge University Press.

- Elster, Jon and Ole-Jørgen Skog (eds), 1999. Getting Hooked. Cambridge: Cambridge University Press.

- Gauthier, David, 1986. Morals by Agreement. Oxford: Clarendon Press.

- –––, 1994. “Assure and Threaten,” Ethics, 104/4: 690-716.

- Kavka, Gregory S., 1983. “The Toxin Puzzle,” Analysis, 43: 33-6.

- Loewenstein, George and Jon Elster (eds), 1992. Choice Over Time. New York: Russell Sage Foundation.

- Loewenstein, George, Daniel Read, and Roy Baumeister (eds), 2003. Time and Decision. New York: Russell Sage Foundation.

- McClennen, Edward, 1990. Rationality and Dynamic Choice. Cambridge: Cambridge University Press.

- –––, 1997. “Pragmatic Rationality and Rules,” Philosophy & Public Affairs, 26/3: 210-58.

- Mele, Alfred, 2004. “Motivated Irrationality,” in The Oxford Handbook of Rationality. Oxford: Oxford University Press.

- Nozick, Robert, 1993. The Nature of Rationality. Princeton: Princeton University Press.

- Parfit, Derek, 1984. Reasons and Persons. Oxford: Clarendon Press.

- Quinn, Warren, 1993. “The Puzzle of the Self-Torturer,” in Morality and Action. Cambridge: Cambridge University Press.

- Ramsey, Frank P., 1926. “Truth and Probability,” in The Foundations of Mathematics and other Logical Essays, ed. R. B. Braithwaite. London: Routledge & Kegan Paul, 1931.

- Raz, Joseph, 1986. The Morality of Freedom. Oxford: Clarendon Press.

- –––, 1997. “Incommensurability and Agency,” in Incommensurability, Incomparability, and Practical Reason, ed. Ruth Chang. Cambridge: Harvard University Press.

- Schelling, Thomas C., 1960. The Strategy of Conflict. Cambridge: Harvard University Press.

- Tversky, Amos, 1969. “Intransitivity of Preferences,” Psychological Review, 76: 31-48.

Other Internet Resources

[Please contact the author with suggestions.]

Related Entries

economics, philosophy of | practical reason | value: pluralism