Economics and Economic Justice

Distributive justice is often considered to lie outside the scope of economics, but there is in fact an important literature in economics that addresses normative issues in social and economic justice. A variety of economic theories and approaches provide many insights into these matters. Presented below are the theory of inequality and poverty measurement, welfare economics, the theory of social choice, the theory of bargaining and of cooperative games, and the theory of fair allocation. There has been a good deal of cross-fertilization between these different branches of normative economics and philosophical theories of justice, and many examples of such mutual influences are discussed in this article.

- 1. Economics and Ethics

- 2. Inequality and Poverty Measurement

- 3. Welfare Economics

- 4. Social Choice

- 5. Bargaining and Cooperative Games

- 6. Fair allocation

- 7. Related topics

- Bibliography

- Other Internet Resources

- Related Entries

1. Economics and Ethics

The role of ethics in economic theorizing is still a debated issue. In spite of the reluctance of many economists to view normative issues as part and parcel of their discipline, normative economics now represents an impressive body of literature. One may, however, wonder whether normative economics should not also be considered a part of political philosophy.

1.1 Positive vs. Normative Economics

In the first half of the twentieth century, most leading economists (Pigou, Hicks, Kaldor, Samuelson, Arrow, etc.) devoted a significant part of their research effort to normative issues, notably the definition of criteria for the evaluation of public policies. The situation is very different nowadays. “Economists do not devote a great deal of time to investigating the values on which their analyses are based. Welfare economics is not a subject which every present-day student of economics is expected to study”, writes Atkinson (2001, p. 195), who regrets “the strange disappearance of welfare economics”. Normative economics itself may be partly to blame for this state of affairs, in view of its repeated failure to provide conclusive results and its long-lasting focus on impossibility theorems (see § 4.1). But there has also been a persistent ambiguity about the status of normative propositions in economics. The subject matter of economics and its close relation to policy advice make it virtually impossible to avoid entanglement with value judgments. Nonetheless, the desire to separate positive statements from normative statements has often turned into the illusion that economics could be a science only by shunning the latter. Robbins (1932) was influential in this positivist move, in spite of a late clarification (Robbins 1981) that his intention was not to disparage normative issues, but only to clarify the normative status of (useful and necessary) interpersonal comparisons of welfare. It is worth emphasizing that many results in normative economics are mathematical theorems with a primary analytical function. Endowing them with normative content may be confusing, because they are most useful in clarifying ethical values and do not by themselves imply that these values must be endorsed.
“It is a legitimate exercise of economic analysis to examine the consequences of various value judgments, whether or not they are shared by the theorist.” (Samuelson 1947, p. 220) The role of ethical judgments in economics has recently received valuable scrutiny in Sen (1987), Hausman and McPherson (1996) and Mongin (2001b).

1.2 Normative Economics and Political Philosophy

There have been many mutual influences between normative economics and political philosophy. In particular, Rawls' difference principle (Rawls 1971) has been instrumental in making economic analysis of redistributive policies pay some attention to the maximin criterion, which puts absolute priority on the worst-off, and not only to sum-utilitarianism. (It has taken more time for economists to realize that Rawls' difference principle applies to primary goods, not utilities.) Conversely, many concepts used by political philosophers come from various branches of normative economics (see below).

There are, however, differences in focus and in methodology. Political philosophy tends to focus on the general issue of social justice, whereas normative economics also covers microeconomic issues of resource allocation and the evaluation of public policies in an unjust society. Political philosophy focuses on arguments and basic principles, whereas normative economics is more concerned with the effective ranking of social states than with the arguments underlying a given ranking. The difference is slight in this respect, since the axiomatic analysis in normative economics may be interpreted as performing not only a logical decomposition of a given ranking or principle, but also a clarification of the underlying basic principles or arguments. But consider, for instance, the “leveling-down objection” (Parfit 1995), which states that egalitarianism is wrong because it considers that there is something good in achieving equality by leveling down (even when the egalitarian ranking says that, all things considered, leveling down is bad). This kind of argument has to do with the reasons underlying a social judgment, not with the content of the all-things-considered judgment itself. It is hard to see whether and how the leveling-down objection could be incorporated into the models of normative economics. A final difference between normative economics and political philosophy lies in their conceptual tools. Normative economics uses the formal apparatus of economics, which provides powerful means to derive non-intuitive conclusions from simple arguments, although it also deprives the analyst of the possibility of exploring issues that are hard to formalize.

There are now several general surveys of normative economics, some of which also cover the intersection with political philosophy: Arrow, Sen and Suzumura (1997, 2002), Fleurbaey (1996), Hausman and McPherson (2006), Kolm (1996), Moulin (1988, 1995, 1998), Roemer (1996), Young (1994).

2. Inequality and Poverty Measurement

Although the development of the theory of inequality and poverty measurement is fairly recent, it makes sense to present it first, because it focuses on the simplest context of evaluation of social situations, namely, the context in which there is a well-defined measure of individual situations, amenable to all kinds of interpersonal comparisons. The traditional focus of this theory on income inequality explains this rather optimistic assumption about the evaluation of individual well-being. A good deal of the rest of normative economics may be seen as devoted to grappling with the difficulties that arise once one recognizes that individual well-being is essentially multi-dimensional and not easily synthesized in a single measure.

There are now excellent surveys of this theory: Chakravarty (1990), Cowell (2000), Dutta (2002), Lambert (1989), Sen and Foster (1997), Silber (1999).

2.1 Indices of Inequality and Poverty

The study of inequality and poverty indices started from a statistical, pragmatic perspective, with such indices as the Gini index of inequality or the poverty head count. Recent research has provided two valuable insights. First, it is possible to relate inequality indices to social welfare functions, so as to give inequality indices a more transparent ethical content. The idea is that an inequality index should not simply measure dispersion in a descriptive way, but would gain in relevance if it measured the harm done to social welfare by inequality. There is a simple method to derive an inequality index from a social welfare function, due to Kolm (1969) and popularized by Atkinson (1970) and Sen (1973). Consider a social welfare function which is defined on distributions of income and is symmetrical (i.e., permuting the incomes of two individuals leaves social welfare unchanged). For any given unequal distribution of income, one may compute the egalitarian distribution of income which would yield the same social welfare as the unequal distribution. This is called the “equal-equivalent” distribution. If the social welfare function is averse to inequality, the total amount of income in the equal-equivalent distribution is less than in the unequal distribution. In other words, the social welfare function condones some sacrifice of total income in order to reach equality. This drop in income, measured as a proportion of the initial total income, may serve as a valuable index of inequality. This index may also be used in a picturesque decomposition of social welfare. Indeed, an ordinally equivalent measure of social welfare is then total income (or average income, which makes no difference when the population is fixed) times one minus the inequality index.
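The equal-equivalent construction can be sketched in a few lines of Python. The sketch assumes, purely for illustration, the constant-elasticity form popularized by Atkinson (1970): social welfare is the sum over individuals of y^(1-ε)/(1-ε) (the sum of log y when ε = 1), where ε ≥ 0 is an inequality-aversion parameter chosen by the user. The function names are hypothetical.

```python
import math
from typing import Sequence


def equal_equivalent(incomes: Sequence[float], epsilon: float) -> float:
    """Income that, given to everyone, yields the same social welfare as `incomes`.

    Assumes an additive, symmetric social welfare function whose terms have
    constant relative inequality aversion `epsilon` >= 0 (Atkinson's form).
    """
    if not incomes or any(y <= 0 for y in incomes):
        raise ValueError("incomes must be a non-empty list of positive numbers")
    n = len(incomes)
    if epsilon == 1:
        # Limit case: welfare is the sum of log incomes, so the
        # equal-equivalent income is the geometric mean.
        return math.exp(sum(math.log(y) for y in incomes) / n)
    power = 1.0 - epsilon
    return (sum(y ** power for y in incomes) / n) ** (1.0 / power)


def atkinson_index(incomes: Sequence[float], epsilon: float) -> float:
    """Proportional drop in total income that social welfare would accept to reach equality."""
    mean = sum(incomes) / len(incomes)
    return 1.0 - equal_equivalent(incomes, epsilon) / mean


incomes = [1.0, 4.0]
for eps in (0.0, 1.0, 2.0):
    index = atkinson_index(incomes, eps)
    mean = sum(incomes) / len(incomes)
    # Ordinally equivalent social welfare: mean income times (1 - index),
    # which equals the equal-equivalent income.
    print(eps, round(index, 4), round(mean * (1 - index), 4))
```

For incomes (1, 4) the index is 0 with ε = 0, 0.2 with ε = 1 and 0.36 with ε = 2, and mean income times one minus the index returns the equal-equivalent income, which is the decomposition mentioned above. The same numbers would also come out of a utilitarian sum of identical utility functions y^(1-ε)/(1-ε), which is why the interpretation of ε matters, as the next paragraph explains.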

This method of construction of an index of inequality, often referred to as the ethical approach to inequality measurement, is most useful when the argument of the social welfare function, and the object of the measurement of inequality, is the distribution of individual well-being (which may or may not be measured by income). Then the social welfare function is indeed symmetrical (by requirement of impartiality) and its aversion to inequality reflects its underlying ethical principles. In other contexts, the method is more problematic. Consider the case when social welfare depends on individual well-being, and individual well-being depends on income with some individual variability due to differential needs. Then income equality may no longer be a valuable goal, because needy individuals may require more income than others. Using this method to construct an index of inequality of well-being is fine, but using it to construct an index of inequality of incomes would be strange, although it would immediately reveal that income inequality is not always bad (when it compensates for unequal needs). Now consider the case when social welfare is the utilitarian sum of individual utilities, and all individuals have the same strictly concave utility function (strict concavity means that it displays decreasing marginal utility). Then using this method to construct an index of income inequality is amenable to a different interpretation. The index then does not reflect a principled aversion to inequality in the social welfare function, since the social welfare function has no aversion to inequality of utilities. It only reflects an empirical fact, the degree of concavity of individual utility functions. To call this the ethical approach, in this context, seems a misnomer.

The second valuable contribution of recent research in this field is the development of an alternative ethical approach through the axiomatic study of the properties of indices. The main ethical axioms deal with transfers. The Pigou-Dalton principle of transfers says that inequality decreases (or social welfare increases) when an even transfer is made from a richer to a poorer individual without reversing their pairwise ranking (although this may alter their ranking relative to other individuals). Since this condition is about even transfers, it is quite weak, and other axioms have been proposed in order to strengthen the priority of the worst-off. The principle of diminishing transfers (Kolm 1976) says that a Pigou-Dalton transfer has a greater impact the lower it occurs in the distribution. The principle of proportional transfers (Fleurbaey and Michel 2001) says that an inefficient transfer, in which what the donor gives and what the beneficiary receives are proportional to their initial positions, increases social welfare. Similar transfer axioms have been adapted to the measurement of poverty. For instance, Sen (1976) proposed the condition that poverty increases when an even transfer is made from someone who is below the poverty line to a richer individual (below or above the line). The other axioms used in this analysis usually have a less obvious ethical appeal, and relate to decomposability of indices, scale invariance and the like. Characterization results have been obtained, which identify classes of indices satisfying particular lists of axioms. The two ethical approaches may be combined, when one takes as an axiom the condition that the index be derived from a social welfare function with particular features.
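The transfer axioms can be checked numerically. The sketch below uses the Gini index (computed as the mean absolute difference over all ordered pairs divided by twice the mean) to illustrate the Pigou-Dalton principle. For Sen's poverty condition it uses the Foster-Greer-Thorbecke family of poverty indices, a standard family that the text does not discuss and that is swapped in here for illustration (its parameter 0 gives the head-count ratio). Incomes, poverty line and function names are hypothetical.

```python
from typing import List, Sequence


def gini(incomes: Sequence[float]) -> float:
    """Gini index: mean absolute difference over all ordered pairs, divided by twice the mean."""
    n = len(incomes)
    mean = sum(incomes) / n
    total_diff = sum(abs(a - b) for a in incomes for b in incomes)
    return total_diff / (2 * n * n * mean)


def transfer(incomes: Sequence[float], donor: int, recipient: int, amount: float) -> List[float]:
    """Move `amount` from donor to recipient (an even transfer) and return the new distribution."""
    result = list(incomes)
    result[donor] -= amount
    result[recipient] += amount
    return result


def is_pigou_dalton(before: Sequence[float], after: Sequence[float], donor: int, recipient: int) -> bool:
    """Richer-to-poorer transfer that does not reverse the pair's ranking."""
    return before[donor] > before[recipient] and after[donor] >= after[recipient]


def fgt(incomes: Sequence[float], poverty_line: float, alpha: float) -> float:
    """Foster-Greer-Thorbecke index: average of normalized poverty gaps raised to `alpha`."""
    gaps = [(poverty_line - y) / poverty_line for y in incomes if y < poverty_line]
    return sum(g ** alpha for g in gaps) / len(incomes)


before = [1.0, 2.0, 3.0, 4.0]
after = transfer(before, donor=3, recipient=0, amount=0.5)
assert is_pigou_dalton(before, after, donor=3, recipient=0)
print(gini(before), gini(after))  # 0.25 then 0.175: inequality falls

# Sen's condition: a transfer from a poor person to a richer one should raise poverty.
line = 10.0
poor_before = [2.0, 9.5, 20.0]
poor_after = transfer(poor_before, donor=0, recipient=1, amount=1.0)
print(fgt(poor_before, line, 0), fgt(poor_after, line, 0))  # head count: 2/3 then 1/3
print(fgt(poor_before, line, 2), fgt(poor_after, line, 2))  # squared-gap index rises
```

The head count falls because the recipient crosses the poverty line, which is exactly a violation of Sen's condition, whereas the squared-gap index (α = 2) registers an increase in poverty.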

2.2 The Dominance Approach

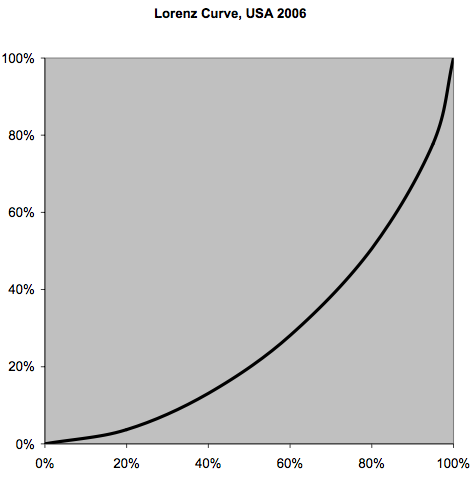

The multiplicity of indices, even when a restriction to special sub-classes may be justified by axiomatic characterization, raises a serious problem for applications. How can one make sure that a distribution is more or less unequal, or has more or less poverty, than another without checking an infinite number of indices? Although this may look like a purely practical issue, it has given rise to a broad range of deep results, relating the statistical concept of stochastic dominance to general properties of social welfare functions and to the satisfaction of transfer axioms by inequality and poverty indices. This approach, in particular, justifies the widespread use of Lorenz curves in the empirical studies of inequality. The Lorenz curve depicts the percentage of the total amount of whatever is measured, income, wealth or well-being, possessed by any given percentage of the poorest among the population. For instance, according to the Census Bureau, in 2006 the poorest 20%'s share of total income was 3.7%, the poorest 40%'s share was 13.1%, the poorest 60%'s share was 28.1%, the poorest 80%'s share was 50.6%, while the top 5%'s share was 22.2%. This indicates that the Lorenz curve is approximately as in the following figure.
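Lorenz curves and the dominance test can be sketched as follows. A classical result (Atkinson 1970) is that, for distributions with the same mean, one Lorenz curve lying nowhere below another is equivalent to at least as high social welfare under every symmetric, additively separable social welfare function with concave terms, so a single curve comparison replaces the check of infinitely many indices. The sketch assumes equal-sized populations so that the curves can be compared on a common grid; the function names are hypothetical, and the census points are simply the shares quoted above.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (population share, income share)


def lorenz_points(incomes: Sequence[float]) -> List[Point]:
    """Lorenz curve: share of total income held by the poorest k/n of the population."""
    ordered = sorted(incomes)
    total = sum(ordered)
    n = len(ordered)
    points, cumulative = [(0.0, 0.0)], 0.0
    for k, y in enumerate(ordered, start=1):
        cumulative += y
        points.append((k / n, cumulative / total))
    return points


def lorenz_dominates(a: Sequence[float], b: Sequence[float], tol: float = 1e-12) -> bool:
    """True if a's Lorenz curve is nowhere below b's and somewhere strictly above it."""
    if len(a) != len(b):
        raise ValueError("this sketch compares equal-sized populations only")
    la, lb = lorenz_points(a), lorenz_points(b)
    never_below = all(pa[1] >= pb[1] - tol for pa, pb in zip(la, lb))
    somewhere_above = any(pa[1] > pb[1] + tol for pa, pb in zip(la, lb))
    return never_below and somewhere_above


def gini_from_points(points: Sequence[Point]) -> float:
    """Gini = 1 - 2 * (area under the Lorenz curve), with the trapezoid rule between points."""
    area = sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))
    return 1 - 2 * area


# 2006 Census Bureau shares quoted above; the top 5% held 22.2%, so the poorest 95% held 77.8%.
census_2006 = [(0.0, 0.0), (0.2, 0.037), (0.4, 0.131), (0.6, 0.281),
               (0.8, 0.506), (0.95, 0.778), (1.0, 1.0)]
print(round(gini_from_points(census_2006), 3))

print(lorenz_dominates([2, 3, 3, 4], [1, 2, 3, 6]))  # True: same mean, less spread
```

Linear interpolation between the quoted census points gives a Gini coefficient of roughly 0.44; because the chords lie above a convex Lorenz curve, this is a lower bound on the Gini coefficient of the underlying distribution.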

2.3 Equality, Priority, Sufficiency

Philosophical interest in the measurement of inequality has recently risen (Temkin 1993). Most of this philosophical literature, however, tends to focus on defining the right foundations for an aversion to inequality. In particular, Parfit (1995) proposes to give priority to the worse-off not because of their relative position compared to the better-off, but because and to the extent that they are badly off. This probably corresponds to defining social welfare by an additively separable social welfare function, with diminishing marginal social utility (a social welfare function is additively separable when it is the sum of separate terms, each of which depends only on one individual's well-being). Interestingly, if egalitarianism is defined in opposition to this “priority view” by the feature that it relies on judgments of relative positions, this means that egalitarian values cannot be correctly represented by a separable social welfare function. This seems to raise the ethical stakes concerning properties of decomposability of indices or separability of social welfare functions, which are usually considered in economics merely as convenient conditions simplifying the functional forms (although separability may also be justified by the subsidiarity principle, according to which unconcerned individuals need not have a say in a decision). The content and importance of the distinction between egalitarianism and prioritarianism remain a matter of debate (see, among many others, Tungodden 2003, and the contributions in Holtug and Lippert-Rasmussen 2007). It is also interesting to notice that philosophers are often comfortable working with the notion of social welfare (or social good, or inequality) as a numerical quantity with cardinal meaning, whereas economists typically restrict their interpretation of social welfare or inequality to a purely ordinal ranking of social states.
Besides egalitarian and prioritarian positions, one must also mention the “sufficiency view”, defended e.g. by Frankfurt (1987), who argues that priority should be given only to those below a certain threshold. One may consider that this view supports the idea that poverty indices might summarize everything that is relevant about social welfare.
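The role of separability can be illustrated with two hypothetical distributions for persons 1 and 2, while a third, unconcerned person has the same level c in both. The prioritarian function is a sum of concave terms (the square root is an arbitrary choice); the comparison function, mean times one minus the Gini index, depends on relative positions; a sufficiency measure counting shortfalls below a threshold is included for comparison. All functions and numbers are illustrative assumptions.

```python
import math
from typing import Sequence


def prioritarian(levels: Sequence[float]) -> float:
    """Additively separable: each term depends on one person's level only.
    The square root is a hypothetical concave transform (diminishing priority)."""
    return sum(math.sqrt(u) for u in levels)


def gini_welfare(levels: Sequence[float]) -> float:
    """Mean times (1 - Gini), which equals the average of min(u_i, u_j) over all
    ordered pairs; it depends on relative positions, so it is not separable."""
    n = len(levels)
    return sum(min(a, b) for a in levels for b in levels) / n ** 2


def sufficiency_shortfall(levels: Sequence[float], threshold: float) -> float:
    """Sufficiency view: only shortfalls below a (hypothetical) threshold count."""
    return sum(max(0.0, threshold - u) for u in levels)


# Persons 1 and 2 differ between a and b; person 3 is unconcerned, at level c in both.
for c in (0, 10):
    a, b = [1, 5, c], [2, 3, c]
    print(c, prioritarian(a) > prioritarian(b), gini_welfare(a) > gini_welfare(b))
```

The prioritarian verdict favors the first distribution whatever the unconcerned person's level, whereas the Gini-based verdict reverses when c moves from 0 to 10: a ranking based on relative positions cannot ignore unconcerned individuals, which is what separability (and the subsidiarity principle) would require.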

3. Welfare Economics

Welfare economics is the traditional generic label of normative economics, but, in spite of substantial variations between authors, it now tends to be associated with a particular subcontinent of this domain, maybe as a result of the development of “non-welfarist” approaches and of approaches with a broader scope, such as the theory of social choice.

Surveys of welfare economics in its restricted sense can be found in Graaff (1957), Boadway and Bruce (1984), Chipman and Moore (1978), Samuelson (1981).

3.1 Old and New Welfare Economics

The proponents of a “new” welfare economics (Hicks, Kaldor, Scitovsky) distanced themselves from their predecessors (Marshall, Pigou, Lerner) by abandoning the idea of making social welfare judgments on the basis of interpersonal comparisons of utility. Their problem was then that, in the absence of any kind of interpersonal comparisons, the only principle on which to ground their judgments was the Pareto principle, according to which a situation is a global improvement if it is an improvement for every member of the concerned population (there are variants of this principle depending on how individual improvement is defined, in terms of preferences or some notion of well-being, and depending on whether it is a strict improvement for all members or some of them stay put). Since most changes due to public policy hurt some subgroups for the benefit of others, the Pareto principle generally remains silent. The need for a less restrictive criterion of evaluation led Kaldor (1939) and Hicks (1939) to propose an extension of the Pareto principle through compensation tests. According to Kaldor's criterion, a situation is a global improvement if ex post the gainers could compensate the losers. Under Hicks' criterion, the condition is that ex ante the losers could not compensate the gainers (a change from situation A to situation B is approved by Hicks' criterion if the change from B to A is not approved by Kaldor's criterion). These criteria are much less partial than the Pareto principle, but they remain partial (that is, they fail to rank many pairs of alternatives). This is not, however, their main drawback. They have been criticized for two basic flaws. First, for plausible definitions of how the compensation transfers could be computed, these criteria may lead to inconsistent social judgments: the same criterion may simultaneously declare that a situation A is better than another situation B, and conversely.
Scitovsky (1941) proposed to combine the two criteria, but this does not prevent the occurrence of intransitive social judgments. Second, the compensation tests have a dubious ethical value. If the compensatory transfers are performed in Kaldor's criterion, then the Pareto criterion alone suffices, since after compensation everybody gains. If the compensatory transfers are not performed, the losers remain losers and the mere possibility of compensation is a meager consolation to them. Such criteria are then typically biased in favor of the rich, whose willingness to pay is generally high (i.e., they are willing to give a lot in order to obtain whatever they want, and therefore they can easily compensate the losers; when they do not actually pay the compensation, they can have their cake and eat it too).
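The inconsistency of Kaldor's criterion can be reproduced with a two-person, two-good example. The linear preferences and the two states below are hypothetical, chosen only because they make the utility possibility frontiers of the two aggregate bundles cross; the grid search over redistributions is a brute-force sketch, not a general algorithm.

```python
from itertools import product
from typing import Tuple

Bundle = Tuple[float, float]        # (good x, good y)
Allocation = Tuple[Bundle, Bundle]  # (person 1's bundle, person 2's bundle)


def utilities(alloc: Allocation) -> Tuple[float, float]:
    """Hypothetical linear preferences: person 1 values good x twice as much as good y,
    person 2 values good y twice as much as good x."""
    (x1, y1), (x2, y2) = alloc
    return 2 * x1 + y1, x2 + 2 * y2


def kaldor_approves(old: Allocation, new: Allocation, steps: int = 100) -> bool:
    """Kaldor's test for old -> new: could the aggregate bundle of `new` be redistributed
    so that both persons are strictly better off than in `old`? Checked on a grid."""
    base = utilities(old)
    total_x = new[0][0] + new[1][0]
    total_y = new[0][1] + new[1][1]
    for i, j in product(range(steps + 1), repeat=2):
        t, s = i / steps, j / steps  # shares of goods x and y going to person 1
        candidate = ((t * total_x, s * total_y), ((1 - t) * total_x, (1 - s) * total_y))
        if all(u > v for u, v in zip(utilities(candidate), base)):
            return True
    return False


def hicks_approves(old: Allocation, new: Allocation) -> bool:
    """Hicks' test for old -> new: the reverse move new -> old fails Kaldor's test."""
    return not kaldor_approves(new, old)


state_a = ((0.0, 0.0), (1.0, 0.0))  # one unit of good x, all held by person 2
state_b = ((0.0, 1.0), (0.0, 0.0))  # one unit of good y, all held by person 1

print(kaldor_approves(state_a, state_b), kaldor_approves(state_b, state_a))  # True True
print(hicks_approves(state_a, state_b), hicks_approves(state_b, state_a))    # False False
```

Kaldor's test approves both the move from A to B and the move back, which is the inconsistency described above; Hicks' test, defined from Kaldor's as in the text, approves neither move in this example.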

Cost-benefit analysis has more recently developed criteria which are very similar and rely on the summation of willingness to pay across the population. In spite of repeated criticism by specialists (Arrow 1951, Boadway and Bruce 1984, Sen 1979, Blackorby and Donaldson 1990), practitioners of cost-benefit analysis and some branches of economic theory (industrial organization, international economics) still commonly rely on such criteria. More sophisticated variants of cost-benefit analysis (Layard and Glaister 1994, Drèze and Stern 1987) avoid these problems by relying on weighted sums of willingness to pay or even on consistent social welfare functions. Many specialists of public economics (e.g. Stiglitz 1987) have considered that the Pareto criterion was the core ethical principle on which economists should buttress their social evaluations, denouncing all sources of inefficiency in social organizations and public policies.
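The difference between summing willingness to pay and weighting it can be shown in a few lines. The incomes, the amounts and the inverse-income weighting rule (with a hypothetical elasticity parameter) are illustrative assumptions, not the specific weights proposed by Layard and Glaister (1994) or Drèze and Stern (1987).

```python
from typing import Sequence


def unweighted_net_benefit(wtp: Sequence[float]) -> float:
    """Standard cost-benefit test: sum of willingness to pay (negative for losers)."""
    return sum(wtp)


def weighted_net_benefit(wtp: Sequence[float], incomes: Sequence[float],
                         elasticity: float = 1.0) -> float:
    """Weighted variant: a unit of money counts more for poorer people.
    The weight (mean income / own income) ** elasticity is a hypothetical choice."""
    mean = sum(incomes) / len(incomes)
    return sum(w * (mean / y) ** elasticity for w, y in zip(wtp, incomes))


# Hypothetical project: a rich person gains, a poor person loses.
incomes = [100_000.0, 10_000.0]
wtp = [100.0, -60.0]  # the rich would pay 100 for it; the poor would need 60 in compensation

print(unweighted_net_benefit(wtp))         # 40.0: approved
print(weighted_net_benefit(wtp, incomes))  # negative: rejected
```

Setting the elasticity to 0 makes every weight equal to 1 and recovers the unweighted sum.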

A subfield of welfare economics focused on the possibility of making social welfare judgments on the basis of national income. An increase in national income may reflect an increase in social welfare under some stringent assumptions, most conspicuously the assumption that the distribution of incomes is socially optimal. Although very restrictive, this kind of result has had a lasting influence, in theory (international economics) and in practice (the salience of GDP growth in policy discussions). There is a school of social indicators (see the Social Indicators Research journal) which fights this influence, and the number of alternative indicators (of happiness, genuine progress, social health, economic well-being, etc.) has soared in recent decades (see e.g. Miringoff and Miringoff 1999, Frey and Stutzer 2002, Kahneman et al. 2004 and Gadrey and Jany-Catrice 2006).

Bergson (1938) and Samuelson (1947, 1981) occupy a special position, which may be described as a third way between old and new welfare economics. From the former, they retain the goal of making complete and consistent social welfare judgments with the help of well-defined social welfare functions. The formula $W(U_1(x),\dots,U_n(x))$ is often called a “Bergson-Samuelson social welfare function” ($x$ is the social state; $U_i(x)$, for $i=1,\dots,n$, is individual $i$'s utility in this state). With the latter, however, they share the idea that only ordinal non-comparable information should be retained about individual preferences. This may seem contradictory with the formula of the Bergson-Samuelson social welfare function, in which individual utility functions appear, and there has been a controversy about the possibility of constructing a Bergson-Samuelson social welfare function on the sole basis of individual ordinal non-comparable preferences (see in particular Arrow (1951), Kemp and Ng (1976), Samuelson (1977, 1987), Sen (1986) and a recent discussion in Fleurbaey and Mongin (2005)). Samuelson and his defenders are commonly considered to have lost the contest, but it may also be argued that their opponents have misunderstood them. Indeed, individual utility functions in the $W(U_1(x),\dots,U_n(x))$ formula are, according to Bergson and Samuelson, to be constructed out of individual preference orderings, on the basis of fairness principles. The logical possibility of such a construction has been repeatedly demonstrated by Samuelson (1977), Pazner (1979) and Mayston (1974, 1982). The fact that such a construction does not require any other information than ordinal non-comparable preferences is indisputable. Bergson and Samuelson acknowledged the need for interpersonal comparisons, but considered that these could be done, in an ethically relevant way, on the sole basis of non-comparable preference orderings.
They failed, however, to be more specific about the fairness principles on which the construction could be justified. The theory of fair allocation (see § 6) may fill the gap.
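One way to see how ordinal, non-comparable preferences can yield comparable indices is a "ray" construction used in the theory of fair allocation: each person's situation is measured by the multiple of a common reference bundle that she finds exactly as good as her own bundle. This is offered as one hypothetical instance of a fairness principle, not as the construction Bergson and Samuelson had in mind; the reference bundle, the Cobb-Douglas preferences and the use of maximin over the resulting indices are assumptions of the sketch.

```python
import math
from typing import Callable, Tuple

Bundle = Tuple[float, float]
Utility = Callable[[Bundle], float]


def ray_index(u: Utility, bundle: Bundle, reference: Bundle, upper: float = 1e6) -> float:
    """Return lam such that the person is indifferent between `bundle` and lam * reference.

    Found by bisection; assumes u is continuous and increasing along the reference ray
    and that the answer lies in (0, upper).
    """
    target = u(bundle)
    low, high = 0.0, upper
    for _ in range(200):
        mid = (low + high) / 2
        if u((mid * reference[0], mid * reference[1])) < target:
            low = mid
        else:
            high = mid
    return (low + high) / 2


def cobb_douglas(a: float) -> Utility:
    """Hypothetical preferences over two goods, represented by x ** a * y ** (1 - a)."""
    return lambda b: b[0] ** a * b[1] ** (1 - a)


def log_of(u: Utility) -> Utility:
    """An increasing transform: represents exactly the same preferences as u."""
    return lambda b: math.log(u(b))


reference = (1.0, 1.0)  # hypothetical reference bundle fixed by the fairness principle
people = [cobb_douglas(0.5), cobb_douglas(0.8)]
bundles = [(4.0, 1.0), (1.0, 4.0)]

indices = [ray_index(u, b, reference) for u, b in zip(people, bundles)]
transformed = [ray_index(log_of(u), b, reference) for u, b in zip(people, bundles)]
print(indices, transformed, min(indices))  # maximin over the constructed indices
```

Because each index depends only on the person's indifference curve through her own bundle, replacing her utility function by its logarithm leaves it unchanged, which is the sense in which no information beyond ordinal non-comparable preferences is used.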

3.2 Harsanyi's Theorems

Harsanyi may be viewed as the last representative of the old welfare economics, to which he made a major contribution in the form of two arguments. The first one is often called the “impartial observer argument”. An impartial observer should decide for society as if she had an equal chance of becoming anyone in the considered population. This is a risky situation in which the standard decision criterion is expected utility. The computation of expected utility, in this equal probability case, yields an arithmetic mean of the utilities that the observer would have if she became anyone in the population. Harsanyi (1953) considers this to be an argument in favor of utilitarianism. The obvious weakness of the argument, however, is that not all versions of utilitarianism would measure individual utility in a way that may be entered in the computation of the expected utility of the impartial observer. In other words, ask a utilitarian to compute social welfare, and ask an impartial observer to compute her expected utility. There is little reason to believe that they will come up with similar conclusions, even though both compute a sum or a mean. For instance, a very risk-averse impartial observer may come arbitrarily close to the maximin criterion.
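The point about risk aversion can be made concrete. Suppose, as a hypothetical assumption, that the observer's von Neumann-Morgenstern utility over well-being levels is exponential, v(w) = -exp(-r w) with r > 0. The certainty equivalent of her equal-chance lottery then moves from the arithmetic mean of the levels (r close to 0) toward the lowest level (large r), that is, from an averaging criterion toward maximin. The function name is hypothetical.

```python
import math
from typing import Sequence


def observer_certainty_equivalent(levels: Sequence[float], risk_aversion: float) -> float:
    """Certainty equivalent of an equal-chance lottery over the individual levels, for an
    observer with hypothetical exponential utility v(w) = -exp(-r * w), r > 0.

    Solves -exp(-r * ce) = mean(-exp(-r * w)); shifting by the minimum level
    avoids overflow when r is large.
    """
    r = risk_aversion
    low = min(levels)
    shifted = sum(math.exp(-r * (w - low)) for w in levels) / len(levels)
    return low - math.log(shifted) / r


levels = [1.0, 2.0, 3.0]
for r in (1e-6, 1.0, 10.0, 1000.0):
    print(r, round(observer_certainty_equivalent(levels, r), 4))
```

For levels (1, 2, 3) the certainty equivalent is close to the mean, 2, when r = 1e-6 and close to the minimum, 1, when r = 1000.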

This argument has triggered controversies, in particular with Rawls (1974), about the soundness of the maximin criterion in the original position, and with Sen (1977b). See Harsanyi (1976) and recent analyses in Weymark (1991) and Mongin (2001a). There is a related, but different, controversy about the consequences of the veil of ignorance in Dworkin's hypothetical insurance scheme (Dworkin 2000). Roemer (1985) argues that if individuals maximize their expected utility on the insurance market, they transfer income away from the states in which they have low marginal utility of income, toward the states in which it is high. If low marginal utility happens to be the consequence of some handicaps, then the hypothetical market will tax the disabled for the benefit of the others, a paradoxical but typical consequence of utilitarian policies. It is indeed well known that insurance markets have strange consequences when utilities are state-dependent (that is, when the utility of income is affected by random events). For a recent revival of this controversy, see Dworkin (2002), Fleurbaey (2002) and Roemer (2002a).
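Roemer's point can be checked in the simplest state-dependent case. The sketch assumes, hypothetically, log utility of income in the healthy state and k times log utility in the disabled state with 0 < k < 1 (so the handicap lowers the marginal utility of income), actuarially fair insurance, and the same expected income before and after insurance. The closed form follows from equating marginal utilities across states.

```python
def fair_insurance(mean_income: float, p_disabled: float, k: float) -> tuple:
    """Optimal actuarially fair insurance with hypothetical state-dependent utility:
    healthy state u_H(c) = log(c); disabled state u_D(c) = k * log(c), 0 < k < 1.

    Maximizing p * k * log(c_D) + (1 - p) * log(c_H) subject to
    p * c_D + (1 - p) * c_H = mean_income gives k / c_D = 1 / c_H, i.e. c_D = k * c_H.
    Returns (income if disabled, income if healthy).
    """
    c_healthy = mean_income / (1 - p_disabled + p_disabled * k)
    c_disabled = k * c_healthy
    return c_disabled, c_healthy


c_d, c_h = fair_insurance(mean_income=100.0, p_disabled=0.2, k=0.5)
print(round(c_d, 2), round(c_h, 2))
```

With an income of 100 in both states before insurance and a 20% chance of disability, the optimal contract leaves about 55.56 in the disabled state and about 111.11 in the healthy state: the disabled state is taxed. With k = 1 (no state dependence) both incomes stay at 100.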

Harsanyi's second argument, the “aggregation theorem”, is about a social planner who, facing risky prospects, maximizes expected social welfare and wants to respect individual preferences about prospects. Harsanyi (1955) shows that these two conditions imply that social welfare must be a weighted sum of individual utilities, and concludes that this is another argument in favor of utilitarianism. Recent evaluations of this argument and its consequences may be found in Broome (1991) and Weymark (1991). In particular, Broome uses the structure of this argument to conclude that social good must be computed as the sum of individual goods, although this does not preclude incorporating a good deal of inequality aversion in the measurement of individual good. Diamond (1967) raised a famous objection against the idea that expected utility is a good criterion for the social planner. This criterion implies that if the social planner is indifferent between the distributions of utilities, for two individuals, (1,0) and (0,1), then he must also be indifferent between these two distributions and an equal probability of getting either distribution. This is paradoxical, because the lottery seems better ex ante: it gives both individuals equal prospects. Broome (1991) raises another puzzle. An even better lottery would yield either (0,0) or (1,1) with equal probability. It is better because ex ante it gives individuals the same prospects as the previous lottery, and it is more egalitarian ex post. The problem is that it seems quite hard to construct a social criterion which ranks these four alternatives as suggested here. Defining social welfare under uncertainty is still a matter of bafflement. See Deschamps and Gevers (1979) and Ben Porath, Gilboa and Schmeidler (1997). Things are even more difficult when probabilities are subjective and individual beliefs may differ. Harsanyi's aggregation theorem then turns into an impossibility theorem. On this, see e.g. Mongin (1995) and Bradley (2005).
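The alternatives of Diamond's and Broome's examples can be compared under three criteria, using exact fractions. Expected utilitarian welfare is Harsanyi's planner; the ex ante and ex post maximin criteria are illustrative choices that are not taken from the text, and the sketch only shows that each criterion captures part of the intuitions, not that no criterion can capture all of them.

```python
from fractions import Fraction
from typing import List, Tuple

Lottery = List[Tuple[Fraction, Tuple[int, int]]]  # (probability, utilities of persons 1 and 2)

half = Fraction(1, 2)
sure_10: Lottery = [(Fraction(1), (1, 0))]           # the distribution (1,0) for sure
diamond: Lottery = [(half, (1, 0)), (half, (0, 1))]  # equal chances for each person
broome: Lottery = [(half, (0, 0)), (half, (1, 1))]   # same prospects, equal outcomes


def expected_sum(lottery: Lottery) -> Fraction:
    """Expected utilitarian social welfare."""
    return sum(p * sum(u) for p, u in lottery)


def ex_ante_maximin(lottery: Lottery) -> Fraction:
    """Worst individual prospect: minimum over persons of their expected utility."""
    expected = [sum(p * u[i] for p, u in lottery) for i in range(2)]
    return min(expected)


def ex_post_maximin(lottery: Lottery) -> Fraction:
    """Expected utility of whoever is worst off in each realized outcome."""
    return sum(p * min(u) for p, u in lottery)


for name, lot in [("sure (1,0)", sure_10), ("Diamond", diamond), ("Broome", broome)]:
    print(name, expected_sum(lot), ex_ante_maximin(lot), ex_post_maximin(lot))
```

Expected utilitarianism gives 1 to all three prospects (and to the sure prospect (0,1)), which is Diamond's objection; the ex ante criterion separates the two lotteries from the sure prospect but not from each other; the ex post criterion singles out Broome's lottery but puts Diamond's lottery on a par with the sure prospect.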

4. Social Choice

The theory of social choice originated in Arrow's failed attempt to systematize the Bergson-Samuelson approach (see Arrow 1983, p. 26). It developed into an immense literature, with ramifications into a variety of subfields and topics. The social choice framework is, potentially, so general that one may think of using it to unify normative economics. In a restrictive definition, however, social choice is considered to deal with the problem of synthesizing heterogeneous individual preferences into a consistent ranking. Sometimes an even more restrictive notion of “Arrovian social choice” is used to name works which faithfully adopt Arrow's particular axioms.

There are many surveys of social choice theory, in broad and restrictive senses: Arrow, Sen and Suzumura (1997, in particular chap. 3, 4, 7, 11, 15; 2002, in particular chap. 1, 2, 3, 4, 7, 10), Sen (1970, 1977a, 1986, 1999).

4.1 Arrow's Theorem

In an attempt to construct a consistent social ranking of a set of alternatives on the basis of individual preferences over this set, Arrow (1951) obtained: 1) an impossibility theorem; 2) a generalization of the framework of welfare economics, covering all collective decisions from political democracy and committee decisions to market allocation; 3) an axiomatic method which set a standard of rigor for any future endeavor.

The impossibility theorem roughly says that there is no general way to rank a given set of (more than two) alternatives on the basis of (at least two) individual preferences, if one wants to respect three conditions: (Weak Pareto) unanimous preferences are always respected (if everyone prefers A to B, then A is better than B); (Independence of Irrelevant Alternatives) any subset of two alternatives must be ranked on the sole basis of individual preferences over this subset; (No-Dictatorship) no individual is a dictator in the sense that his strict preferences are always obeyed by the ranking, no matter what they and the other individuals' preferences are. The impossibility holds when one wants to cover a great variety of possible profiles of individual preferences. When there is sufficient homogeneity among preferences, for instance when alternatives differ only in one dimension and individual preferences are based on the distance of alternatives to their preferred alternative along this dimension (think, for instance, of political options on the left-right spectrum), then consistent methods exist (the majority rule, in this example; Black 1958).
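Black's result for preferences based on distance along one dimension can be checked on a small example in which each voter prefers options closer to her ideal point on a left-right axis. The ideal points and options are hypothetical, the number of voters is odd, and equidistant voters are assumed to abstain.

```python
from itertools import permutations
from typing import Sequence


def majority_beats(a: float, b: float, ideals: Sequence[float]) -> bool:
    """a beats b if strictly more voters are closer to a than to b (equidistant voters abstain)."""
    for_a = sum(1 for i in ideals if abs(i - a) < abs(i - b))
    for_b = sum(1 for i in ideals if abs(i - b) < abs(i - a))
    return for_a > for_b


ideals = [0, 1, 3, 4, 4]  # hypothetical ideal points on a left-right axis
options = [0, 1, 2, 3, 4]
median = sorted(ideals)[len(ideals) // 2]

# The median ideal point beats every other option in pairwise majority votes.
print(all(majority_beats(median, o, ideals) for o in options if o != median))

# No majority cycle a > b > c > a exists among these options.
print(not any(majority_beats(a, b, ideals) and majority_beats(b, c, ideals)
              and majority_beats(c, a, ideals)
              for a, b, c in permutations(options, 3)))
```

The median ideal point, 3, wins every pairwise vote; options 2 and 4 tie, because the voter at 3 is equidistant from them, but no majority cycle appears.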

Arrow's result clearly extends the scope of analysis beyond the traditional focus of welfare economics, and nicely illuminates the difficulties of democratic voting procedures, such as the Condorcet paradox (the fact that majority rule may be intransitive). The analysis of voting procedures is a wide domain. For recent surveys, see e.g. Saari (2001) and Brams and Fishburn (2002). This analysis reveals a deep tension between rules based on the majority principle and rules which protect minorities by taking account of preferences in a more extended way (see Pattanaik 2002).
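The Condorcet paradox needs only three voters and three options. The profile below is the standard cyclic profile, chosen for illustration; with an odd number of voters holding strict rankings, no pairwise vote can tie. The function names are hypothetical.

```python
from itertools import combinations
from typing import List, Sequence

# Three voters, each ranking the options from best to worst.
profile: List[List[str]] = [["A", "B", "C"], ["B", "C", "A"], ["C", "A", "B"]]


def prefers(ranking: Sequence[str], x: str, y: str) -> bool:
    """True if this voter ranks x above y."""
    return ranking.index(x) < ranking.index(y)


def majority_winner(x: str, y: str, profile: Sequence[Sequence[str]]) -> str:
    """Winner of the pairwise majority vote between x and y."""
    votes_x = sum(prefers(r, x, y) for r in profile)
    return x if votes_x > len(profile) - votes_x else y


for x, y in combinations("ABC", 2):
    print(x, "vs", y, "->", majority_winner(x, y, profile))
# A beats B, B beats C, and C beats A: the majority relation is cyclic.
```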

Specialists of welfare economics once claimed that Arrow's result had no bearing on economic allocation (e.g. Samuelson 1967), and there is some ambiguity in Arrow (1951) about whether, in an economic context, the best application of the theorem is about individual self-centered tastes over personal consumptions, in which case it is indeed relevant to welfare economics, or about individual ethical values about general allocations. It is now generally considered that the formal framework of social choice can sensibly be applied to the Bergson-Samuelson problem of ranking allocations on the basis of individual tastes. Applications of Arrow's theorem to various economic contexts have been made (see the surveys by Le Breton 1997, Le Breton and Weymark 2002).

4.2 The Informational Basis

Sen (1970a) proposes a further generalization of the social choice framework, permitting consideration of information about individual utility functions, not only preferences. This enlargement is motivated by the impossibility theorem, but also by the ethical relevance of various kinds of data. Distributional issues obviously require interpersonal comparisons of well-being. For instance, an egalitarian evaluation of allocations needs to determine who the worst-off are. It is tempting to think of such comparisons in terms of utilities. This has triggered an important body of literature which has greatly clarified the meaning of various kinds of interpersonal utility comparisons (of levels, differences, etc.) and the relation between them and various social criteria (egalitarianism, utilitarianism, etc.). This literature (esp. d'Aspremont and Gevers 1977) has also provided an important formal analysis of the concept of welfarism, showing that it contains two subcomponents. The first is the Paretian condition that an alternative is equivalent to another when all individuals are indifferent between them. This excludes using non-welfarist information about alternatives, but does not exclude using non-welfarist information about individuals (one individual may be favored because of a physical handicap). The second is an independence condition formulated in terms of utilities. It may be called Independence of Irrelevant Utilities (Hammond 1987), and says that the social ranking of any pair of alternatives must depend only on utility levels at these two alternatives, so that a change in the profile of utility functions which leaves the utility levels at the two alternatives unchanged should not alter how they are ranked. This excludes using non-welfarist information about individuals, but does not exclude using non-welfarist information about alternatives (one may be preferred because it offers more freedom). The combination of the two conditions excludes all non-welfare information. Excellent surveys are provided in d'Aspremont (1985), d'Aspremont and Gevers (2002), Bossert and Weymark (2000), and Mongin and d'Aspremont (1998). Despite the important clarifications achieved by this literature, the introduction of utility functions essentially amounts to going back to old welfare economics, after the failure of new welfare economics, and of Bergson, Samuelson and Arrow, to provide appealing solutions using data on consumer tastes only.

A related issue is how the evaluation of individual well-being must be made, or, equivalently, how interpersonal comparisons must be performed. Welfare economics traditionally relied on “utility”, and the extended informational basis of social choice is mostly formulated with utilities (although extended preference orderings can often be shown to be formally equivalent: for instance, saying that Jones is better off than Smith is equivalent to saying that it is better to be Jones than to be Smith, in some social state). But utility functions may be given a variety of substantive interpretations, so that the same formalism may be used to discuss interpersonal comparisons of resources, opportunities, capabilities and the like. In other words, one may separate two issues: 1) whether one needs more information than individual preference orderings in order to perform interpersonal comparisons; 2) what kind of additional information is ethically relevant (subjective utility or objective notions of opportunities, etc.). The latter issue is directly related to philosophical discussions about how well-being should be conceived and to the “equality of what” debate.

The former issue is still debated. Extending the informational basis by introducing numerical indices of well-being (or equivalent extended orderings) is not the only conceivable extension. Arrow's impossibility is obtained with the condition of Independence of Irrelevant Alternatives. When the theorem is reformulated with utility functions as primitive data, this condition can be logically decomposed into Independence of Irrelevant Utilities (defined above) and a condition of ordinal non-comparability, saying that the ranking of two alternatives must depend only on individuals' ordinal, non-comparable preferences. Arrow's impossibility may be avoided by relaxing the ordinal non-comparability condition, which is the extension of the informational basis to utility functions described above. But it may also be avoided by relaxing Independence of Irrelevant Utilities only. In particular, it makes sense to rank alternatives on the basis of how individuals view them in comparison to other alternatives. For instance, when considering a transfer of consumption goods from Jones to Smith, it is not enough to know that Jones is against it and Smith is in favor of it (this is the only information usable under Arrow's condition). It is also relevant to know whether both consider that Jones has the better bundle, which involves considering other alternatives, for instance ones in which the bundles are permuted. In this vein, Hansson (1973) and Pazner (1979) have proposed to weaken Arrow's axiom so as to make the ranking of two alternatives depend on the indifference curves of individuals at these two alternatives. In particular, Pazner relates this approach to Samuelson's (1977) and concludes that the Bergson-Samuelson social welfare function can indeed be constructed consistently in this way. Interpersonal comparisons may be sensibly made on the sole basis of indifference curves, and therefore on the sole basis of ordinal, non-comparable preferences. This requires broadening the concept of interpersonal comparisons to cover all kinds of comparisons, not just utility comparisons (see Fleurbaey and Hammond 2004).

The concept of informational basis itself need not be limited to issues of interpersonal comparisons. Many conditions of equity, efficiency, separability, responsibility, etc. bear on the kind and quantity of information that is deemed relevant for the ranking of alternatives. The theory of social choice gives a convenient framework for a rigorous analysis of this issue (Fleurbaey 2003a).

4.3 Around Utilitarianism

The theory of social choice with utility functions has greatly systematized our understanding of social welfare functions. For instance, it has shown how to construct a continuum of intermediate social welfare functions between sum-utilitarianism and the maximin criterion, or its lexicographic refinement, the leximin criterion. The leximin ranks distributions of well-being by examining first the worst-off position, then the position just above the worst-off, and so on. For instance, the maximin is indifferent between the three distributions (1,2,5), (1,3,5) and (1,3,6), whereas the leximin ranks them in increasing order. Three other developments around utilitarian social welfare functions are worth mentioning.
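The contrast between maximin and leximin, and one way of filling the continuum between them and sum-utilitarianism, can be sketched in Python. The parametric family used here (an "equally distributed equivalent" utility with a constant inequality-aversion parameter e, the form familiar from Atkinson's inequality index) is an assumption chosen for illustration; it is only one of several possible intermediate families. The function names are hypothetical.

```python
import math
from typing import Sequence, Tuple


def maximin(u: Sequence[float]) -> float:
    """Social welfare as the utility of the worst-off individual."""
    return min(u)


def leximin_key(u: Sequence[float]) -> Tuple[float, ...]:
    """Utilities sorted from the worst-off upward; comparing these tuples
    lexicographically implements the leximin ordering."""
    return tuple(sorted(u))


def ede_utility(u: Sequence[float], e: float) -> float:
    """Equally distributed equivalent utility with inequality aversion e >= 0.

    e = 0 gives the mean (ranking-equivalent to sum-utilitarianism for a fixed
    population); as e grows, the value approaches min(u).
    Assumes every utility is strictly positive.
    """
    n = len(u)
    if e == 1:
        return math.exp(sum(math.log(x) for x in u) / n)  # geometric mean
    return (sum(x ** (1 - e) for x in u) / n) ** (1 / (1 - e))
```

With the three distributions from the text, maximin returns 1 for each, sorting by `leximin_key` reproduces the increasing order, and raising e moves `ede_utility` from the mean toward the minimum.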

The first development is related to the application of theories of equality of opportunity, and involves the construction of mixed social welfare functions which combine utilitarianism and maximin. Suppose that there is a double partition of the population, such that one would like the social welfare function to display infinite inequality aversion within subgroups of the first partition, and zero inequality aversion within subgroups of the second partition. For instance, subgroups of the first partition consist of equally deserving individuals, for whom one would like to obtain equality of outcomes, whereas subgroups of the second partition consist of individuals who have equal opportunities, so that inequalities among them do not matter. Van de gaer (1993) proposes to apply average utilitarianism within each subgroup of the second partition, and to apply the maximin criterion to the vector of average utilities obtained in this way. In other words, the average utilities measure the value of the opportunity sets offered to individuals, and the maximin criterion is applied to these values in order to equalize the value of opportunity sets. Roemer (1998) proposes to apply the maximin criterion within each subgroup of the first partition, and then to apply average utilitarianism to the vector of minimum utilities obtained in this way. In other words, one first tries to equalize outcomes among equally deserving individuals, and then applies a utilitarian calculus. These may not be the only possible combinations of utilitarianism and maximin, but Ooghe, Schokkaert and Van de gaer (2007) and Fleurbaey (2008) give them axiomatic justifications which suggest that they are indeed salient. For a survey of the applications of Roemer's criterion, see Roemer (2002b). For general surveys comparing these social welfare functions to related approaches, see Fleurbaey and Maniquet (2000) and Fleurbaey (2008).
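A minimal sketch of the two criteria, under the simplifying assumption (not made in the text) that the population forms a complete grid with exactly one individual for each combination of opportunity group and responsibility level. Rows are subgroups of the second partition (equal opportunities), columns are subgroups of the first partition (equally deserving). In Roemer's own applications, responsibility levels are typically identified with relative positions within each type, which this toy grid abstracts away; the function names are hypothetical.

```python
from statistics import mean
from typing import List

Grid = List[List[float]]  # u[t][k]: utility in opportunity group t at responsibility level k


def van_de_gaer(u: Grid) -> float:
    """Mean utility within each opportunity group (row), then the minimum."""
    return min(mean(row) for row in u)


def roemer(u: Grid) -> float:
    """Minimum within each group of equally deserving individuals (column),
    then the mean of these minima."""
    return mean(min(row[k] for row in u) for k in range(len(u[0])))
```

Because the mean of column minima can never exceed the minimum of row means, Roemer's value is always at most Van de gaer's on the same grid, although the two criteria can still rank different grids in opposite ways: for the grid [[1, 4], [3, 2]] they give 2.5 and 1.5, while a uniform grid of 2s gives 2 under both.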

Another interesting development deals with intergenerational ethics. With an infinite horizon, it is essentially impossible to combine the Pareto criterion and anonymity (permuting the utilities of some generations does not change social welfare) in a fully satisfactory way, even when utilities are perfectly comparable. This is a similar but more basic impossibility than Arrow's theorem. The following simple example conveys the intuition. Consider the sequence of utilities (1,2,1,2,…). Permute the utility of every odd period with that of the next one. This yields (2,1,2,1,…). Then permute the utility of every even period with that of the next one. This yields (2,2,1,2,…). This third sequence Pareto-dominates the first one (it is better in the first period and identical afterwards), even though it was obtained only through simple permutations. This impossibility is now better understood, and various results point to the “catching up” criterion as the most reasonable extension of sum-utilitarianism to the infinite-horizon setting. This criterion, which does not rank all alternatives, ranks two infinite sequences of utilities whenever their finite-horizon sums of utilities are ranked in the same way for all finite horizons beyond some finite date. Interestingly, this topic has seen parallel and sometimes independent contributions by economists and philosophers (see e.g. Lauwers and Liedekerke 1997, Lauwers and Vallentyne 2003, Roemer and Suzumura 2007).
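The permutation example and the catching-up criterion can be checked numerically, with one caveat: the criterion quantifies over all horizons beyond some date, which no finite computation can verify. The hypothetical helper below therefore tests the condition only over a finite window of horizons and should be read as suggestive evidence, not proof. Utility streams are represented as Python functions from periods (starting at 1) to utilities.

```python
from itertools import accumulate
from typing import Callable, List

Stream = Callable[[int], int]  # period t = 1, 2, ... -> utility


def partial_sums(x: Stream, horizon: int) -> List[int]:
    return list(accumulate(x(t) for t in range(1, horizon + 1)))


def catches_up_on_window(x: Stream, y: Stream, t0: int, horizon: int) -> bool:
    """True if sum_{t<=T} x_t >= sum_{t<=T} y_t for every T in [t0, horizon].

    The catching-up criterion requires this for all T >= t0; a finite window
    can only suggest, not establish, that conclusion."""
    sx, sy = partial_sums(x, horizon), partial_sums(y, horizon)
    return all(a >= b for a, b in zip(sx[t0 - 1:], sy[t0 - 1:]))


# The three sequences of the example in the text.
def first(t: int) -> int:   # (1, 2, 1, 2, ...)
    return 1 if t % 2 == 1 else 2


def second(t: int) -> int:  # (2, 1, 2, 1, ...): odd periods swapped with the next
    return 2 if t % 2 == 1 else 1


def third(t: int) -> int:   # (2, 2, 1, 2, 1, ...): then even periods swapped
    return 2 if t <= 2 else first(t)
```

On these streams the check ranks the third sequence above the first, as Pareto requires. It also ranks the second sequence above the first, although the two differ only by an infinite permutation: catching-up respects finite permutations but not infinite ones, which is one way the impossibility shows up.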

A third development worth mentioning has to do with population ethics. Sum-utilitarianism appears to be overly populationist, since it implies the “repugnant conclusion” (Parfit 1984) that we should aim for a sufficiently large population whose members have lives barely worth living in preference to a smaller and very happy one. Conversely, average utilitarianism is “Malthusian”, preferring a happier population, no matter how small, to a less happy one, no matter how large. Here again there is an interesting tension, namely between accepting all individuals whose utility is greater than zero, accepting equalization of utilities, and avoiding the “repugnant conclusion”. The tension can be shown as follows. Start with an affluent population of any size. Add any number of individuals with positive but almost zero utilities. This does not reduce social welfare. Then equalize utilities, which again does not reduce social welfare. Compared to the initial population, one then obtains a larger population with lower utilities. These lower utilities may be arbitrarily low if the added individuals are sufficiently numerous and have sufficiently low initial utilities, thus yielding the repugnant conclusion (see Arrhenius 2000). Average utilitarianism disvalues additional individuals whose utility is below the average, which is very restrictive for affluent populations. A less restrictive approach is that of critical-level utilitarianism, which disvalues only individuals whose utility level is below some fixed, low but positive threshold. For an extensive overview and a defense of critical-level utilitarianism, see Blackorby, Bossert and Donaldson (1997, 2004). See also Broome (2004).
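The steps of this argument, and how the three criteria react to them, can be traced numerically. The population sizes and utility levels below are hypothetical numbers chosen only to make the effect visible, and utilities are assumed to be measured on a scale where zero marks a neutral life. Function names are illustrative.

```python
from typing import List, Tuple


def total(u: List[float]) -> float:
    """Sum-utilitarian social welfare."""
    return sum(u)


def average(u: List[float]) -> float:
    """Average-utilitarian social welfare."""
    return sum(u) / len(u)


def critical_level(u: List[float], c: float) -> float:
    """Critical-level utilitarian welfare: each life counts for (utility - c)."""
    return sum(x - c for x in u)


def repugnant_steps(n_rich: int, u_rich: float, m_added: int, eps: float
                    ) -> Tuple[List[float], List[float], List[float]]:
    """The three populations of the argument: the initial affluent one, the
    enlarged one with m_added lives at utility eps > 0, and the enlarged one
    after equalizing utilities while keeping their total unchanged."""
    initial = [u_rich] * n_rich
    enlarged = initial + [eps] * m_added
    level = total(enlarged) / len(enlarged)
    equalized = [level] * len(enlarged)
    return initial, enlarged, equalized
```

With 10 people at utility 100 and 10,000 added people at 0.01, sum-utilitarian welfare rises from 1000 to about 1100 and is unchanged by equalization, yet everyone ends up at roughly 0.11. Average utilitarianism already condemns the first step, and so does critical-level utilitarianism with a threshold of 1, because each added life then counts negatively.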

5. Bargaining and Cooperative Games

At the time when Arrow declared social choice to be impossible, Nash (1950) published a possibility theorem for the bargaining problem, which is the problem of finding an option acceptable to two parties among a subset of alternatives. Interestingly, Nash relied on the axiomatic method just as Arrow did, so that both can be given credit for introducing this method into normative economics. In the same decade, a similar contribution was made by Shapley (1953) to the theory of cooperative games. These approaches have developed impressively since then, but doubts have been raised about the ethical relevance of this theory to issues of distributive justice.

5.1 Nash's and Other Solutions

Nash (1950) adopted a welfarist framework, in which alternatives are described only by the utility levels they give to the two parties. His solution consists in choosing the alternative which, in the feasible set, maximizes the product of individual utility gains from the disagreement point (the fallback option if the parties fail to reach an agreement). This solution is therefore related to a particular social welfare function which is in some sense intermediate between sum-utilitarianism and the maximin criterion. Unlike these, however, it is invariant to independent changes in utility zeros and scales, which means that it can be applied with utility functions which are defined only up to a positive affine transformation (i.e., no difference is made between utility function Ui and utility function ai Ui + bi, with ai > 0), such as von Neumann-Morgenstern utility functions. Nash uses this invariance property in his axiomatic characterization of the solution. He also uses another property, which holds for any solution maximizing a social welfare function, namely that removing non-selected options does not alter the choice.

This particular property is criticized in Kalai and Smorodinsky (1975), because it makes the solution ignore the relative size of the sacrifices made by the parties in order to reach a compromise. They propose another solution, which consists of equalizing the parties' sacrifice relative to the maximum gain they could expect in the available set of options. This solution, contrary to Nash's, guarantees that an enlargement of the set of options that is favorable to one party never hurts this party in the ultimate selection. It is very similar to Gauthier's (1986) “minimax relative concession” solution. Many other solutions to the bargaining problem have been proposed, but these two are by far the most prominent.
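Both solutions can be computed for a simple two-party problem. The sketch below assumes, for illustration, a disagreement point at the origin and a strictly decreasing Pareto frontier u2 = f(u1) running from (0, f(0)) to (u1_max, 0); the Nash solution is found by grid search and the Kalai-Smorodinsky solution by bisection along the frontier. The function names and numerical methods are illustrative choices, not part of either author's formulation.

```python
from typing import Callable, Tuple

Frontier = Callable[[float], float]  # u2 = f(u1), strictly decreasing


def nash_solution(f: Frontier, u1_max: float, steps: int = 200_000) -> Tuple[float, float]:
    """Maximize the Nash product u1 * u2 on the frontier by grid search
    (disagreement point assumed to be (0, 0))."""
    best_u1 = max((u1_max * i / steps for i in range(steps + 1)),
                  key=lambda u1: u1 * f(u1))
    return best_u1, f(best_u1)


def ks_solution(f: Frontier, u1_max: float, tol: float = 1e-12) -> Tuple[float, float]:
    """Frontier point where each party's gain is the same fraction of its
    ideal gain (disagreement point assumed to be (0, 0))."""
    a1, a2 = u1_max, f(0.0)  # ideal point: each party's best feasible utility
    lo, hi = 0.0, u1_max
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if f(mid) / a2 > mid / a1:  # party 2 still relatively ahead
            lo = mid
        else:
            hi = mid
    u1 = (lo + hi) / 2
    return u1, f(u1)
```

For the frontier u2 = 1 - u1², the Nash solution is (1/√3, 2/3) while the Kalai-Smorodinsky solution lies at u1 = u2 = (√5 - 1)/2. Doubling the scale of party 1's utility doubles party 1's coordinate in both solutions, reflecting their invariance to independent changes of utility scales.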

The relevance of bargaining theory to the theory of distributive justice has been questioned. First, if the disagreement point is defined, as it arguably should be, in relation to the relative strength of the parties in a “state of nature”, then the scope for redistributive solidarity is very limited. One obtains a theory of “justice as mutual advantage” (Barry 1989, 1995) which is unsatisfactory by the standard of even a minimal conception of impartiality or equality. Second, the welfarist formal framework of the theory of bargaining is informationally poor (Roemer 1986b, 1996). Describing alternatives only in terms of utility levels makes it impossible to take account of basic physical features of allocations. For instance, it is impossible to determine, from utility data alone, which of the alternatives is a competitive equilibrium with equal shares. As another illustration, Nash's and Kalai and Smorodinsky's solutions both recommend allocating an indivisible prize by a fifty-fifty lottery, whether the prize is symmetric (a one-dollar bill for either party) or asymmetric (a one-dollar bill if party 1 wins, ten dollars if party 2 wins).
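The lottery example can be made concrete. Assume, for illustration, that each party's von Neumann-Morgenstern utility is normalized to 0 for losing and w_i for winning, that the disagreement point is (0, 0), and that p is the probability that party 1 receives the prize. Expected utilities are then (p·w1, (1 - p)·w2), so the Nash product p(1 - p)·w1·w2 is maximized at p = 1/2 whatever w1 and w2 are; the Kalai-Smorodinsky solution, which equalizes p·w1/w1 and (1 - p)·w2/w2, also gives p = 1/2. The grid search below, with a hypothetical function name, confirms the Nash case.

```python
def nash_lottery(w1: float, w2: float, steps: int = 10_000) -> float:
    """Probability p that party 1 wins, chosen to maximize the Nash product
    (p * w1) * ((1 - p) * w2); disagreement point (0, 0) assumed."""
    grid = (i / steps for i in range(steps + 1))
    return max(grid, key=lambda p: (p * w1) * ((1 - p) * w2))
```

Calling `nash_lottery(1.0, 1.0)` and `nash_lottery(1.0, 10.0)` returns the same probability, 0.5: once utilities are normalized, nothing in the welfarist data distinguishes the symmetric prize from the asymmetric one.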

Extensive surveys of bargaining theory can be found in Peters (1992) and Thomson (1999).

5.2 Axiomatic Bargaining and Cooperative Games

The basic theory of bargaining focuses on the two-party case, but it can readily be extended to more than two parties. However, when there are more than two parties, it becomes relevant to consider the possibility that subgroups (coalitions) reach separate agreements. Such considerations lead to the broader theory of cooperative games.

This broader theory is, however, more developed for the relatively easy case in which coalition gains are like money prizes that can be allocated arbitrarily among coalition members (the “transferable utility case”). In this case, for the two-party bargaining problem, the Nash and Kalai-Smorodinsky solutions coincide and give equal gains to the two parties. The Shapley value generalizes this solution to any number of parties: it gives each party the average of the marginal contributions that this party brings to the coalitions it can join, the average being taken over all orders in which the parties can join together. In other words, it rewards each party according to the increase in coalition gain that it brings about, on average, by joining others.
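The Shapley value can be computed directly from this definition by averaging each party's marginal contribution over all orders in which the parties can arrive. The brute-force sketch below is exponential in the number of players, which is acceptable only for small illustrative games. The three-player "glove game" used to exercise it (player 1 owns a left glove, players 2 and 3 each own a right glove, and a matched pair is worth 1) is a standard textbook example, not one discussed in this article; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations
from math import factorial
from typing import Callable, Dict, FrozenSet, Hashable, List

Game = Callable[[FrozenSet[Hashable]], int]  # characteristic function v(S)


def shapley_value(players: List[Hashable], v: Game) -> Dict[Hashable, Fraction]:
    """Average marginal contribution of each player over all arrival orders."""
    phi = {p: Fraction(0) for p in players}
    for order in permutations(players):
        coalition: FrozenSet[Hashable] = frozenset()
        for p in order:
            phi[p] += v(coalition | {p}) - v(coalition)
            coalition = coalition | {p}
    n_orders = factorial(len(players))
    return {p: total / n_orders for p, total in phi.items()}


def glove(coalition: FrozenSet[Hashable]) -> int:
    """Hypothetical glove game: player 1 holds a left glove, players 2 and 3
    each hold a right glove, and every matched pair is worth 1."""
    left = 1 if 1 in coalition else 0
    right = len(coalition & {2, 3})
    return min(left, right)
```

In the glove game the owner of the scarce left glove receives 2/3 and each right-glove owner 1/6. In a two-party game where only the pair earns 1, each party receives 1/2, the equal-gains split of the transferable-utility case.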

Another important concept is the core. This notion generalizes the idea that no rational party would accept an agreement that is less favorable than the disagreement point. An allocation of the prize obtained by the whole population (the grand coalition) is in the core if the total amount received by every coalition is at least as great as the prize this coalition could obtain on its own. Otherwise, obviously, the coalition has an incentive to “block” the agreement. Interestingly, the Shapley value need not belong to the core, although it always does in “convex games”, that is, games in which the marginal contribution of a party to a coalition increases as the coalition grows.
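Checking core membership amounts to testing every coalition's blocking condition. A minimal sketch, assuming the characteristic function v takes a frozenset of players and returns that coalition's prize; it uses the hypothetical three-player glove game (player 1 owns a left glove, players 2 and 3 own right gloves, a matched pair is worth 1) as an example, with allocations computed by hand.

```python
from fractions import Fraction
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Hashable, List

Game = Callable[[FrozenSet[Hashable]], int]


def in_core(players: List[Hashable], v: Game, x: Dict[Hashable, Fraction]) -> bool:
    """True if x divides the grand coalition's prize exactly and no
    coalition receives less than it could obtain on its own."""
    if sum(x[p] for p in players) != v(frozenset(players)):
        return False  # x must exactly divide the grand coalition's prize
    for size in range(1, len(players)):
        for coalition in combinations(players, size):
            if sum(x[p] for p in coalition) < v(frozenset(coalition)):
                return False  # this coalition could do better on its own
    return True


def glove(coalition: FrozenSet[Hashable]) -> int:
    """Hypothetical glove game used as an example."""
    left = 1 if 1 in coalition else 0
    right = len(coalition & {2, 3})
    return min(left, right)


# Example allocations for the glove game.
CORE_POINT = {1: Fraction(1), 2: Fraction(0), 3: Fraction(0)}
SHAPLEY_POINT = {1: Fraction(2, 3), 2: Fraction(1, 6), 3: Fraction(1, 6)}
```

In the glove game the only core allocation gives the whole prize to the left-glove owner, so the Shapley allocation (2/3, 1/6, 1/6) is blocked by the coalition of players 1 and 2. In the convex game v(S) = |S|², whose marginal contributions grow with coalition size, the equal split (3, 3, 3), which is its Shapley value by symmetry, lies in the core.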

The basics of this theory are very well presented in Moulin (1988) and Myerson (1991). Cooperative games are distinguished from non-cooperative games by the fact that the players can commit to an agreement, whereas in a non-cooperative game every player always pursues his own interest and cannot commit to a particular strategy. The central concept of the theory of non-cooperative games is the Nash equilibrium (every player chooses his best strategy, taking the others' strategies as given), which has nothing to do with Nash's bargaining solution. It has been shown, however, that Nash's bargaining solution can be obtained as the limit of the (subgame-perfect) equilibrium of a non-cooperative bargaining game in which players alternately make offers and accept or reject the other's offer, as the delay between successive offers becomes negligible.
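The limiting connection can be illustrated with the standard alternating-offers model of dividing a unit pie, assuming linear utilities and per-round discount factors delta1 and delta2. In that model the unique subgame-perfect equilibrium gives the first proposer the share (1 - delta2)/(1 - delta1·delta2), which tends to 1/2, the Nash bargaining split of the pie with disagreement point (0, 0), as both discount factors approach 1. The function name is hypothetical.

```python
def rubinstein_share(delta1: float, delta2: float) -> float:
    """First proposer's equilibrium share of a unit pie in the
    alternating-offers game with per-round discount factors delta1, delta2
    (both strictly between 0 and 1)."""
    return (1 - delta2) / (1 - delta1 * delta2)
```

With equal discount factors δ the share is 1/(1 + δ): about 0.526 for δ = 0.9, reflecting a first-mover advantage, and within 0.0001 of 1/2 for δ = 0.9999.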

6. Fair allocation

The theory of fair allocation studies the allocation of resources in economic models. The seminal contribution to this theory is Kolm (1972), where the criterion of equity as no-envy is extensively analyzed with the conceptual tools of general equilibrium theory. Later the theory borrowed the axiomatic method from bargaining theory, and it now covers a great variety of economic models and encompasses a variety of fairness concepts.

There are several surveys of this theory: Thomson and Varian (1985), Moulin and Thomson (1997) and Maniquet (1999). The most exhaustive is Thomson (1998).

6.1 Equity as No-Envy

An allocation is envy-free if no individual would prefer to have the bundle of another. An egalitarian distribution in which everyone has the same bundle is trivially envy-free, but is generally Pareto-inefficient, which means that there exist other feasible allocations that are better for some individuals and worse for none. A competitive equilibrium with equal shares (i.e., equal budgets) is the central example of a Pareto-efficient and envy-free allocation. It is envy-free because all agents have the same budget set, so that everyone could afford everyone else's bundle. It is Pareto-efficient because, by the first fundamental theorem of welfare economics, any perfectly competitive equilibrium is Pareto-efficient (in the absence of asymmetric information, externalities and public goods).
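For a concrete case, assume (for illustration only) Cobb-Douglas utilities u_i(x, y) = α_i ln x + (1 - α_i) ln y, under which each agent spends the share α_i of income on good 1. With equal incomes normalized to 1 and a total endowment (X, Y), market clearing gives prices p1 = A/X and p2 = (n - A)/Y, where A is the sum of the α_i. The sketch computes this equilibrium, checks it for envy, and computes the marginal rates of substitution, whose equality across agents characterizes interior Pareto-efficient allocations in this setting. Function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Bundle = Tuple[float, float]


def utility(alpha: float, b: Bundle) -> float:
    """Cobb-Douglas utility alpha*ln(x) + (1 - alpha)*ln(y) (assumed form)."""
    x, y = b
    return alpha * math.log(x) + (1 - alpha) * math.log(y)


def mrs(alpha: float, b: Bundle) -> float:
    """Marginal rate of substitution of good 2 for good 1 at bundle b."""
    x, y = b
    return alpha * y / ((1 - alpha) * x)


def ceei(alphas: Sequence[float], total: Bundle) -> Tuple[Tuple[float, float], List[Bundle]]:
    """Competitive equilibrium with equal incomes (each normalized to 1)."""
    n, (X, Y) = len(alphas), total
    A = sum(alphas)
    p1, p2 = A / X, (n - A) / Y  # market-clearing prices
    bundles = [(a / p1, (1 - a) / p2) for a in alphas]  # Cobb-Douglas demands
    return (p1, p2), bundles


def is_envy_free(alphas: Sequence[float], bundles: Sequence[Bundle],
                 tol: float = 1e-12) -> bool:
    """No agent strictly prefers someone else's bundle to his own."""
    for a, own in zip(alphas, bundles):
        u_own = utility(a, own)
        if any(utility(a, other) > u_own + tol for other in bundles):
            return False
    return True
```

With α = (0.2, 0.5, 0.8) and a total endowment of (3, 3), prices are equal and the bundles are (0.4, 1.6), (1, 1) and (1.6, 0.4): no one envies anyone, and all marginal rates of substitution equal 1. The equal split (1, 1) for everyone is also envy-free, but its marginal rates of substitution (0.25, 1, 4) differ, so gains from trade remain, which is the inefficiency mentioned above.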

This concept of equity requires no information other than individual ordinal preferences. It is not welfarist, in the sense that from utility data alone it is impossible to distinguish an envy-free allocation from an allocation with envy. Moreover, an envy-free allocation may be Pareto-indifferent (everyone is indifferent) to another allocation that has envy. On the other hand, this concept is strongly egalitarian, and it is quite natural to view it as capturing the idea of equality of resources (Dworkin 2000). When resources are multi-dimensional, for instance when there are several consumption goods, and when individual preferences are heterogeneous, it is not obvious how to define equality of resources, but the no-envy criterion seems the best concept for this purpose. It guarantees that no individual will consider that another has a better bundle than his own. Varian (1976) has shown that, if preferences are sufficiently diverse and numerous (a continuum), then the competitive equilibrium with equal shares is the only Pareto-efficient and envy-free allocation.

This concept can also be related to the idea of equality of opportunities (Kolm 1996). An allocation is envy-free if and only if the bundles granted to everyone could have been chosen by each individual from the same opportunity set, for instance the set containing all the bundles of the allocation under consideration. In the same vein, the concept of no-envy can also be shown to have a close connection with incentive considerations. A no-envy test is used in the theory of optimal taxation to make sure that no one has an interest in lying about his preferences (Boadway and Keen 2000). Consider the condition that, when an allocation is selected and some individuals' preferences change so that their bundle goes up in their own preference ranking, the selected allocation remains acceptable. A particular version of this condition plays a central role in the theory of incentives under the name of Maskin monotonicity (see e.g. Jackson 2001), but it can also be given an ethical meaning, in terms of neutrality with respect to changes in preferences. Notice that envy-free allocations satisfy this condition, since after such a change of preferences every individual's bundle goes up in his ranking, which precludes any appearance of envy. Conversely, it turns out that this condition implies that the selected allocation must be envy-free, under the additional assumption that, in any selected allocation, individuals with identical preferences must have equivalent bundles. If one also requires the selection to be Pareto-efficient, one obtains a characterization of the competitive equilibrium with equal shares (Gevers 1986).

6.2 Extensions

Kolm's (1972) seminal monograph focused on the simple problem of distributing a bundle of non-produced commodities, and on equity as no-envy. Other economic problems, and other fairness concepts, have been studied since. Here is a non-exhaustive list of other economic problems that have been analyzed: sharing labor and consumption in the production of a consumption good; producing a public good and allocating the contribution burden among individuals; distributing indivisible commodities, with or without the possibility of monetary compensation; matching pairs of individuals (men-women, employers-workers…); distributing compensation for differential needs; rationing on the basis of claims; distributing a divisible commodity when preferences are satiable. In the main stream of this theory, the problem is to select a good subset of allocations under perfect knowledge of the characteristics of the population and of the feasible set. There is also a branch which studies cost and surplus sharing when the only information available is the quantities demanded or contributed by the population, and the cost or surplus may be distributed as a function of these quantities only (see Moulin 2002). The relevance of this literature for political philosophers should not be underestimated. Even models which seem to be devoted to narrow microeconomic allocation problems may turn out to be quite relevant, and some models address issues already salient in political philosophy. This is the case in particular for the model of production of a private good when individuals have unequal skills, which is a rough description of a market economy, and for the model of differential needs. Both models are especially relevant to the analysis of responsibility, talent and handicap, which is now prominent in egalitarian theories of justice. A survey of these two models is given in Fleurbaey and Maniquet (2000), and a survey connecting the various relevant fields of economic analysis to theories of responsibility-sensitive egalitarianism is given in Fleurbaey (1998).

Among the other concepts of fairness which have been introduced, two families are important. The first family contains principles of solidarity, which require individuals to be affected in the same way (they all gain or all lose) by some external shock (change in resources, technology, population size, population characteristics). For instance, if resources or technology improve, then it is natural to hope that everyone will benefit. The second family contains welfare bounds, which provide guarantees to everyone against extreme inequality. For instance, in the division of non-produced commodities, it is very natural to require that nobody should be worse-off than at the equal-split allocation (i.e. the allocation in which everyone gets the per capita amount of resources).

Let us briefly describe some of the insights gained through this theory that seem relevant to political philosophy. A very important one is that there is a conflict between no-envy and solidarity (Moulin and Thomson 1988, 1997). This conflict is well illustrated by the fact that in a market economy, typically any change in technology benefits some agents and hurts others, even when the change is pure progress which could benefit all. Solidarity principles are not obeyed by allocation rules which pass the no-envy test, and these principles point toward a different kind of distribution, named “egalitarian-equivalence” by Pazner and Schmeidler (1978). An allocation is egalitarian-equivalent when everyone is indifferent between his bundle in this allocation and the bundle he would have in an egalitarian economy defined in some simple way. For instance, the egalitarian economy may be such that everyone has the same bundle. In this case, an egalitarian-equivalent allocation is such that everyone is indifferent between his bundle and one particular reference bundle. In more sophisticated versions, the egalitarian economy is such that everyone has the same budget set, in some particular family of budget sets. Egalitarian-equivalence is a serious alternative to no-envy for the definition of equality of resources, and its superiority in terms of solidarity is quite significant, as the next point shows.
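A minimal sketch for a two-agent, two-good exchange economy, assuming Cobb-Douglas utilities and taking as reference the bundles λΩ on the ray through the aggregate endowment Ω (one simple version of egalitarian-equivalence, in which the egalitarian economy gives everyone the same bundle). For each agent, `lam` finds the scale λ at which that agent is indifferent between his bundle and λΩ; an allocation is egalitarian-equivalent in this sense when the two scales coincide. The efficient one is located by bisection along the contract curve. All function names are hypothetical.

```python
import math
from typing import Tuple

Bundle = Tuple[float, float]


def utility(alpha: float, b: Bundle) -> float:
    """Cobb-Douglas utility alpha*ln(x) + (1 - alpha)*ln(y) (assumed form)."""
    x, y = b
    return alpha * math.log(x) + (1 - alpha) * math.log(y)


def lam(alpha: float, b: Bundle, total: Bundle) -> float:
    """Scale lambda such that the agent is indifferent between b and
    lambda * total. With Cobb-Douglas utility,
    u(lambda * total) = ln(lambda) + u(total), so lambda has a closed form."""
    return math.exp(utility(alpha, b) - utility(alpha, total))


def efficient_ee(a1: float, a2: float, total: Bundle,
                 tol: float = 1e-12) -> Tuple[Bundle, Bundle]:
    X, Y = total
    k1, k2 = a1 / (1 - a1), a2 / (1 - a2)

    def split(x1: float) -> Tuple[Bundle, Bundle]:
        # Point of the contract curve where the marginal rates of
        # substitution are equal: k1*y1/x1 == k2*(Y - y1)/(X - x1).
        y1 = k2 * Y * x1 / (k1 * (X - x1) + k2 * x1)
        return (x1, y1), (X - x1, Y - y1)

    lo, hi = 0.0, X
    while hi - lo > tol:
        mid = (lo + hi) / 2
        z1, z2 = split(mid)
        if lam(a1, z1, total) < lam(a2, z2, total):
            lo = mid  # agent 1 is behind: move along the curve toward agent 1
        else:
            hi = mid
    return split((lo + hi) / 2)
```

Because the equal-split allocation is itself egalitarian-equivalent with λ = 1/2, the efficient egalitarian-equivalent allocation found this way always has λ ≥ 1/2, so it also satisfies the equal-split welfare bound described earlier. With α = (0.2, 0.8) and Ω = (1, 1), it gives agent 1 the bundle (0.2, 0.8).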

The second insight is that no-envy itself is a combination of conflicting principles (Fleurbaey and Maniquet 2000, Fleurbaey 2008). This conflict becomes apparent in models with talents and handicaps. For instance, Pazner and Schmeidler (1974) found that envy-free and Pareto-efficient allocations may fail to exist in the context of production with unequal skills (when there are high-skilled individuals who are strongly averse to labor). This results from an incompatibility between a compensation principle, saying that individuals with identical preferences should have equivalent bundles (suppressing inequalities due to skills), and a reward principle, saying that individuals with the same skills should not envy each other (no preferential treatment on the basis of different preferences). Both principles are logical implications of the no-envy test. This is obvious for the latter. For the former, notice that no-envy among individuals with the same preferences means that they must have bundles on the same indifference curve. Interestingly, the compensation principle is a logical consequence of solidarity principles and is therefore perfectly compatible with them. It is very well satisfied by egalitarian-equivalent allocation rules. In contrast, it is violated by Dworkin's hypothetical insurance, which applies the no-envy test behind a veil of ignorance (see Dworkin 2000, Fleurbaey 2008, and § 3.2).

The theory of fair allocation contains many positive results about the existence of fair allocations, for various fairness concepts, and this stands in contrast to Arrow's impossibility theorem in the theory of social choice. The difference between the two theories has often been attributed to the fact that they perform different exercises (Sen 1986, Moulin and Thomson 1997). The theory of social choice, it is said, seeks a ranking of all options, while the theory of fair allocation focuses on the selection of a subset of allocations. This explanation is not convincing, since selecting a subset of fair allocations is formally equivalent to defining a full-blown, albeit coarse, ranking with “good” and “bad” allocations. A more convincing explanation lies in the fact that the information used in fairness criteria is richer than what Arrow's Independence of Irrelevant Alternatives allows (Fleurbaey, Suzumura and Tadenuma 2002). For instance, in order to check that an allocation is envy-free while another displays envy, it is not enough to know how individuals rank these two allocations in their preferences. One must know individual preferences over other alternatives involving permutations of bundles (an envious individual would prefer an allocation in which his bundle is swapped with one he envies). In this vein, one can extend the theory of fair allocation so as to construct fine-grained rankings of all allocations. This is very useful for the discussion of public policies in “second-best” settings, that is, in settings where incentive constraints make it impossible to reach Pareto-efficiency. With this extension, the theory of fair allocation can be connected to the theory of optimal taxation (Maniquet 2007), and becomes even more relevant to the political philosophy of redistributive institutions (Fleurbaey 2007). It turns out that the egalitarian-equivalence approach is very convenient for defining fine-grained orderings of allocations, which provides an additional argument in its favor.
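The informational point can be checked on a small hypothetical example with two individuals, two goods and linear preferences (all numbers are illustrative assumptions). Under profiles A and B, each individual ranks allocations z and z_prime in exactly the same way, so any rule satisfying Arrow's Independence of Irrelevant Alternatives must rank z and z_prime identically under both profiles. Yet z is envy-free under A and displays envy under B, because envy depends on how each individual evaluates the other's bundle, which amounts to consulting preferences over a third allocation in which the bundles are swapped. Function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Bundle = Tuple[float, float]
Utility = Callable[[Bundle], float]


def linear(w: Tuple[float, float]) -> Utility:
    """Hypothetical linear preferences u(x, y) = w[0]*x + w[1]*y."""
    return lambda b: w[0] * b[0] + w[1] * b[1]


def envy_free(prefs: Sequence[Utility], alloc: Sequence[Bundle]) -> bool:
    """Each individual weakly prefers his own bundle to every bundle in alloc."""
    return all(u(alloc[i]) >= u(other)
               for i, u in enumerate(prefs) for other in alloc)


def own_bundle_rankings(prefs: Sequence[Utility], z: Sequence[Bundle],
                        z_prime: Sequence[Bundle]) -> List[int]:
    """The only data Arrow's condition lets a ranking of {z, z_prime} use when
    preferences are self-centered: +1 if i prefers z, -1 if z_prime, 0 if tied."""
    return [(u(z[i]) > u(z_prime[i])) - (u(z[i]) < u(z_prime[i]))
            for i, u in enumerate(prefs)]


z = [(1.0, 0.0), (0.0, 1.0)]
z_prime = [(0.3, 0.3), (0.3, 0.3)]
profile_a = [linear((2.0, 1.0)), linear((1.0, 2.0))]
profile_b = [linear((1.0, 1.5)), linear((1.0, 2.0))]
```

Under both profiles each individual prefers z to z_prime, while z_prime (equal bundles) is always envy-free. A ranking that puts envy-free allocations above envious ones therefore ranks z at least as high as z_prime under A but strictly below it under B, which Arrow's condition forbids.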

7. Related topics

7.1 Freedom and Rights

Sen (1970b) and Gibbard (1974) propose, within the framework of social choice, paradoxes showing that it may not be easy to rank alternatives when some individuals have a special right to rank alternatives that differ only in matters belonging to their private sphere, and when their preferences are sensitive to what happens in other individuals' private spheres. For instance, as an illustration of Gibbard's paradox, individuals have the right to choose the color of their shirt, but, in terms of social ranking, should A and B wear the same color or different colors, when A wants to imitate B and B wants to have a different color? There is a huge literature on this topic, and after Gaertner, Pattanaik and Suzumura (1992), who argue that no matter what choice A and B make, their right to choose their own shirt is respected, a good part of it examines how to describe rights properly. The framework of game forms is an interesting alternative to the social choice model for this purpose. Recent surveys can be found in Arrow, Sen and Suzumura (1997, vol. 2).
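The shirt example can be encoded directly. A minimal sketch, assuming two colors, that A strictly prefers matching B, that B strictly prefers not matching A, and that each person's right makes society rank two states exactly as that person does whenever the states differ only in that person's shirt. A depth-first search then finds a cycle in the resulting social strict preference; since the cycle runs through all four states, every state is beaten by another and none is socially best. Names are hypothetical.

```python
from itertools import product
from typing import Callable, List, Optional, Tuple

State = Tuple[str, str]  # (color of A's shirt, color of B's shirt)
COLORS = ("white", "blue")
STATES: List[State] = list(product(COLORS, COLORS))


def a_prefers(s: State, t: State) -> bool:
    """A is a conformist: strictly prefers matching B's color."""
    return s[0] == s[1] and t[0] != t[1]


def b_prefers(s: State, t: State) -> bool:
    """B is a nonconformist: strictly prefers not matching A's color."""
    return s[0] != s[1] and t[0] == t[1]


def socially_better(s: State, t: State) -> bool:
    """Social strict preference generated by the rights alone: A decides
    between states differing only in A's shirt, B between states differing
    only in B's shirt. Other pairs are left unranked."""
    if s[1] == t[1] and s[0] != t[0]:
        return a_prefers(s, t)
    if s[0] == t[0] and s[1] != t[1]:
        return b_prefers(s, t)
    return False


def find_cycle(states: List[State],
               better: Callable[[State, State], bool]) -> Optional[List[State]]:
    """Depth-first search for a cycle s1 > s2 > ... > sk > s1."""
    edges = {s: [t for t in states if better(s, t)] for s in states}

    def dfs(path: List[State]) -> Optional[List[State]]:
        for nxt in edges[path[-1]]:
            if nxt in path:
                return path[path.index(nxt):]
            found = dfs(path + [nxt])
            if found:
                return found
        return None

    for start in states:
        cycle = dfs([start])
        if cycle:
            return cycle
    return None
```

Calling `find_cycle(STATES, socially_better)` returns the four states in the order (white, white), (blue, white), (blue, blue), (white, blue), each socially better than the next and the last better than the first.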

Apart from this formal analysis of rights, economic theory is not very well connected to libertarian philosophy, since economic models show that, outside the very specific context of perfect competition with complete markets, perfect information, no externalities and no public goods, the laissez-faire allocation is typically inefficient and arbitrarily unequal. Therefore libertarian philosophers do not find much help or inspiration in economic theory, and there is little cross-fertilization in this area.

7.2 Capabilities

The capability approach, developed in Sen (1985, 1992), is a particular response to the “equality of what” debate, and is presented by Sen as the best way to think about the interpersonal comparisons to be made when evaluating social situations by the standard of distributive justice. It is often presented as intermediate between resourcist and welfarist approaches, but it is perhaps more accurate to present it as more general. A “functioning” is any doing or being in the life of an individual. A “capability set” is the set of functioning vectors to which an individual has access. This approach has attracted a lot of interest, in particular because it makes it possible to take into account all the relevant dimensions of life, in contrast with the resourcist and welfarist approaches, which can be criticized as too narrow.

Being so general, the approach needs to be specified in order to inspire original applications. The empirical literature that takes inspiration from the capability approach is now very large. As noted in Robeyns (2006) and Schokkaert (2007b), in many cases these empirical studies are essentially similar, apart from terminology, to sociological studies of living conditions. But there are more original applications, e.g., when an evaluation of development programs that takes account of capabilities is contrasted with cost-benefit analysis (Alkire 2002), or when a list of basic capabilities is enshrined in a theory of what a just society should provide to all citizens (Nussbaum 2000). More generally, all studies which seek to incorporate multiple dimensions of quality of life into the evaluation of individual and social situations can be considered, broadly speaking, as pertaining to this approach.

Two central questions pervade the empirical applications. The first concerns the distinction between capabilities and functionings. The latter are easier to observe, because individual achievements are more accessible to the statistician than pure potentialities. There is also the normative issue of whether the evaluation of individual situations should be based on capabilities only, viewed as opportunity sets, or should take account of achieved functionings as well. The second central question is the index problem, which has also been raised about Rawls' theory of primary goods. There are many dimensions of functionings and capabilities, and not all of them are equally valuable. Defining a proper system of weights has proven problematic, in connection with the difficulties of social choice theory.
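The index problem is easy to exhibit. A minimal sketch with two hypothetical individuals and two functionings scored on a common 0 to 1 scale (the scores and weights are invented for illustration): a weighted sum ranks them one way or the other depending on the weights, whereas vector dominance, which needs no weights, yields only a partial ranking and is silent on this pair. Function names are hypothetical.

```python
from typing import Sequence


def weighted_index(f: Sequence[float], w: Sequence[float]) -> float:
    """Weighted-sum index of a functioning vector f with weights w."""
    return sum(fi * wi for fi, wi in zip(f, w))


def dominates(f: Sequence[float], g: Sequence[float]) -> bool:
    """Vector dominance: at least as good in every dimension and strictly
    better in at least one. This partial ranking needs no weights."""
    return all(a >= b for a, b in zip(f, g)) and any(a > b for a, b in zip(f, g))


# Hypothetical scores on (health, education), each on a 0-1 scale.
ann, bob = (0.9, 0.4), (0.5, 0.8)
```

With weights (0.7, 0.3) Ann comes out ahead (0.75 against 0.59); with weights (0.3, 0.7) Bob does (0.71 against 0.55), and neither vector dominates the other.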

Recent surveys on this approach and its applications can be found in Basu and Lopez-Calva (2004), Kuklys (2005), Robeyns (2006), and Robeyns and Van der Veen (2007).

7.3 Marxism

Roemer (1982, 1986c) proposes a renewed economic analysis of Marxian concepts, in particular exploitation. He shows that, even if the theory of labor value is flawed as a causal theory of prices, it may be consistently used in order to measure exploitation and analyze the correlation between exploitation and the class status of individuals. However, he considers that this concept of exploitation is ethically not very appealing, since it roughly amounts to requiring individual consumption to be proportional to labor, and he suggests a different definition of exploitation, in terms of undue advantage due to unequal distribution of some assets. This leads him eventually to merge this line of analysis with the general stream of egalitarian theories of justice. The idea that consumption should be proportional to labor has also received some attention in the theory of fair allocation (Moulin 1990, Roemer & Silvestre 1993). See Roemer (1986a) for a collection of philosophical and economic essays on Marxism.
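The benchmark that this concept of exploitation roughly amounts to, namely consumption proportional to labor, can be sketched in a few lines. This is a deliberate simplification and not Roemer's own formal definition: it assumes a hypothetical one-good economy in which the whole net product is consumed, and it classifies an individual as "exploited" when consuming less than a labor-proportional share and as an "exploiter" when consuming more. All names and figures are illustrative.

```python
def labor_proportional_shares(output: float, labor: dict[str, float]) -> dict[str, float]:
    """Split total output among individuals in proportion to labor supplied."""
    total_labor = sum(labor.values())
    return {name: output * hours / total_labor for name, hours in labor.items()}


def exploitation_status(consumption: dict[str, float],
                        labor: dict[str, float],
                        tol: float = 1e-9) -> dict[str, str]:
    """Compare each person's consumption with the labor-proportional benchmark.
    Assumes (hypothetically) a single good and that all output is consumed."""
    output = sum(consumption.values())
    benchmark = labor_proportional_shares(output, labor)
    status = {}
    for name, c in consumption.items():
        gap = c - benchmark[name]
        if gap < -tol:
            status[name] = "exploited"   # consumes less than labor share
        elif gap > tol:
            status[name] = "exploiter"   # consumes more than labor share
        else:
            status[name] = "neither"
    return status


# Hypothetical economy: two workers and an asset owner who works little.
labor = {"worker_1": 40.0, "worker_2": 40.0, "owner": 10.0}
consumption = {"worker_1": 30.0, "worker_2": 30.0, "owner": 30.0}
print(exploitation_status(consumption, labor))
# {'worker_1': 'exploited', 'worker_2': 'exploited', 'owner': 'exploiter'}
```

Here total output is 90 and total labor is 90 hours, so the labor-proportional shares are 40, 40 and 10; each worker consumes 30 and the owner 30. The example also shows why Roemer finds the criterion ethically unappealing on its own: it condemns any departure from proportionality, whatever its cause, whereas his alternative locates exploitation in advantages due to an unequal distribution of assets.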

7.4 Opinions

In normative economics, theorists have often been wary of relying on concepts which are disconnected from the layman's intuition. Questionnaire surveys, usually performed among students, have indeed given some disturbing results. Welfarist approaches have been questioned by the results of Yaari and Bar Hillel (1984), the Pigou-Dalton principle has been critically scrutinized by Amiel and Cowell (1992), the principles of compensation and reward have obtained mixed support in Schokkaert and Devooght (1998), etc. It is of course debatable how much theorists can learn from such results (Bossert 1998).

Surveys of this questionnaire approach are available in Schokkaert and Overlaet (1989), Amiel and Cowell (1999), Schokkaert (1999). Philosophers have also performed similar inquiries (Miller 1992).

7.5 Altruism and Reciprocity

It is standard in normative economics, as in political philosophy, to evaluate individual well-being on the basis of self-centered preferences, utility or advantage. Feelings of altruism, jealousy, etc. are ignored in order not to make the allocation of resources depend on the contingent distribution of benevolent and malevolent feelings among the population (see e.g. Goodin 1986, Harsanyi 1982). It may be worth mentioning here that the no-envy criterion discussed above has nothing to do with interpersonal feelings, since it is defined only with self-centered preferences. When an individual “envies” another in this particular sense, he simply prefers the other's consumption to his own, but no feeling is involved (he might not even be aware of the existence of the other individual).
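The point that "envy" in the no-envy criterion involves no feelings can be seen from how the test is computed: each individual's self-centered utility function is simply evaluated at another person's bundle. The sketch below assumes two hypothetical goods and Cobb-Douglas utilities chosen purely for illustration; none of these names or functional forms come from the text.

```python
from typing import Callable

Bundle = tuple[float, float]          # quantities of two hypothetical goods
Utility = Callable[[Bundle], float]   # self-centered: depends only on a bundle


def envies(u_i: Utility, own: Bundle, other: Bundle) -> bool:
    """i 'envies' j iff i's own utility function ranks j's bundle above i's.
    No interpersonal feeling enters: only i's preferences over bundles."""
    return u_i(other) > u_i(own)


def is_envy_free(utilities: dict[str, Utility],
                 allocation: dict[str, Bundle]) -> bool:
    """An allocation satisfies no-envy if nobody prefers anyone else's bundle."""
    return not any(
        envies(utilities[i], allocation[i], allocation[j])
        for i in allocation
        for j in allocation
        if i != j
    )


# Hypothetical Cobb-Douglas preferences.
utilities: dict[str, Utility] = {
    "ann": lambda b: b[0] ** 0.8 * b[1] ** 0.2,  # cares mostly about good 1
    "bob": lambda b: b[0] ** 0.2 * b[1] ** 0.8,  # cares mostly about good 2
}
allocation = {"ann": (8.0, 2.0), "bob": (2.0, 8.0)}
print(is_envy_free(utilities, allocation))  # True
```

Swapping the two bundles makes the allocation fail the test, since each individual would then prefer the bundle held by the other. Either verdict can be reached without anyone knowing or caring about anyone else, which is why the criterion is compatible with ignoring altruism and jealousy.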

But positive economics is quite relevantly interested in studying the impact of individual feelings on behavior. Homo œconomicus may be rational without being narrowly focused on his own consumption. The analysis of labor relations, strategic interactions, transfers within the family, and generous gifts all require a more complex picture of human relations (Fehr and Fischbacher 2002). Reciprocity, in particular, seems to be a powerful source of motivation, leading individuals to incur substantial costs in order to reward nice partners and punish faulty partners (Fehr and Gachter 2000). For an extensive survey of this branch of the economic literature, see Gérard-Varet, Kolm and Mercier-Ythier (2004).

7.6 Happiness studies

The literature on happiness has surged in the last decade. The findings are well summarized in many surveys (see in particular Diener (1994, 2000), Diener et al. (1999), Frey and Stutzer (2002), Kahneman et al. (1999), Kahneman and Krueger (2006), Layard (2005), Oswald (1997), Van Praag and Ferrer-i-Carbonell (2008)), and reveal the main factors of happiness: personal temperament, health, and social connections (in particular being married and employed). In contrast, the impact of material wealth appears moderate, and more a matter of relative position than of absolute comfort, at least above a minimal level of affluence.

A hotly debated question is what to make of this approach in welfare economics. There is a wide variety of positions, from those who propose to measure and maximize national happiness (Diener 2000, Kahneman et al. 2004, Layard 2005) to those who firmly oppose this idea on various grounds (Burchardt 2006, Nussbaum 2008, among others). There seems to be a consensus that happiness studies suggest a welcome shift of focus, in social evaluation, from purely materialistic performance to a broader set of values. Above all, one can consider that the traditional suspicion among economists about the possibility of measuring subjective well-being should be assuaged by this recent progress.

However, the fact that subjective well-being can be measured does not imply that it ought to be taken as the metric of social evaluation. Surprisingly, the literature on happiness refers very little to the lively philosophical debates of the previous decades about welfarism, and in particular the criticisms raised by Rawls (1982) and Sen (1985) against utilitarianism. (Two exceptions are Burchardt (2006) and Schokkaert (2007). Layard (2005) also mentions and quickly rebuts some of the arguments against welfarism.) Nonetheless, one of the key elements of that earlier debate, namely, the fact that subjective adaptation is likely to hide objective inequalities, shows up in the data, challenging happiness specialists. Subjective well-being seems relatively immune in the long run to many aspects of objective circumstances, individuals displaying a remarkable ability to adapt. After most important life events, satisfaction returns to its usual level and the various affects return to their usual frequency. If subjective well-being is not so sensitive to objective circumstances, should we stop caring about inequalities, safety, and productivity?

Bibliography

- Alkire S. 2002, Valuing Freedoms: Sen's Capability Approach and Poverty Reduction, New York: Oxford University Press.

- Amiel Y., F.A. Cowell 1992, “Measurement of income inequality: Experimental tests by questionnaire”, Journal of Public Economics 47: 3-26.

- Amiel Y., F.A. Cowell 1999, Thinking about inequality. Personal judgment and income distributions, Cambridge: Cambridge University Press.

- Arrhenius G. 2000, “An impossibility theorem for welfarist axiologies”, Economics and Philosophy 16: 247-266.

- Arrow K.J. 1951, Social Choice and Individual Values, New York: Wiley. 2nd ed., 1963.

- Arrow K.J. 1983, “Contributions to Welfare Economics”, in E.C. Brown, R.M. Solow (eds.), Paul Samuelson and Modern Economic Theory, New York: McGraw-Hill.

- Arrow K.J., A.K. Sen, K. Suzumura (eds.) 1997, Social Choice Re-examined, 2 vol., International Economic Association, New York: St Martin's Press and London: Macmillan.

- Arrow K.J., A.K. Sen, K. Suzumura (eds.) 2002, Handbook of Social Choice and Welfare, vol. 1, Amsterdam: Elsevier-North-Holland.

- d'Aspremont C. 1985, “Axioms for Social Welfare Orderings”, in Hurwicz, Schmeidler, Sonnenschein (eds.).

- d'Aspremont C., L. Gevers 1977, “Equity and the informational basis of collective choice”, Review of Economic Studies 44: 199-210.

- d'Aspremont C., L. Gevers 2002, “Social welfare functionals and interpersonal comparability”, in Arrow, Sen, Suzumura (eds.).

- Atkinson A.B. 1970, “On the measurement of inequality”, Journal of Economic Theory 2: 244-263.

- Atkinson A.B. 2001, “The strange disappearance of welfare economics”, Kyklos 54: 193-206.

- Atkinson A.B., F. Bourguignon (eds.) 2000, Handbook of Income Distribution, vol. 1, Amsterdam: Elsevier-North-Holland.

- Auerbach A.J., M. Feldstein (eds.) 1987, Handbook of Public Economics, vol. 2, Amsterdam: North-Holland.

- Barry B. 1989, Theories of justice, Oxford: Clarendon Press.

- Barry B. 1995, Justice as Impartiality, Oxford: Clarendon Press.

- Basu K., L. Lopez-Calva 2004, “Functionings and capabilities”, forthcoming in K.J. Arrow, A.K. Sen, K. Suzumura (eds.), Handbook of Social Choice and Welfare, vol. 2, Amsterdam: North-Holland.

- Ben Porath E., I. Gilboa, D. Schmeidler 1997, “On the measurement of inequality under uncertainty”, Journal of Economic Theory 75: 194-204.

- Bergson A. 1938, “A reformulation of certain aspects of welfare economics”, Quarterly Journal of Economics 52: 310-334.

- Black D. 1958, The Theory of Committees and Elections, Cambridge: Cambridge University Press.

- Blackorby C., W. Bossert, D. Donaldson 1997, “Critical-level utilitarianism and the population-ethics dilemma”, Economics and Philosophy 13: 197-230.

- Blackorby C., W. Bossert, D. Donaldson 2005, Population Ethics, Cambridge: Cambridge University Press.

- Blackorby C., D. Donaldson 1990, “A review article: The case against the use of the sum of compensating variations in cost-benefit analysis”, Canadian Journal of Economics 23: 471-494.

- Boadway R., N. Bruce 1984, Welfare Economics, Oxford: Basil Blackwell.

- Boadway R., M. Keen 2000, “Redistribution”, in Atkinson, Bourguignon (eds.).

- Bossert W. 1998, “Comment”, in Laslier et al. (eds.).

- Bossert W., J.A. Weymark 2000, “Utility in Social Choice”, forthcoming in S. Barberà, P. Hammond, C. Seidl (eds.), Handbook of Utility Theory, vol. 2, Dordrecht: Kluwer.

- Bradley R. 2005, “Bayesian utilitarianism and probability homogeneity”, Social Choice and Welfare 24: 221-251.

- Brams S.J., P.C. Fishburn 2002, “Voting Procedures”, in Arrow, Sen, Suzumura (eds.).

- Broome J. 1991, Weighing Goods. Equality, Uncertainty, and Time, Oxford: Basil Blackwell.

- Broome J. 2004, Weighing Lives, Oxford: Oxford University Press.

- Burchardt T. 2006, “Happiness and social policy: barking up the right tree in the wrong neck of the woods”, Social Policy Review 18: 145-164.

- Chakravarty S.R. 1990, Ethical Social Index Numbers, Berlin: Springer-Verlag.

- Chipman J.S., J.C. Moore 1978, “The New Welfare Economics, 1939-1974”, International Economic Review 19: 547-584.

- Cowell F.A. 2000, “Measurement of inequality”, in Atkinson, Bourguignon (eds.).

- Deschamps R., L. Gevers 1979, “Separability, risk-bearing, and social welfare judgments”, in Laffont (ed.).

- Diamond P.A. 1967, “Cardinal welfare, individualistic ethics, and interpersonal comparisons of utility: A comment”, Journal of Political Economy 75: 765-766.

- Diener E. 1994, “Assessing subjective well-being: Progress and opportunities”, Social Indicators Research 31: 103-157.

- Diener E. 2000, “Subjective well-being: The science of happiness and a proposal for a national index”, American Psychologist 55: 34-43.

- Diener E., E.M. Suh, R.E. Lucas, H.L. Smith 1999, “Subjective well-being: Three decades of progress”, Psychological Bulletin 125: 276-302.

- Drèze J., N.H. Stern 1987, “The theory of cost-benefit analysis”, in Auerbach, Feldstein (eds.).

- Dutta B. 2002, “Inequality, poverty and welfare”, in Arrow, Sen, Suzumura (eds.).

- Dworkin R. 2000, Sovereign Virtue. The Theory and Practice of Equality, Cambridge, Mass.: Harvard University Press.

- Dworkin R. 2002, “Sovereign Virtue revisited”, Ethics 113: 106-143.

- Fehr E., U. Fischbacher 2002, “Why social preferences matter. The impact of non-selfish motives on competition, cooperation and incentives”, Economic Journal 112: 1-33.

- Fehr E., S. Gachter 2000, “Fairness and retaliation: The economics of reciprocity”, Journal of Economic Perspectives 14: 159-181.

- Feiwel G.E. (ed.) 1987, Arrow and the Foundations of the Theory of Economic Policy, New York: New York University Press.

- Fleurbaey M. 1996, Théories économiques de la justice, Paris: Economica.

- Fleurbaey M. 2007, “Social choice and just institutions: New perspectives”, Economics and Philosophy 23: 15-43.

- Fleurbaey M. 2008, Fairness, Responsibility, and Welfare, Oxford: Oxford University Press.

- Fleurbaey M., P. Hammond 2004, “Interpersonally comparable utility”, in S. Barberà, P. Hammond, C. Seidl (eds.), Handbook of Utility Theory, vol. 2, Dordrecht: Kluwer.

- Fleurbaey M., P. Michel 2001, “Intergenerational transfers and inequality aversion”, Mathematical Social Sciences 42: 1-11.

- Fleurbaey M., F. Maniquet 2000, “Compensation and responsibility”, forthcoming in Arrow, Sen, Suzumura (eds.), Handbook of Social Choice and Welfare, vol. 2.

- Fleurbaey M., P. Mongin 2005, “The news of the death of welfare economics is greatly exaggerated”, Social Choice and Welfare 25: 381-418.

- Fleurbaey M., K. Suzumura, K. Tadenuma 2005, “The informational basis of the theory of fairness”, Social Choice and Welfare 24: 311-342.

- Frankfurt H.G. 1987, “Equality as a moral ideal”, Ethics 98: 21-43.

- Frey B., A. Stutzer 2002, Happiness and Economics: How the Economy and Institutions Affect Human Well-Being, Princeton: Princeton University Press.